Machine learning models are increasingly deployed on edge devices such as smartphones, IoT sensors, and embedded systems. These devices often come with strict constraints on computational power, memory, and energy consumption, and model quantization has emerged as one of the main techniques for working within them. This article explains model quantization with simple examples, and also explores the rapid rise of edge AI and its impact on various industries.

Table of Contents

- Introduction

- Understanding Model Quantization

- Types of Quantization Techniques

- Examples and Simple Explanations

- How Model Quantization Boosts Edge AI

- The Rise of Edge AI

- Future Directions in Model Quantization and Edge AI

- Conclusion

Introduction

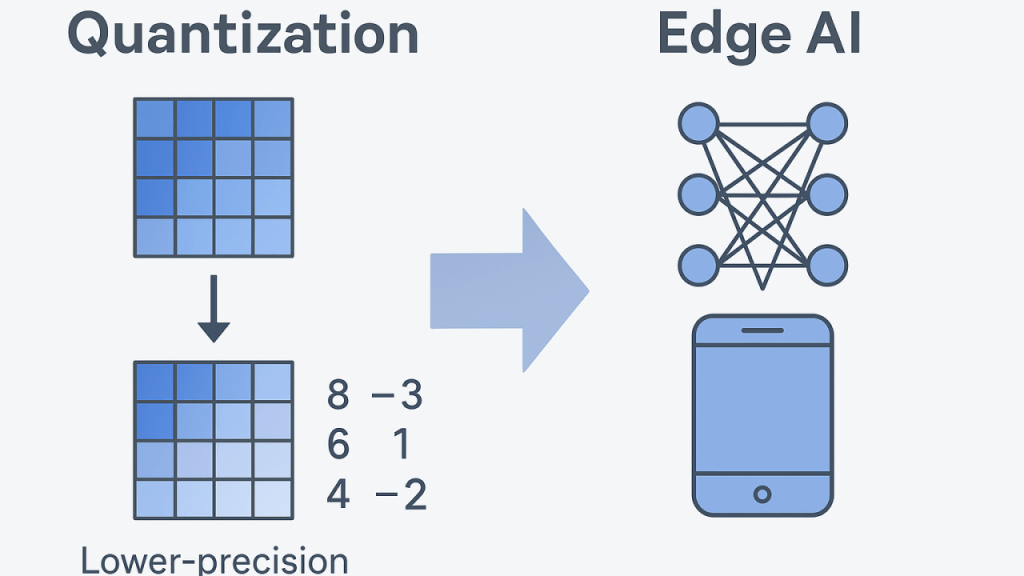

Artificial intelligence (AI) is no longer confined to high-powered data centers. As our world becomes increasingly interconnected, there is a growing demand for AI that can operate efficiently in decentralized settings. Edge AI refers to AI algorithms and applications that run locally on hardware at the edge of the network rather than depending on centralized cloud resources. However, running sophisticated models on devices with limited resources can be challenging. One of the most promising approaches to overcoming these challenges is model quantization: a process that lowers the numerical precision of a machine learning model, and with it the memory and compute cost, without significantly sacrificing accuracy.

Model quantization converts the numerical representations of model parameters and activations from high-precision (typically 32-bit floating-point) to lower-precision formats (such as 8-bit integers). This reduction in precision leads to smaller model sizes, faster inference times, and lower energy consumption, making it ideal for deployment on resource-constrained devices. This article will explore the key aspects of model quantization, present clear examples, and discuss how the advancements in this field are propelling the growth of edge AI.

Understanding Model Quantization

What Is Model Quantization?

At its core, model quantization is a technique used to reduce the number of bits required to represent the parameters (and often the activations) of a neural network. Neural networks are typically trained using 32-bit floating-point numbers (FP32), which provide a high degree of precision. However, in many real-world applications, especially on edge devices, this level of precision is often not necessary. Quantization involves converting these 32-bit values into a lower-bit representation, such as 16-bit floating point (FP16) or 8-bit integers (INT8).

This process can be broken down into a few steps:

- Mapping: The original range of floating-point values is mapped to a smaller set of discrete values.

- Scaling and Zero-Point Calculation: A scale factor and zero-point are computed from the original range. Together they define how floating-point values map to quantized values, and how quantized values map back to approximate floating-point values.

- Rounding: The values are rounded to the nearest discrete quantized value.

By reducing the precision, quantization decreases the memory footprint and speeds up the computation, which is particularly beneficial for real-time applications on edge devices.
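The steps above can be sketched in a few lines of plain Python. This is a minimal illustration of one common scheme (unsigned 8-bit affine quantization), not a description of any particular framework; the function names and the sample `weights` list are made up for the example.

```python
def quantization_params(x_min, x_max, num_bits=8):
    """Compute the scale and zero-point for an unsigned affine quantizer."""
    qmin, qmax = 0, 2 ** num_bits - 1
    # Widen the range to include 0.0 so that zero is exactly representable.
    x_min, x_max = min(x_min, 0.0), max(x_max, 0.0)
    scale = (x_max - x_min) / (qmax - qmin)
    if scale == 0.0:
        scale = 1.0  # degenerate case: every value is zero
    zero_point = round(qmin - x_min / scale)
    zero_point = max(qmin, min(qmax, zero_point))
    return scale, zero_point


def quantize(values, scale, zero_point, num_bits=8):
    """Map floats to integers: divide by the scale, round, shift, clamp."""
    qmin, qmax = 0, 2 ** num_bits - 1
    return [max(qmin, min(qmax, round(v / scale) + zero_point)) for v in values]


def dequantize(q_values, scale, zero_point):
    """Map integers back to approximate floats."""
    return [scale * (q - zero_point) for q in q_values]


weights = [-1.0, -0.5, 0.0, 0.25, 1.5]  # hypothetical FP32 values
scale, zero_point = quantization_params(min(weights), max(weights))
q = quantize(weights, scale, zero_point)
restored = dequantize(q, scale, zero_point)

for original, code, approx in zip(weights, q, restored):
    print(f"{original:+.4f} -> {code:3d} -> {approx:+.4f}")
```

For this input the scale is about 0.0098 and the zero-point is 102, so each restored value differs from the original by at most half a scale step. That rounding error is the precision given up in exchange for storing one byte per value instead of four.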

Why Quantize a Model?

There are several compelling reasons to quantize machine learning models, particularly for deployment on edge devices:

- Reduced Model Size: Quantized models require less storage space, making them easier to distribute and deploy.

- Faster Inference: Lower-precision arithmetic operations can be executed more quickly on many hardware platforms, resulting in faster model inference times.

- Lower Energy Consumption: Reduced computational requirements translate to lower power usage, which is crucial for battery-powered devices.

- Cost-Effectiveness: With lower memory and compute requirements, less expensive hardware can often be used for deployment.
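To put a rough number on the storage savings, the back-of-the-envelope calculation below compares formats for a model with a hypothetical parameter count. It counts only the raw parameter values and ignores file metadata and the small overhead of storing scales and zero-points.

```python
BYTES_PER_VALUE = {"FP32": 4, "FP16": 2, "INT8": 1}


def model_size_mb(num_parameters, fmt):
    """Approximate size of the raw parameters in megabytes (10^6 bytes)."""
    return num_parameters * BYTES_PER_VALUE[fmt] / 1_000_000


num_parameters = 5_000_000  # hypothetical model, chosen only for illustration

for fmt in BYTES_PER_VALUE:
    print(f"{fmt}: {model_size_mb(num_parameters, fmt):.1f} MB")
# FP32: 20.0 MB
# FP16: 10.0 MB
# INT8: 5.0 MB
```

Going from FP32 to INT8 cuts the parameter storage to roughly a quarter. Speed and energy gains depend on the hardware and are harder to estimate this simply.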

Despite these benefits, quantization may sometimes lead to a minor degradation in model accuracy. Therefore, understanding the trade-offs and employing the appropriate quantization method for the task at hand is essential.

Types of Quantization Techniques

Quantization techniques can be broadly categorized into two types: post-training quantization and quantization-aware training.

Post-Training Quantization

Post-training quantization is applied after the model has been trained. It is a relatively simple and widely used method, particularly because it does not require any modifications to the training process. The steps typically involved include:

- Calibration: The model is run on a subset of data to collect statistics on the activation ranges.

- Conversion: Based on the collected statistics, the floating-point weights and activations are converted to lower-precision values.
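As a rough sketch of the calibration step, the snippet below feeds a small calibration set through a stand-in layer, records the minimum and maximum activation, and derives a scale and zero-point from that range. `MinMaxObserver`, `hidden_layer`, and the random calibration data are hypothetical. Min/max tracking is only one possible choice of statistic; real toolkits may use others.

```python
import random


class MinMaxObserver:
    """Tracks the smallest and largest activation value seen so far."""

    def __init__(self):
        self.min_val = float("inf")
        self.max_val = float("-inf")

    def observe(self, activations):
        self.min_val = min(self.min_val, min(activations))
        self.max_val = max(self.max_val, max(activations))

    def quantization_params(self, num_bits=8):
        """Turn the observed range into an unsigned affine scale/zero-point."""
        qmin, qmax = 0, 2 ** num_bits - 1
        lo, hi = min(self.min_val, 0.0), max(self.max_val, 0.0)
        scale = (hi - lo) / (qmax - qmin) or 1.0
        zero_point = max(qmin, min(qmax, round(qmin - lo / scale)))
        return scale, zero_point


def hidden_layer(sample):
    """Stand-in for a real layer: element-wise scaling followed by ReLU."""
    weights = [0.8, -0.3, 0.5]
    return [max(0.0, w * x) for w, x in zip(weights, sample)]


random.seed(0)
# Hypothetical calibration set: 200 samples with 3 features each.
calibration_set = [[random.uniform(-2.0, 2.0) for _ in range(3)] for _ in range(200)]

observer = MinMaxObserver()
for sample in calibration_set:
    observer.observe(hidden_layer(sample))

scale, zero_point = observer.quantization_params()
print(f"observed range: [{observer.min_val:.3f}, {observer.max_val:.3f}]")
print(f"scale={scale:.5f}, zero_point={zero_point}")
```

Because the stand-in layer ends in a ReLU, its outputs are never negative, so the zero-point comes out as 0 and the whole 8-bit range is spent on positive values. A calibration set that does not resemble real inputs would produce a poor range, which is why the choice of calibration data matters.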

There are several variants of post-training quantization, including:

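- Dynamic Range Quantization: Only the weights are quantized ahead of time. Activations are stored in floating point and, where the runtime supports it, quantized on the fly during inference, so no calibration data is needed.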

- Full Integer Quantization: Both weights and activations are quantized to integer values. This method is often used when the deployment hardware supports efficient integer operations.
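The difference between these two variants can be illustrated with a single dot product. The sketch below is deliberately simplified and makes several assumptions: it uses symmetric signed 8-bit quantization, the weight-only path simply converts the stored integers back to floats before the arithmetic, and the activation scale is taken from the sample itself rather than from calibration. All values are hypothetical.

```python
def symmetric_quantize(values, num_bits=8):
    """Quantize to signed integers in [-127, 127] with a single scale."""
    qmax = 2 ** (num_bits - 1) - 1
    max_abs = max(abs(v) for v in values) or 1.0
    scale = max_abs / qmax
    return [max(-qmax, min(qmax, round(v / scale))) for v in values], scale


weights = [0.423, -1.27, 0.057, 0.881]   # hypothetical trained weights
activations = [1.5, 0.2, -0.7, 2.0]      # hypothetical layer input

reference = sum(w * a for w, a in zip(weights, activations))

# Weight-only path: weights are stored as int8, converted back to float,
# and the arithmetic itself stays in floating point.
q_w, w_scale = symmetric_quantize(weights)
weight_only = sum((qw * w_scale) * a for qw, a in zip(q_w, activations))

# Full integer path: activations are quantized too, the multiply-accumulate
# runs entirely on integers, and one rescale at the end recovers a float.
q_a, a_scale = symmetric_quantize(activations)
int_accumulator = sum(qw * qa for qw, qa in zip(q_w, q_a))
full_integer = int_accumulator * (w_scale * a_scale)

print(f"reference    : {reference:.4f}")
print(f"weight-only  : {weight_only:.4f}")
print(f"full integer : {full_integer:.4f}")
```

The full integer path adds a second source of rounding error, but its inner loop contains only integer multiplications and additions, which is what lets integer-only hardware run it efficiently.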

Quantization-Aware Training

Quantization-aware training (QAT) integrates quantization into the training process itself. During QAT, the effects of lower precision are simulated in the network's computations while the weights are still stored in a high-precision format. This process allows the model to learn how to cope with the reduced precision, often leading to better accuracy after quantization compared to post-training methods.

The QAT process involves:

- Forward Pass Simulation: During the training’s forward pass, quantization effects are simulated.

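- Backward Pass Approximation: Rounding has a zero gradient almost everywhere, so gradients are typically passed through the simulated quantization step as if it were the identity (the straight-through estimator). This lets the high-precision weights keep learning despite the simulated quantization noise.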

- Fine-Tuning: The model is fine-tuned in this lower-precision environment, resulting in a model that is more robust when actually quantized.

Quantization-aware training is particularly beneficial when deploying models in critical applications where even small degradations in accuracy are unacceptable.
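A toy version of this loop fits in a few lines. The sketch below trains a single weight on a made-up regression task, rounding the weight to a coarse grid in the forward pass and using the straight-through estimator (a commonly used approximation, assumed here) in the backward pass. The grid step, learning rate, and data are all hypothetical.

```python
def fake_quantize(w, scale):
    """Round to the nearest grid point, but keep the result as a float."""
    return round(w / scale) * scale


# Hypothetical task: learn y = 0.4 * x, where 0.4 lies on the quantization grid.
data = [(x, 0.4 * x) for x in (-2.0, -1.0, 0.5, 1.0, 2.0)]

scale = 0.1           # grid step of the simulated low-precision weight
w = 0.0               # latent full-precision weight that training updates
learning_rate = 0.05

for epoch in range(20):
    for x, y in data:
        w_q = fake_quantize(w, scale)   # forward pass sees the quantized weight
        error = w_q * x - y
        grad_w_q = 2 * error * x        # gradient of squared error w.r.t. w_q
        # Straight-through estimator: treat d(w_q)/d(w) as 1, so the gradient
        # computed for the quantized weight is applied to the latent weight.
        w -= learning_rate * grad_w_q

final_weight = fake_quantize(w, scale)
print(f"latent weight: {w:.4f}, quantized weight: {final_weight:.1f}")
```

The true gradient of the rounding step would be zero, and the latent weight would never move. With the straight-through approximation the latent weight drifts until its rounded value fits the data, and the rounded value is what would be deployed.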

Examples and Simple Explanations

Let’s walk through two simple examples that illustrate the concepts of post-training quantization and quantization-aware training.

Example 1: Simple Quantization of a Neural Network

Imagine you have a simple neural network that has been trained to classify handwritten digits (for example, from the MNIST dataset). This model was originally trained with 32-bit floating-point precision. To deploy it on a smartphone, you decide to use post-training quantization to convert the model to 8-bit integers.
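As a rough sketch of what such a conversion involves, the snippet below quantizes the weights of a single dense layer sized for MNIST (784 input pixels, 10 classes) and compares storage and outputs before and after. It is only an approximation of the scenario: the "trained" weights and the input image are random stand-ins, only the weights are quantized, and a real deployment would use a framework's conversion tool rather than hand-written code.

```python
import random
from array import array

random.seed(0)
NUM_PIXELS, NUM_CLASSES = 784, 10  # 28x28 images, digits 0-9

# Hypothetical "trained" weights: random values stand in for a real model.
weights_fp32 = array(
    "f", (random.gauss(0.0, 0.05) for _ in range(NUM_PIXELS * NUM_CLASSES))
)

# Symmetric per-tensor quantization to signed 8-bit integers.
scale = max(abs(w) for w in weights_fp32) / 127
weights_int8 = array("b", (round(w / scale) for w in weights_fp32))


def logits(image, weights, weight_scale=1.0):
    """One dense layer: a 784-value image times a 784x10 weight matrix."""
    return [
        weight_scale
        * sum(image[p] * weights[p * NUM_CLASSES + c] for p in range(NUM_PIXELS))
        for c in range(NUM_CLASSES)
    ]


image = [random.random() for _ in range(NUM_PIXELS)]  # stand-in for a digit
float_logits = logits(image, weights_fp32)
int8_logits = logits(image, weights_int8, scale)

fp32_bytes = len(weights_fp32) * weights_fp32.itemsize
int8_bytes = len(weights_int8) * weights_int8.itemsize
max_diff = max(abs(a - b) for a, b in zip(float_logits, int8_logits))

print(f"FP32 weights: {fp32_bytes} bytes, INT8 weights: {int8_bytes} bytes")
print(f"largest change in any output score: {max_diff:.5f}")
```

The weight storage drops from 31,360 bytes to 7,840 bytes, while each output score moves only slightly. Whether the predicted digit stays the same on real data is something that has to be measured on a validation set.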
