I. Introduction

Neural networks have become the cornerstone of modern artificial intelligence, powering systems for image recognition, speech recognition, machine translation, and much more. Loosely inspired by the biological brain, these computational models have evolved into powerful tools capable of learning complex patterns from data and making predictions. In this guide, we will cover the fundamentals of neural networks, exploring their anatomy, how they operate, and their main types. By the end, you’ll have a solid understanding of neural networks and their applications in diverse domains.

A. Introduction to Neural Networks

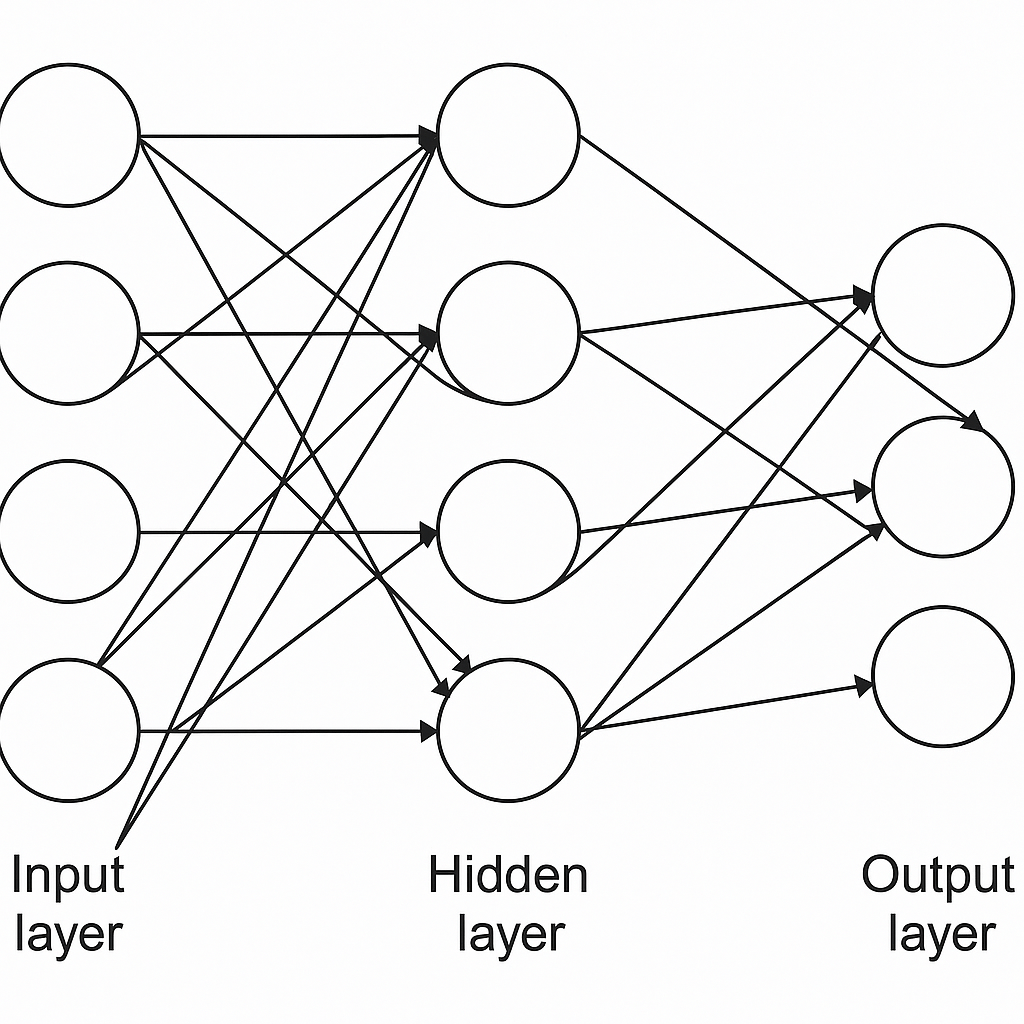

Definition and Concept: At its core, a neural network is a computational system inspired by the structure and function of the human brain. It comprises interconnected nodes, or neurons, organized into layers. These neurons process input data, perform computations, and generate output signals, mimicking the way biological neurons transmit information through synapses.

Motivation Behind Mimicking the Human Brain: The human brain is a marvel of nature, capable of learning from experience, recognizing patterns, and making decisions in complex environments. By borrowing simplified ideas from the brain’s neural architecture, neural networks aim to give machines some of these abilities, enabling them to perform tasks such as image recognition, natural language processing, and autonomous driving.

Applications Across Various Industries: Neural networks have found applications in virtually every sector, from healthcare and finance to entertainment and transportation. They power recommendation systems, diagnose diseases from medical images, analyze financial markets, and even compose music and generate art. The versatility and adaptability of neural networks make them indispensable tools in the era of artificial intelligence.

II. Fundamentals of Neural Networks

A. Anatomy of a Neuron

To truly grasp the inner workings of neural networks, it’s essential to understand the fundamental building block: the neuron.

Structure and Function of Biological Neurons: At its most basic level, a neuron consists of three main parts: the dendrites, the cell body (soma), and the axon. Dendrites receive signals from other neurons or sensory receptors, and the cell body integrates these signals. If the combined input is strong enough to cross a threshold, the neuron fires: an electrical impulse travels down the axon, which connects to other neurons through synapses and passes the signal onward.

Comparison with Artificial Neurons: Artificial neurons, also called nodes or units (the perceptron is an early, classic example), emulate the structure and function of biological neurons in simplified form. Each artificial neuron receives input signals, computes a weighted sum of these inputs (usually plus a bias term), applies an activation function to determine its output, and transmits that output to the neurons in the next layer. While far simpler than their biological counterparts, artificial neurons form the foundation of neural network architectures.
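The computation an artificial neuron performs fits in a few lines. The sketch below is a minimal Python/NumPy illustration, assuming a sigmoid activation and a bias term `b`; the input values, weights, and bias are made up for the example.

```python
import numpy as np

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def neuron(x, w, b):
    """One artificial neuron: weighted sum of inputs plus bias, then an activation."""
    z = np.dot(w, x) + b      # integrate the weighted inputs (the "cell body" step)
    return sigmoid(z)         # the activation determines the output signal

# Hypothetical inputs, weights, and bias chosen for illustration
x = np.array([0.5, -1.0, 2.0])
w = np.array([0.4, 0.3, -0.2])
b = 0.1
print(neuron(x, w, b))  # about 0.401, since the weighted sum is -0.4
```

Changing any weight changes how strongly the corresponding input pushes the output up or down; learning, covered later in a typical course, is the process of adjusting those weights automatically.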

Role of Dendrites, Cell Body, Axon, and Synapses: By analogy, dendrites correspond to a neuron’s input connections, which receive data from external sources or from the previous layer of neurons. Synapses correspond to the weights on those connections: each weight scales how strongly a given input influences the neuron. The cell body corresponds to the processing step, which sums the weighted inputs and passes the result through an activation function. The axon serves as the output connection, transmitting the processed signal to neurons in subsequent layers.

Understanding the structure and function of neurons lays the groundwork for comprehending how neural networks process information and learn from data. In the following section, we’ll explore the feedforward process, the mechanism by which neural networks propagate input data through their layers to produce output predictions.

B. The Feedforward Process

In neural networks, the feedforward process is the mechanism by which input data is propagated through the network to produce an output prediction. This process involves several key steps:

1. Overview of Feedforward Propagation:

- Feedforward propagation begins with the input layer, where raw data is fed into the network.

- Each neuron in the input layer represents a feature or attribute of the input data.

- The input signals are transmitted through the network via connections between neurons, with each connection associated with a weight parameter.

2. Role of Activation Functions:

- As the input signals propagate through the network, they undergo transformations at each neuron.

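- Activation functions introduce non-linearity to the network, enabling it to learn complex patterns and relationships in the data. Without them, any stack of layers would reduce to a single linear transformation, no matter how deep the network.

- Common activation functions include sigmoid, which squashes values into the range (0, 1); tanh, which squashes them into (-1, 1); ReLU (Rectified Linear Unit), which outputs max(0, x); and softmax, which is typically used in the output layer of a classifier to turn a vector of scores into probabilities that sum to 1.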

3. Illustration with a Simple Neural Network Example:

- Consider a simple neural network with an input layer, one or more hidden layers, and an output layer.

- Each neuron in the hidden layers computes a weighted sum of its input signals (plus a bias), then applies an activation function to produce its output.

- The output layer takes the outputs of the last hidden layer, applies its own weights and activation function, and produces the final prediction.
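The activation functions named above can be sketched in NumPy as follows. This is an illustrative implementation rather than any particular library’s; the softmax subtracts the maximum score first, a common trick to avoid overflow that does not change the result. The last lines, using hypothetical random weights, check why non-linearity matters: two stacked layers with no activation between them compute exactly what a single linear layer could.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))   # output in (0, 1)

def tanh(z):
    return np.tanh(z)                 # output in (-1, 1)

def relu(z):
    return np.maximum(0.0, z)         # 0 for negative inputs, identity otherwise

def softmax(z):
    e = np.exp(z - np.max(z))         # shift by the max for numerical stability
    return e / e.sum()                # non-negative values that sum to 1

z = np.array([-2.0, 0.0, 3.0])
print(relu(z))            # [0. 0. 3.]
print(softmax(z).sum())   # approximately 1.0

# Without an activation, two linear layers collapse into one linear layer
rng = np.random.default_rng(0)
W1 = rng.normal(size=(4, 3))   # hypothetical weights, layer 1
W2 = rng.normal(size=(2, 4))   # hypothetical weights, layer 2
x = rng.normal(size=3)
two_layers = W2 @ (W1 @ x)
one_layer = (W2 @ W1) @ x      # a single combined weight matrix
print(np.allclose(two_layers, one_layer))  # True
```

Inserting `relu` between the two layers, as in `W2 @ relu(W1 @ x)`, breaks this equivalence, which is what lets deeper networks represent more than a linear model can.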

Example: Let’s say we have a neural network tasked with classifying images of handwritten digits (0-9). The input layer has one neuron per pixel of the input image. As the data propagates through the network, the hidden layers may learn to respond to features such as edges, strokes, and shapes. Finally, the output layer produces a probability distribution over the ten digit classes (typically via softmax), and the class with the highest probability is the network’s prediction for the input image.
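The full feedforward pass for the digit example can be sketched as below. The layer sizes (784 inputs for an assumed 28 x 28 image, 64 hidden units, 10 outputs) and the choice of ReLU in the hidden layer are assumptions, and the weights are random rather than trained, so the predicted digit is meaningless; the point is the flow of data from one layer to the next.

```python
import numpy as np

rng = np.random.default_rng(0)

def relu(z):
    return np.maximum(0.0, z)

def softmax(z):
    e = np.exp(z - np.max(z))      # shift for numerical stability
    return e / e.sum()

# Assumed sizes: 28x28 = 784 pixel inputs, 64 hidden units, 10 digit classes
n_in, n_hidden, n_out = 784, 64, 10

# Random, untrained parameters; a real network learns these from labeled images
W1 = rng.normal(0.0, 0.05, size=(n_hidden, n_in))
b1 = np.zeros(n_hidden)
W2 = rng.normal(0.0, 0.05, size=(n_out, n_hidden))
b2 = np.zeros(n_out)

def forward(x):
    """Propagate one flattened image through the network."""
    h = relu(W1 @ x + b1)          # hidden layer: weighted sums, then ReLU
    return softmax(W2 @ h + b2)    # output layer: probabilities over digits 0-9

x = rng.random(n_in)               # stand-in for a flattened image, pixels in [0, 1)
probs = forward(x)
print(probs.round(3))
print("predicted digit:", int(probs.argmax()))
```

Each layer is just a matrix-vector product, a bias, and an activation, which is why feedforward networks map so naturally onto linear-algebra hardware.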

Understanding the feedforward process is crucial for grasping how neural networks transform input data into meaningful predictions. In the next section, we’ll explore the different types of neural networks and their unique architectures and applications.

III. Types of Neural Networks

Neural networks come in various shapes and sizes, each tailored to specific tasks and domains. In this section, we’ll explore some of the most common types of neural networks and their unique architectures and applications.

A. Feedforward Neural Networks (FNNs)

Basic Architecture and Operation:

- Feedforward neural networks are the simplest form of neural networks, consisting of input, hidden, and output layers.

- Information flows in one direction, from the input layer through the hidden layers to the output layer, without any feedback loops.

- In the most common form, the multilayer perceptron (MLP), each neuron is connected to every neuron in the next layer, forming fully connected (dense) layers.

Applications and Examples:

- Feedforward neural networks are widely used for tasks such as classification, regression, and pattern recognition.

- Examples include single-layer perceptrons for binary classification and multilayer perceptrons (MLPs) for more complex tasks like image recognition and natural language processing.
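As an illustration of a single-layer perceptron for binary classification, the sketch below trains one on the logical AND function using the classic perceptron learning rule. The dataset, zero initialization, learning rate of 0.1, and 20-epoch cap are choices made for this example; AND is used because it is linearly separable, which is the case where the perceptron rule is guaranteed to converge.

```python
import numpy as np

# Truth table for logical AND: a linearly separable binary task
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([0, 0, 0, 1])

w = np.zeros(2)
b = 0.0
lr = 0.1  # learning rate (assumed value)

def predict(x):
    # Step activation: output 1 only if the weighted sum is above zero
    return int(np.dot(w, x) + b > 0)

# Perceptron learning rule: nudge the weights by the error on each example
for epoch in range(20):
    errors = 0
    for xi, target in zip(X, y):
        update = lr * (target - predict(xi))
        w += update * xi
        b += update
        errors += int(update != 0.0)
    if errors == 0:   # every example classified correctly, so stop
        break

print([predict(xi) for xi in X])  # [0, 0, 0, 1]
```

The same rule fails on XOR, which is not linearly separable; that limitation is a classic motivation for adding hidden layers, as in the MLP.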

B. Recurrent Neural Networks (RNNs)

Introduction to Recurrent Connections:

- Unlike feedforward networks, recurrent neural networks incorporate feedback (recurrent) connections: a layer’s output at one time step, its hidden state, is fed back in at the next step, allowing information to persist over time.

- RNNs have loops within their architecture, enabling them to process sequential data such as time series, text, and speech.
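The loop in an RNN is easiest to see when it is unrolled over time. Below is a minimal sketch of a simple (Elman-style) recurrent layer in NumPy, assuming a tanh activation, 3 input features, 4 hidden units, and random untrained weights. The same weights are reused at every time step, and the hidden state `h` carries information forward.

```python
import numpy as np

rng = np.random.default_rng(42)

# Assumed sizes for illustration: 3 input features per step, 4 hidden units
n_in, n_hidden = 3, 4
W_x = rng.normal(0, 0.5, size=(n_hidden, n_in))      # input-to-hidden weights
W_h = rng.normal(0, 0.5, size=(n_hidden, n_hidden))  # hidden-to-hidden weights (the loop)
b = np.zeros(n_hidden)

def rnn_forward(sequence):
    """Run a simple RNN over a sequence and return the hidden state at every step."""
    h = np.zeros(n_hidden)            # the initial state holds no information
    states = []
    for x_t in sequence:
        # The new state depends on the current input AND the previous state
        h = np.tanh(W_x @ x_t + W_h @ h + b)
        states.append(h)
    return np.stack(states)

sequence = rng.normal(size=(5, n_in))  # 5 time steps of made-up data
states = rnn_forward(sequence)
print(states.shape)  # (5, 4)
```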

Applications in Sequential Data Processing:

- RNNs excel at tasks requiring temporal dependencies, such as speech recognition, language translation, and sentiment analysis.

- Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) are specialized RNN architectures designed to address the vanishing gradient problem and capture long-term dependencies.
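To show how gating works, here is a sketch of one GRU time step in NumPy. It follows one common formulation of the GRU (conventions differ between papers and libraries, for example in which term the update gate multiplies), omits bias terms for brevity, and uses random, untrained weights with assumed sizes. The additive blend in the last line of `gru_step` lets the state pass through many steps largely unchanged when the update gate stays near zero, which helps information and gradients survive over long sequences.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

rng = np.random.default_rng(1)
n_in, n_hidden = 3, 4   # assumed sizes for illustration

def init(rows, cols):
    return rng.normal(0, 0.5, size=(rows, cols))

# One input matrix (W_*) and one recurrent matrix (U_*) per gate; random, untrained
W_z, U_z = init(n_hidden, n_in), init(n_hidden, n_hidden)
W_r, U_r = init(n_hidden, n_in), init(n_hidden, n_hidden)
W_c, U_c = init(n_hidden, n_in), init(n_hidden, n_hidden)

def gru_step(x_t, h_prev):
    z = sigmoid(W_z @ x_t + U_z @ h_prev)             # update gate: how much to rewrite
    r = sigmoid(W_r @ x_t + U_r @ h_prev)             # reset gate: how much past to use
    h_cand = np.tanh(W_c @ x_t + U_c @ (r * h_prev))  # candidate new state
    # Blend old and candidate states; when z is near 0 the old state passes through
    return (1 - z) * h_prev + z * h_cand

h = np.zeros(n_hidden)
for x_t in rng.normal(size=(6, n_in)):   # 6 time steps of made-up data
    h = gru_step(x_t, h)
print(h)
```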

C. Convolutional Neural Networks (CNNs)

Architecture Optimized for Image Data:

- Convolutional neural networks are specifically designed for processing grid-like data, such as images.

- They leverage convolutional layers to extract spatial hierarchies of features and pooling layers to reduce spatial dimensions while preserving important information.

Role of Convolutional and Pooling Layers:

- Convolutional layers slide small filters (kernels) across the input image, or across the feature maps of the previous layer, detecting features like edges, textures, and shapes.

- Pooling layers downsample feature maps, reducing computational complexity and improving translation invariance.
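Both layer types can be illustrated on a toy image. The sketch below assumes a single-channel image, a hand-picked vertical-edge kernel (a trained CNN learns its own kernels), no padding, a stride of 1, and 2x2 max pooling. As in most deep learning libraries, the "convolution" is implemented as cross-correlation, that is, the kernel is not flipped.

```python
import numpy as np

def conv2d(image, kernel):
    """'Valid' 2D convolution (stride 1, no padding), computed as cross-correlation."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Multiply the kernel element-wise with the patch under it and sum
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def max_pool(fmap, size=2):
    """Non-overlapping max pooling; rows/columns that don't fill a window are dropped."""
    h, w = fmap.shape[0] // size, fmap.shape[1] // size
    trimmed = fmap[:h * size, :w * size]
    return trimmed.reshape(h, size, w, size).max(axis=(1, 3))

# Toy 6x6 image: dark left half (0), bright right half (1)
image = np.zeros((6, 6))
image[:, 3:] = 1.0

# Hand-picked vertical-edge kernel (hypothetical; not learned)
kernel = np.array([[-1.0, 0.0, 1.0],
                   [-1.0, 0.0, 1.0],
                   [-1.0, 0.0, 1.0]])

fmap = conv2d(image, kernel)   # 4x4 feature map
pooled = max_pool(fmap)        # 2x2 summary of the strongest responses
print(fmap)
print(pooled)
```

Each row of the feature map comes out as [0, 3, 3, 0]: the filter responds only where its window straddles the dark-to-bright boundary, and pooling keeps the strongest response in each 2x2 region, so the exact column of the edge matters less after pooling.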
