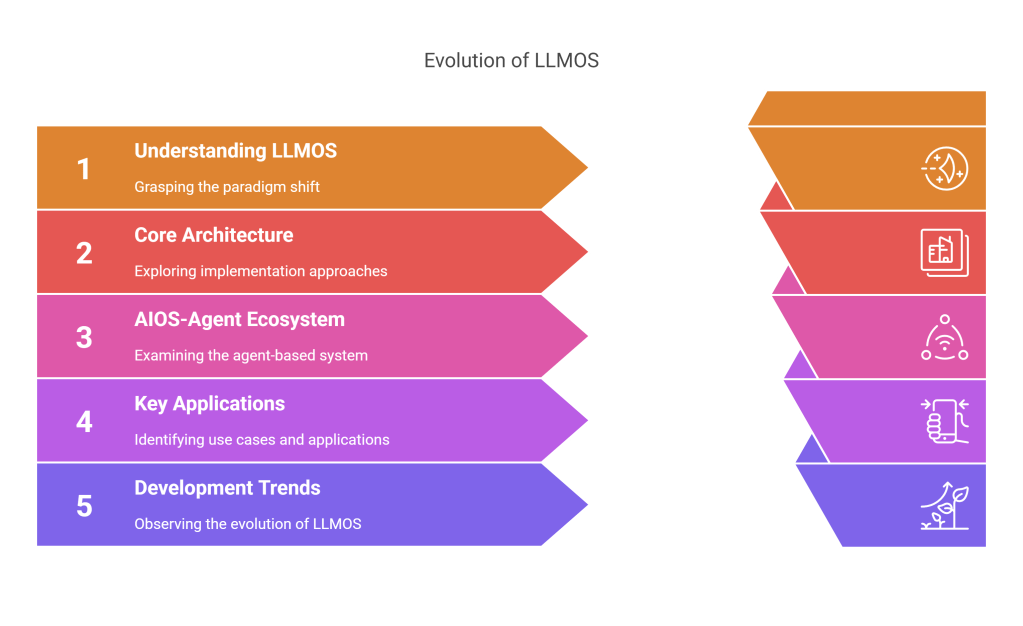

Table of Contents

- Introduction: The Dawn of Intelligent Computing

- Understanding LLMOS: A Paradigm Shift

- Core Architecture and Implementation Approaches

- The AIOS-Agent Ecosystem

- Key Applications and Use Cases

- Development Trends in LLMOS

- Technical Challenges and Solutions

- Essential Tools and Frameworks

- The Future Landscape

- Conclusion

Introduction: The Dawn of Intelligent Computing {#introduction}

Computing is at a crossroads. Integrating Large Language Models (LLMs) into operating systems, an approach we call the Large Language Model Operating System (LLMOS), may be the most significant shift since the introduction of graphical user interfaces. It promises to change how we interact with computers, bringing us closer to a natural, conversational relationship with our machines.

Andrej Karpathy, co-founder of OpenAI, eloquently captures this shift: “We’re entering a new computing paradigm with large language models acting like CPUs, using tokens instead of bytes, and having a context window instead of RAM.” This perspective highlights not just a technological evolution, but a complete reimagining of how operating systems function at their most basic level.

The Evolution of Human-Computer Interaction

To appreciate the revolutionary nature of LLMOS, we must first understand the evolution of human-computer interaction:

- Command-Line Interfaces (CLI): The earliest form of interaction requiring specific command syntax

- Graphical User Interfaces (GUI): Introduction of visual elements and mouse interactions

- Touch-Based Interfaces: Natural gestures and direct manipulation

- Voice Assistants: Basic natural language processing

- LLMOS: True conversational computing with context-aware intelligence

Each stage was a step toward more natural interaction. LLMOS goes further: it aims to interpret the user's intent directly from natural language, rather than from predefined commands, clicks, or gestures.

Understanding LLMOS: A Paradigm Shift {#understanding-llmos}

LLMOS represents the convergence of artificial intelligence and operating system design, creating an environment where intelligence is embedded into the core of system operations. This is not merely an enhancement of existing systems but a fundamental rearchitecting of how operating systems function.

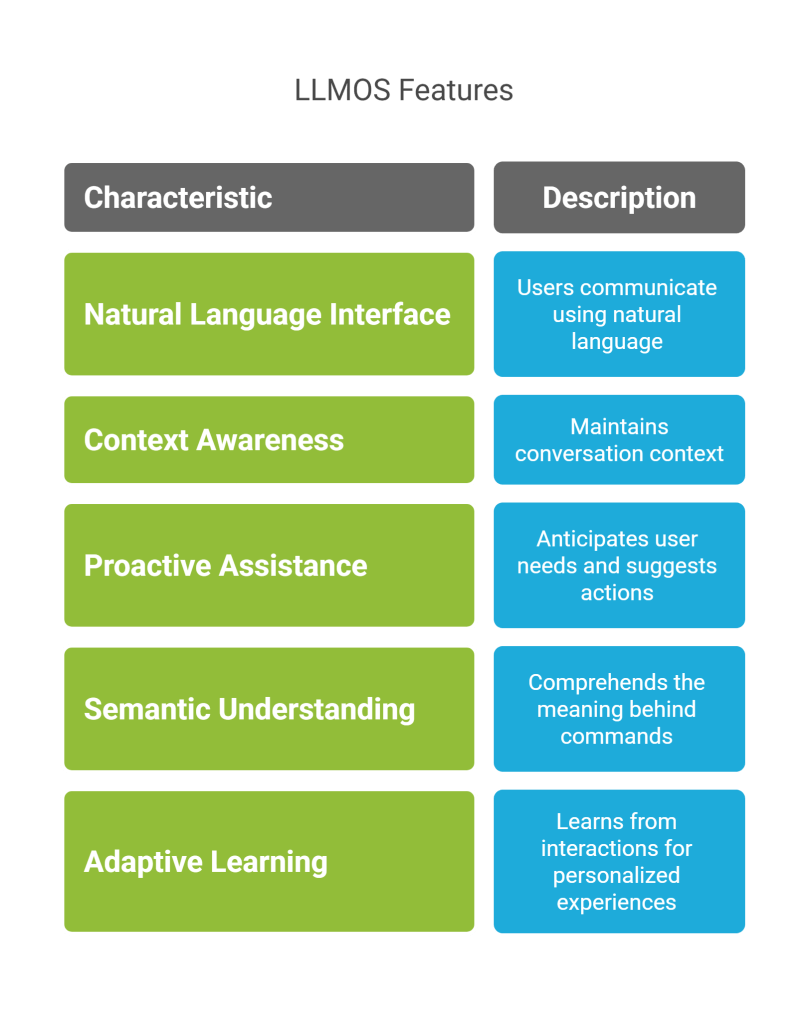

Key Principles of LLMOS

- Natural Language Processing as the Primary Interface: Users communicate with their systems using natural, conversational language rather than specific commands or GUI interactions.

- Context Awareness: The system maintains conversation context, understanding the relationship between commands and previous interactions.

- Proactive Assistance: Rather than merely responding to commands, LLMOS anticipates user needs and suggests actions.

- Semantic Understanding: The system comprehends the meaning behind commands rather than just processing syntax.

- Adaptive Learning: The system learns from user interactions to provide increasingly personalized experiences.
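The first two principles can be made concrete with a toy sketch. Everything here is an assumption for illustration: the `Session` class and its `resolve` method are hypothetical names, and a simple keyword matcher stands in for the LLM that a real system would call. The point is only to show how a follow-up request such as "delete it" can be resolved against earlier turns.

```python
import re
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class Session:
    """Toy conversational session (hypothetical; not an actual LLMOS API)."""

    last_target: Optional[str] = None
    history: List[Tuple[str, Optional[str]]] = field(default_factory=list)

    def resolve(self, utterance: str) -> Tuple[str, Optional[str]]:
        """Map an utterance to (action, target), using prior turns as context."""
        text = utterance.lower()

        # Keyword matching stands in for the model's intent classification.
        if "open" in text or "show" in text:
            action = "open"
        elif "delete" in text or "remove" in text:
            action = "delete"
        else:
            action = "unknown"

        # Prefer an explicit file name; otherwise fall back to the most
        # recently mentioned target (this is the context-awareness step).
        match = re.search(r"[\w-]+\.\w+", utterance)
        target = match.group(0) if match else self.last_target

        self.last_target = target
        self.history.append((action, target))
        return action, target


session = Session()
first = session.resolve("Please open report.txt")
second = session.resolve("Now delete it")
print(first, second)
```

The second request never names a file. The session fills it in from the previous turn, which is the behavior the Context Awareness principle describes.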

Technical Architecture

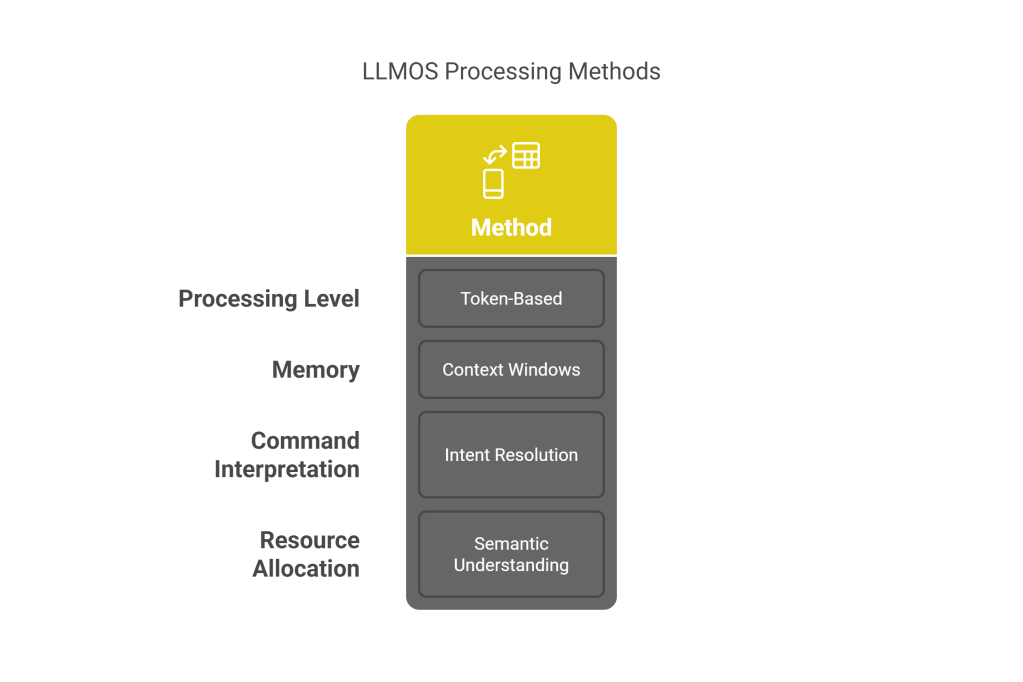

LLMOS operates on several fundamental principles that distinguish it from traditional operating systems:

- Token-Based Processing: Following Karpathy's analogy, the LLM at the core works on language tokens rather than bytes, which is what lets the system operate on meaning instead of raw syntax.

- Context Windows: The context window plays the role that RAM plays in a traditional system. It is the working memory that holds conversation history and state.

- Intent Resolution: Commands are interpreted based on intended outcomes rather than strict syntax.

- Resource Management: System resources are allocated based on semantic understanding of task priority.
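To illustrate the context-window idea from the list above, here is a minimal sketch of a fixed-budget working memory. It rests on stated assumptions: `ContextWindow` and `count_tokens` are hypothetical names, whitespace splitting is a crude stand-in for a real tokenizer, and oldest-first eviction is just one simple policy among several a real system might use.

```python
from collections import deque


def count_tokens(text: str) -> int:
    """Crude stand-in for a tokenizer: one token per whitespace-separated word."""
    return len(text.split())


class ContextWindow:
    """Fixed-budget working memory that evicts the oldest turns first."""

    def __init__(self, max_tokens: int) -> None:
        self.max_tokens = max_tokens
        self.turns = deque()
        self.used = 0

    def add(self, turn: str) -> None:
        """Append a turn, then evict old turns until the budget is respected."""
        self.turns.append(turn)
        self.used += count_tokens(turn)
        # Always keep the most recent turn, even if it alone exceeds the budget.
        while self.used > self.max_tokens and len(self.turns) > 1:
            evicted = self.turns.popleft()
            self.used -= count_tokens(evicted)

    def render(self) -> str:
        """Return the text that would be handed to the model as context."""
        return "\n".join(self.turns)


window = ContextWindow(max_tokens=6)
window.add("open the quarterly report")
window.add("summarize it")
window.add("email the summary")
print(window.render())
```

Once the budget is exceeded, the earliest turn is dropped, much as a traditional system reclaims memory under pressure. The difference is that what gets lost here is conversational state, so a later "it" may no longer have anything to refer to.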

Core Architecture and Implementation Approaches {#core-architecture}
