Introduction: The Expanding Cosmos of AI

Artificial Intelligence represents one of humanity’s most transformative technological frontiers. What began as a theoretical concept has now evolved into a multi-layered ecosystem of technologies that are reshaping industries, societies, and our daily lives. The AI Universe encompasses a vast array of interconnected disciplines, methodologies, and applications that continue to expand at an unprecedented pace.

In this comprehensive exploration, we’ll journey through the concentric circles of AI development—from the broad concept of Artificial Intelligence to the specialized realms of Machine Learning, Neural Networks, Deep Learning, and finally to the cutting-edge domain of Generative AI. Each layer builds upon the previous one, creating an increasingly sophisticated framework for machines to perceive, learn, reason, and create.

Whether you’re a business leader seeking to understand how AI can transform your operations, a developer looking to deepen your technical knowledge, or simply a curious mind fascinated by the possibilities of intelligent machines, this guide will illuminate the complex and fascinating world of AI technologies.

The Outer Sphere: Artificial Intelligence

Artificial Intelligence, in its broadest sense, refers to the simulation of human intelligence processes by machines. The concept traces back to ancient myths of mechanical beings endowed with consciousness, but the formal field of AI research began in the mid-20th century, with the fundamental question: Can machines think?

Natural Language Processing (NLP)

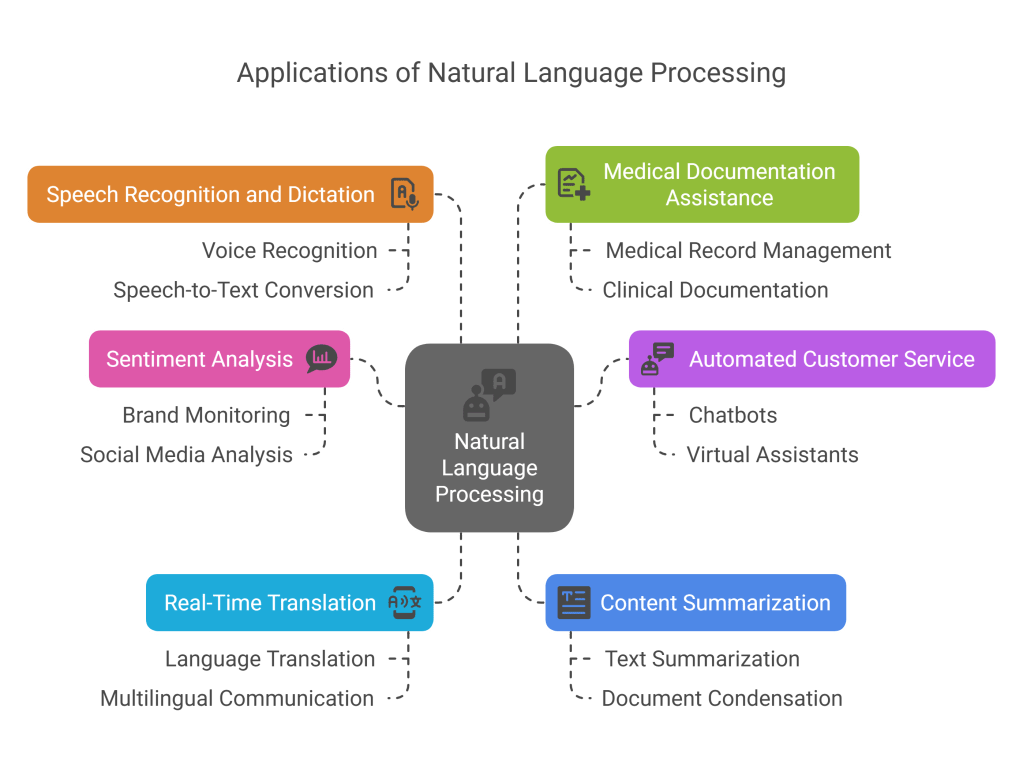

Natural Language Processing sits at the intersection of linguistics, computer science, and AI. It enables machines to understand, interpret, and generate human language in useful ways. From simple spell-checkers to sophisticated chatbots and voice assistants, NLP applications have become ubiquitous in our digital landscape.

Modern NLP systems leverage machine learning and deep learning to analyze text and speech data, extract meaning, translate languages, and generate human-like responses. The ability of systems to understand context, sentiment, and even subtle nuances in communication continues to improve dramatically, driving advancements in areas such as:

- Sentiment analysis for brand monitoring

- Automated customer service

- Real-time translation

- Content summarization

- Speech recognition and dictation

- Medical documentation assistance

The development of transformer models like BERT, GPT, and their successors has revolutionized NLP, enabling unprecedented capabilities in language understanding and generation.

Computer Vision

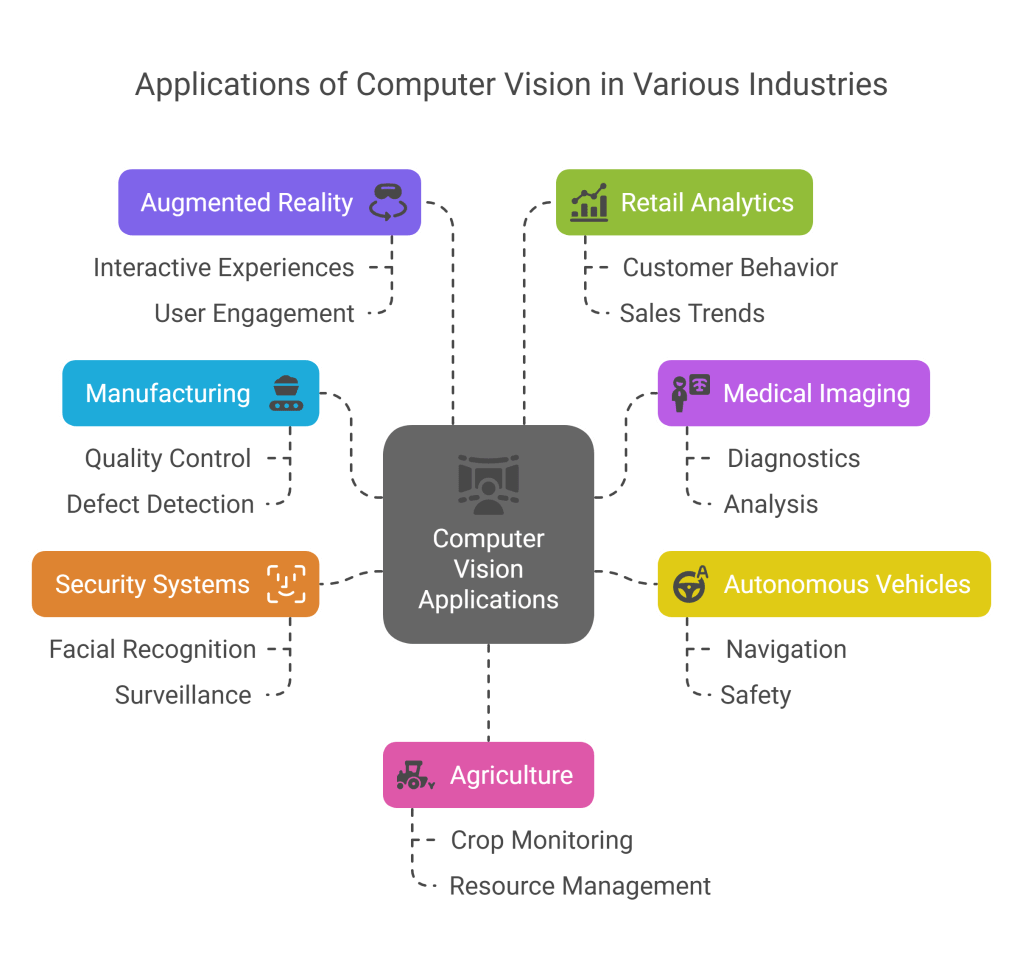

Computer Vision enables machines to “see” and interpret the visual world, processing and analyzing digital images or videos to extract meaningful information. This field combines camera hardware with sophisticated algorithms to recognize patterns, objects, faces, scenes, and activities.

Applications of computer vision span numerous industries:

- Manufacturing quality control and defect detection

- Medical imaging diagnostics

- Autonomous vehicles and drones

- Facial recognition security systems

- Augmented reality experiences

- Retail analytics for customer behavior

- Agricultural crop monitoring

Convolutional Neural Networks (CNNs) have been particularly instrumental in advancing computer vision capabilities, enabling machines to match human-level accuracy on some object-recognition benchmarks.

Expert Systems

Expert systems attempt to emulate the decision-making ability of a human expert in a specific domain. Unlike general AI systems, expert systems focus on solving complex problems within narrow fields of expertise, such as medical diagnosis, financial planning, or engineering troubleshooting.

These systems typically consist of two main components:

- A knowledge base containing domain-specific information

- An inference engine that applies rules to the knowledge base to solve problems

Though somewhat overshadowed by newer machine learning approaches, expert systems remain valuable in high-stakes environments where explainable, rule-based reasoning is essential.
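To make the knowledge base / inference engine split concrete, here is a minimal forward-chaining sketch in Python. The troubleshooting rules and fact names are hypothetical, and real expert systems use far richer rule languages (certainty factors, variables, conflict resolution). The inference engine simply keeps firing any rule whose premises are all known until nothing new can be derived.

```python
# Knowledge base (hypothetical): each rule maps a set of premises to one conclusion.
RULES = [
    ({"no_display", "fan_running"}, "suspect_gpu"),
    ({"suspect_gpu", "beeps"}, "reseat_gpu"),
    ({"no_power"}, "check_psu"),
]

def forward_chain(facts, rules):
    """Inference engine: repeatedly fire rules whose premises all hold."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if premises <= known and conclusion not in known:
                known.add(conclusion)  # fire the rule and record the new fact
                changed = True
    return known

observed = {"no_display", "fan_running", "beeps"}
print(sorted(forward_chain(observed, RULES) - observed))  # → ['reseat_gpu', 'suspect_gpu']
```

Because every derived conclusion can be traced back to the rules that produced it, this style of reasoning is easy to explain, which is the property the paragraph above highlights.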

Robotics

Robotics combines mechanical engineering, electronics, and AI to create machines capable of performing tasks in the physical world. Modern robotics ranges from industrial automation to humanoid companions, with applications spanning:

- Manufacturing assembly lines

- Warehouse fulfillment

- Healthcare assistance and surgery

- Space exploration

- Disaster response

- Agricultural harvesting

- Domestic help

The integration of AI with robotics has led to increasingly autonomous systems that can perceive their environment, make decisions, and adapt to changing conditions.

Automated Reasoning

Automated reasoning focuses on enabling computers to draw conclusions from available knowledge. This field encompasses:

- Logical deduction and theorem proving

- Planning and scheduling optimization

- Decision support systems

- Constraint satisfaction problems

- Probabilistic reasoning

These capabilities are essential for applications requiring logical inference and structured problem-solving, from legal analysis to complex logistics planning.
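Constraint satisfaction is one of the easiest of these ideas to show in code. The sketch below, assuming a made-up four-region map and three colors, uses plain backtracking search: assign a variable, check the constraints, and undo the choice when a dead end is reached. Practical solvers add heuristics and constraint propagation on top of this.

```python
# Hypothetical map-coloring problem: adjacent regions must get different colors.
NEIGHBORS = {
    "A": ["B", "C"],
    "B": ["A", "C", "D"],
    "C": ["A", "B", "D"],
    "D": ["B", "C"],
}
COLORS = ["red", "green", "blue"]

def consistent(region, color, assignment):
    # A color is allowed if no already-colored neighbor uses it.
    return all(assignment.get(n) != color for n in NEIGHBORS[region])

def backtrack(assignment):
    if len(assignment) == len(NEIGHBORS):
        return assignment  # all variables assigned without violating a constraint
    region = next(r for r in NEIGHBORS if r not in assignment)
    for color in COLORS:
        if consistent(region, color, assignment):
            result = backtrack({**assignment, region: color})
            if result is not None:
                return result
    return None  # no color fits here; the caller tries its next option

solution = backtrack({})
print(solution)  # → {'A': 'red', 'B': 'green', 'C': 'blue', 'D': 'red'}
```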

Fuzzy Logic

Fuzzy logic departs from classical binary logic (true/false) to handle the concept of partial truth. By allowing values between completely true and completely false, fuzzy logic provides a framework for reasoning about imprecise or uncertain information.

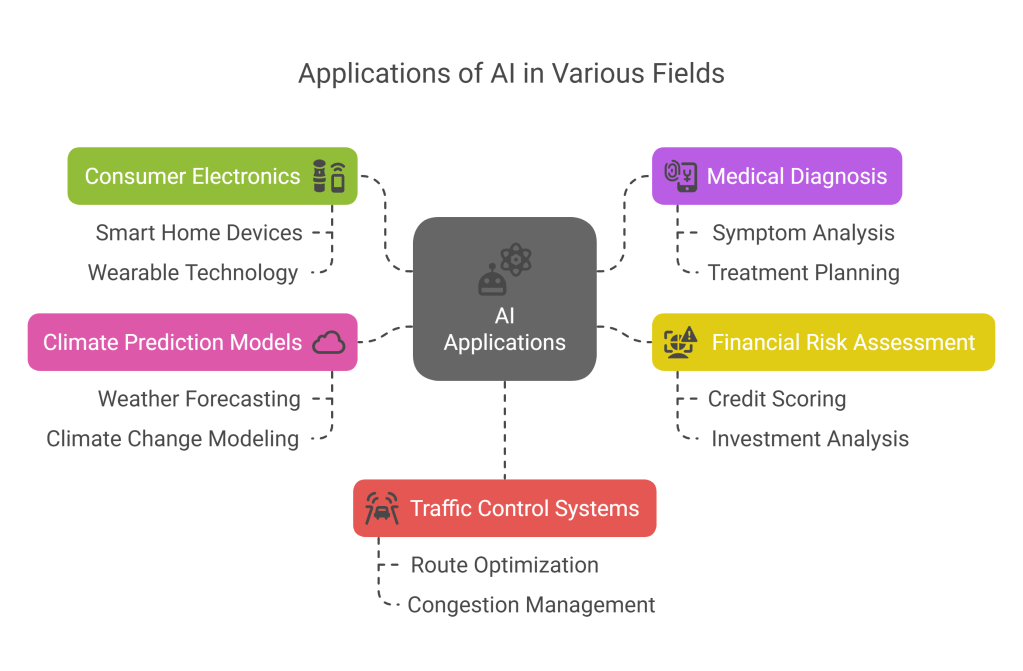

This approach is particularly useful for:

- Control systems in consumer electronics

- Medical diagnosis with uncertain symptoms

- Financial risk assessment

- Climate prediction models

- Traffic control systems

Fuzzy logic often excels in situations where human expertise involves subjective judgment rather than precise mathematical models.
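Partial truth is easiest to see with a membership function. This Python sketch assumes triangular membership functions and invented "warm" and "hot" temperature sets; it combines them with the classic Zadeh operators (AND as min, OR as max), which are one common choice among several.

```python
def triangular(x, a, b, c):
    """Triangular membership: 0 outside [a, c], rising linearly to 1 at the peak b."""
    if x <= a or x >= c:
        return 0.0
    if x <= b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)

# Hypothetical fuzzy sets for room temperature in degrees Celsius.
def warm(t):
    return triangular(t, 15.0, 22.0, 29.0)

def hot(t):
    return triangular(t, 24.0, 32.0, 40.0)

t = 25.5
# Zadeh operators: AND = min, OR = max (NOT would be 1 - x).
print(warm(t), hot(t), min(warm(t), hot(t)), max(warm(t), hot(t)))  # → 0.5 0.1875 0.1875 0.5
```

At 25.5 °C the room is "warm" to degree 0.5 and "hot" to degree 0.1875 at the same time, which a true/false rule could not express.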

Knowledge Representation

Knowledge representation concerns how to encode information about the world in a form that computers can use to solve complex tasks. This involves developing formal languages, ontologies, and data structures that capture:

- Facts about objects, events, and their properties

- Relationships between entities

- Rules governing domains

- Uncertainty and beliefs

- Temporal and spatial reasoning

Effective knowledge representation schemes underpin many AI systems, from semantic web technologies to advanced reasoning engines.

Planning and Scheduling

AI planning systems determine sequences of actions needed to achieve specific goals. These technologies optimize processes by considering constraints, resources, and objectives to produce efficient schedules and action plans. Applications include:

- Supply chain optimization

- Project management

- Transportation logistics

- Manufacturing production planning

- Workforce scheduling

- Mission planning for aerospace and defense

Advanced planning algorithms can handle uncertainty and adapt to changing conditions in real time.

Speech Recognition

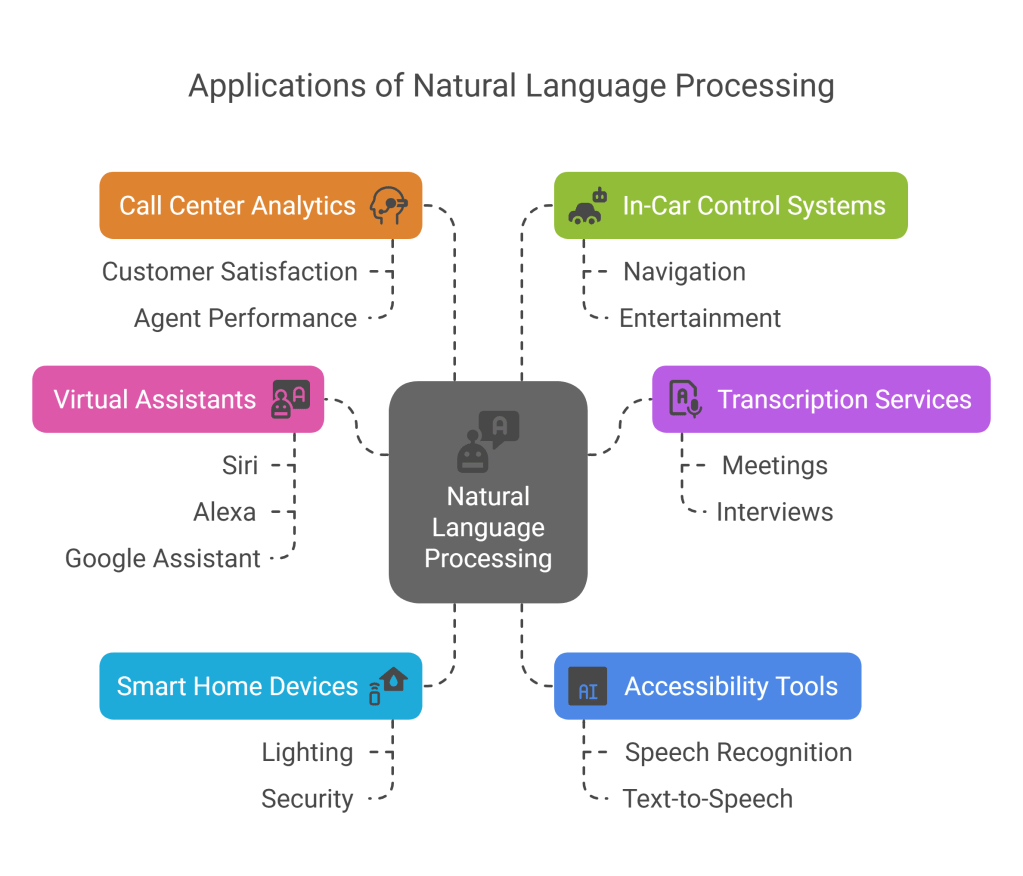

Speech recognition technologies convert spoken language into text, enabling voice-controlled interfaces and transcription services. Modern systems leverage deep learning to achieve remarkable accuracy across different accents, languages, and acoustic environments.

This technology powers:

- Virtual assistants (Siri, Alexa, Google Assistant)

- Transcription services for meetings and interviews

- Voice-controlled smart home devices

- Accessibility tools for those with disabilities

- Call center analytics

- In-car control systems

Recent advances have reduced error rates dramatically, making voice interfaces increasingly practical for everyday use.
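Speech recognition error rates are usually reported as word error rate (WER): the number of word substitutions, deletions, and insertions needed to turn the system's transcript into the reference, divided by the reference length. A minimal Python sketch of that calculation, using a word-level edit distance, might look like this (the example sentences are made up):

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / words in reference.
    Assumes a non-empty reference transcript."""
    ref, hyp = reference.split(), hypothesis.split()
    # d[i][j] = edits to turn the first i reference words into the first j hypothesis words
    d = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        d[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        d[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            d[i][j] = min(d[i - 1][j] + 1,         # deletion
                          d[i][j - 1] + 1,         # insertion
                          d[i - 1][j - 1] + cost)  # substitution or match
    return d[len(ref)][len(hyp)] / len(ref)

print(word_error_rate("turn on the kitchen lights", "turn on kitchen light"))  # → 0.4
```

Here one deletion ("the") and one substitution ("lights" heard as "light") give 2 errors over 5 reference words.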

AI Ethics

AI Ethics addresses the moral implications of creating and deploying intelligent systems. This interdisciplinary field examines questions such as:

- How to ensure AI systems are fair and unbiased

- Appropriate levels of transparency and explainability

- Privacy protection in data-hungry systems

- Accountability for AI decisions

- Impact of automation on employment

- Long-term risks of advanced AI

- Rights and responsibilities regarding autonomous systems

As AI becomes more powerful and widespread, ethical considerations have moved from theoretical discussions to practical governance frameworks and regulatory policies.

Cognitive Computing

Cognitive computing aims to simulate human thought processes, creating systems that can reason, remember, learn from experiences, and interact naturally with humans. These systems often integrate multiple AI approaches to achieve more human-like intelligence.

Cognitive systems typically feature:

- Contextual understanding

- Adaptive learning

- Human-computer interaction

- Pattern recognition across multiple data types

- Hypothesis generation and testing

IBM’s Watson exemplifies cognitive computing’s potential, with applications ranging from healthcare diagnostics to customer service.

The Second Sphere: Machine Learning

Machine Learning (ML) represents a paradigm shift from traditional programming approaches. Rather than following explicitly programmed instructions, ML systems learn patterns from data, improving their performance over time through experience.

Supervised Learning

Supervised learning involves training algorithms on labeled datasets, where the desired output is known. The system learns to map inputs to outputs, gradually improving its predictive accuracy. Common supervised learning tasks include:

- Classification (categorizing inputs into discrete classes)

- Regression (predicting continuous values)

- Sequence prediction (forecasting the next values in a series)

This approach powers countless applications, from spam filters and credit scoring to medical diagnosis and sales forecasting.
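One of the simplest supervised learners is k-nearest neighbors: it "learns" by storing labeled examples and predicts the majority label among the closest ones. The sketch below assumes a tiny invented dataset where each email has already been reduced to two numeric features; real spam filters use many more features and usually other algorithms.

```python
import math
from collections import Counter

# Hypothetical labeled data: (feature vector, label) pairs.
TRAIN = [
    ((1.0, 1.0), "spam"), ((1.2, 0.8), "spam"), ((0.9, 1.1), "spam"),
    ((4.0, 4.2), "ham"), ((4.1, 3.9), "ham"), ((3.8, 4.0), "ham"),
]

def knn_predict(x, train, k=3):
    """Predict the majority label among the k training points nearest to x."""
    nearest = sorted(train, key=lambda pair: math.dist(x, pair[0]))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

print(knn_predict((1.1, 0.9), TRAIN))  # → spam
print(knn_predict((3.9, 4.1), TRAIN))  # → ham
```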

Unsupervised Learning

Unsupervised learning tackles problems where the training data is unlabeled. The algorithm must discover patterns, relationships, or structures within the data without explicit guidance. Key unsupervised learning techniques include:

- Clustering (grouping similar data points)

- Dimensionality reduction (simplifying data while preserving important features)

- Anomaly detection (identifying unusual patterns)

- Association rule learning (discovering relationships between variables)

These methods help uncover hidden patterns in customer behavior, scientific data, or network traffic, revealing insights that might otherwise remain invisible.

Semi-Supervised Learning

Semi-supervised learning bridges supervised and unsupervised approaches by using a small amount of labeled data alongside a larger pool of unlabeled data. This approach is particularly valuable when:

- Labeling data is expensive or time-consuming

- Limited labeled examples are available

- Abundant unlabeled data exists

By leveraging the structure in unlabeled data, semi-supervised learning can, in favorable settings, approach the performance of fully supervised methods while requiring significantly less labeled data.

Reinforcement Learning

Reinforcement learning involves training agents to make sequences of decisions by rewarding desired behaviors and penalizing undesired ones. Unlike supervised learning, there’s no labeled dataset—instead, the agent learns through trial and error interactions with an environment.

This approach has yielded breakthroughs in:

- Game playing (chess, Go, video games)

- Robotics and control systems

- Autonomous vehicles

- Resource management

- Personalized recommendations

- Financial trading strategies

Reinforcement learning’s strength lies in domains where optimal behavior requires long-term planning and balancing exploration with exploitation.
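Trial-and-error learning and the exploration/exploitation balance both show up in tabular Q-learning. This sketch assumes a hypothetical five-cell corridor where reaching the last cell earns a reward of 1; the agent explores with an epsilon-greedy policy and updates its action-value table with the standard Q-learning rule. The hyperparameters are illustrative, not tuned.

```python
import random

N_STATES, GOAL = 5, 4          # corridor cells 0..4; reaching cell 4 ends the episode
ACTIONS = (-1, +1)             # index 0 = move left, index 1 = move right
ALPHA, GAMMA, EPSILON = 0.5, 0.9, 0.2

def step(state, action_idx):
    nxt = min(max(state + ACTIONS[action_idx], 0), N_STATES - 1)
    reward = 1.0 if nxt == GOAL else 0.0
    return nxt, reward, nxt == GOAL

def train(episodes=500, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        s, done, steps = 0, False, 0
        while not done and steps < 100:
            # Epsilon-greedy: explore at random, otherwise exploit (ties broken randomly).
            if rng.random() < EPSILON:
                a = rng.randrange(2)
            else:
                a = max(range(2), key=lambda i: (q[s][i], rng.random()))
            s2, r, done = step(s, a)
            target = r if done else r + GAMMA * max(q[s2])
            q[s][a] += ALPHA * (target - q[s][a])  # Q-learning update
            s, steps = s2, steps + 1
    return q

Q = train()
policy = ["left" if Q[s][0] > Q[s][1] else "right" for s in range(GOAL)]
print(policy)  # → ['right', 'right', 'right', 'right']
```

No labeled "correct moves" are ever given; the preference for moving right emerges from the delayed reward propagating backward through the value table.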

Decision Trees

Decision trees are hierarchical models that make decisions by following a tree-like graph of choices and their possible consequences. Their interpretable structure makes them valuable for:

- Customer segmentation

- Fraud detection

- Medical diagnosis

- Risk assessment

- Product recommendation

Ensemble methods such as random forests and gradient boosting machines build on decision trees, combining many trees for improved accuracy and robustness.
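Each internal node of a tree is chosen by asking which question best separates the classes. A common criterion is Gini impurity, which CART-style trees use. The sketch below, assuming invented transaction amounts and fraud labels, searches one numeric feature for the threshold with the lowest weighted impurity; a full tree would repeat this on every feature and recurse into each side.

```python
from collections import Counter

def gini(labels):
    """Gini impurity: 1 - sum of squared class proportions (0 means a pure node)."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def best_split(xs, ys):
    """Return (weighted impurity, threshold) for the best 'x <= threshold' split."""
    best = (float("inf"), None)
    for t in sorted(set(xs))[:-1]:  # the largest value would leave the right side empty
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(ys)
        best = min(best, (score, t))
    return best

# Hypothetical data: transaction amount vs. fraud label.
amounts = [20, 35, 50, 400, 650, 900]
labels = ["ok", "ok", "ok", "fraud", "fraud", "ok"]
score, threshold = best_split(amounts, labels)
print(threshold)  # → 50
```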

Support Vector Machines

Support Vector Machines (SVMs) find the hyperplane that separates different classes with the largest possible margin, meaning the widest gap to the nearest training points (the support vectors). Known for their effectiveness in high-dimensional spaces, SVMs excel at:

- Text categorization

- Image classification

- Biological classification

- Financial analysis

While somewhat overshadowed by neural networks for certain tasks, SVMs remain powerful for problems with clear boundaries and limited training data.

Dimensionality Reduction

Dimensionality reduction techniques compress high-dimensional data into lower-dimensional representations while preserving important information. This addresses the “curse of dimensionality” that can hamper machine learning performance. Popular methods include:

- Principal Component Analysis (PCA)

- t-Distributed Stochastic Neighbor Embedding (t-SNE)

- Autoencoders

- Uniform Manifold Approximation and Projection (UMAP)

These techniques improve computational efficiency, visualization, and often the performance of subsequent machine learning tasks.

Ensemble Learning

Ensemble learning combines multiple models to obtain better predictive performance than any single model could achieve alone. By aggregating the predictions of diverse models, ensembles reduce errors and increase robustness. Common ensemble methods include:

- Bagging (training models on random subsets of data)

- Boosting (building sequential models that correct previous errors)

- Stacking (using a meta-model to combine base models)

Ensemble approaches consistently rank among the top performers in machine learning competitions and real-world applications.

Feature Engineering

Feature engineering involves transforming raw data into features that better represent the underlying problem, improving model performance. This crucial step includes:

- Creating interaction terms between variables

- Discretizing continuous variables

- Encoding categorical variables

- Extracting domain-specific features

- Normalizing and scaling data

- Managing missing values

Despite advances in automated feature learning, skilled feature engineering remains a competitive advantage in many machine learning projects.
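Two of the transformations listed above, encoding categorical variables and scaling numeric ones, fit in a few lines of Python. This is a minimal sketch with made-up city and income columns; libraries such as scikit-learn provide production versions of both.

```python
def one_hot(values):
    """Encode a categorical column as 0/1 indicator columns, one per category."""
    categories = sorted(set(values))
    return categories, [[1 if v == c else 0 for c in categories] for v in values]

def min_max_scale(values):
    """Rescale a numeric column to [0, 1]; a constant column maps to all zeros."""
    lo, hi = min(values), max(values)
    span = hi - lo
    return [(v - lo) / span if span else 0.0 for v in values]

cities = ["Paris", "Lima", "Paris", "Oslo"]
incomes = [30_000, 50_000, 70_000, 40_000]
print(one_hot(cities))         # → (['Lima', 'Oslo', 'Paris'], [[0, 0, 1], [1, 0, 0], [0, 0, 1], [0, 1, 0]])
print(min_max_scale(incomes))  # → [0.0, 0.5, 1.0, 0.25]
```

One-hot encoding avoids implying an order between categories, and scaling keeps large-valued features from dominating distance- or gradient-based models.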

Classification

Classification algorithms assign inputs to discrete categories or classes. This fundamental machine learning task has countless applications:

- Email spam detection

- Sentiment analysis

- Disease diagnosis

- Credit approval

- Image recognition

- Document categorization

The choice of classification algorithm depends on factors like dataset size, feature dimensionality, and interpretability requirements.

Regression

Regression predicts continuous quantities rather than discrete categories. These models estimate relationships among variables to forecast numerical outcomes such as:

- House prices

- Sales volume

- Temperature

- Financial metrics

- User engagement

- Resource consumption

From simple linear regression to complex nonlinear models, regression techniques form the backbone of predictive analytics across industries.
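Simple linear regression has a closed-form least-squares solution: the slope is the covariance of x and y divided by the variance of x, and the intercept makes the line pass through the point of means. A short Python sketch, using invented house-size and price data that lie exactly on a line so the result is easy to check:

```python
def fit_line(xs, ys):
    """Ordinary least squares for y ≈ slope * x + intercept (single feature)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    # slope = covariance(x, y) / variance(x); the 1/n factors cancel
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical data: size in m^2 vs. price in thousands, generated from price = 3 * size + 50.
sizes = [50, 80, 100, 120]
prices = [200, 290, 350, 410]
slope, intercept = fit_line(sizes, prices)
print(slope, intercept)  # → 3.0 50.0
```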

Clustering

Clustering algorithms group similar data points without prior labeling. These unsupervised techniques reveal natural structures within data, enabling:

- Customer segmentation

- Image compression

- Document organization

- Genetic sequence analysis

- Network topology detection

- Anomaly identification

Popular algorithms include K-means, hierarchical clustering, DBSCAN, and Gaussian mixture models.
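K-means (Lloyd's algorithm) alternates two steps: assign each point to its nearest centroid, then move each centroid to the mean of its points. The Python sketch below assumes hand-picked starting centroids and a fixed iteration count for simplicity; real implementations typically use random or k-means++ initialization and stop when assignments no longer change.

```python
import math

def kmeans(points, centroids, iterations=10):
    """Lloyd's algorithm: alternate nearest-centroid assignment and mean updates."""
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Move each centroid to the mean of its cluster; leave it in place if the cluster is empty.
        centroids = [
            tuple(sum(coord) / len(c) for coord in zip(*c)) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
    return centroids, clusters

points = [(1, 1), (1.5, 2), (1, 0), (8, 8), (9, 8.5), (8, 9.5)]
centroids, clusters = kmeans(points, centroids=[(0, 0), (10, 10)])
print(clusters)
```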

The Third Sphere: Neural Networks

Neural networks, inspired by biological brain structures, consist of interconnected nodes (neurons) organized in layers. These powerful models excel at recognizing patterns in complex data.

Perceptrons

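The perceptron, introduced in the 1950s, represents the simplest form of a neuron model for binary classification: it computes a weighted sum of its inputs and outputs 1 if that sum exceeds a threshold. A single perceptron can only separate classes that are linearly separable (it cannot learn XOR, for example), but perceptrons form the building blocks of more sophisticated neural architectures.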
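The perceptron's learning rule nudges each weight by the prediction error times the corresponding input. This Python sketch trains a two-input perceptron on the logical AND function (linearly separable, so the rule converges); the learning rate and epoch count are arbitrary choices for the example.

```python
def predict(w, b, x):
    # Step activation: fire (1) if the weighted sum plus bias is positive.
    return 1 if w[0] * x[0] + w[1] * x[1] + b > 0 else 0

def train_perceptron(samples, epochs=10, lr=1.0):
    """Perceptron rule: w += lr * (target - prediction) * x, and likewise for the bias."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, target in samples:
            err = target - predict(w, b, x)
            w = [w[0] + lr * err * x[0], w[1] + lr * err * x[1]]
            b += lr * err
    return w, b

AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
w, b = train_perceptron(AND)
print([predict(w, b, x) for x, _ in AND])  # → [0, 0, 0, 1]
```

Running the same loop on XOR labels never settles on correct weights, which is exactly the limitation that motivated multi-layer networks.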

Multi-Layer Perceptron (MLP)

Multi-Layer Perceptrons extend the simple perceptron by adding hidden layers between input and output. This architecture enables MLPs to model complex non-linear relationships and solve problems beyond the capability of single perceptrons.

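MLPs are universal function approximators: with a non-linear activation and enough hidden units, even a single hidden layer can approximate any continuous function on a bounded domain to arbitrary accuracy. They find applications in: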

- Financial prediction

- Speech recognition

- Medical diagnosis

- Control systems

- Pattern recognition

Backpropagation

Backpropagation, the workhorse of neural network training, efficiently calculates how each network parameter affects the overall error. By propagating error gradients backward through the network, this algorithm enables effective learning in deep architectures.

The development of efficient backpropagation algorithms in the 1980s helped overcome early limitations in neural network training, laying the groundwork for today’s deep learning revolution.
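Backpropagation is the chain rule applied from the loss back toward each weight. The sketch below assumes a deliberately tiny network (one tanh hidden unit, one linear output, squared-error loss) so the gradients can be written out by hand, and then checks them against finite-difference estimates, a standard way to validate a backprop implementation.

```python
import math

def forward(w1, w2, x, t):
    h = math.tanh(w1 * x)   # hidden unit
    y = w2 * h              # linear output
    loss = (y - t) ** 2     # squared error
    return h, y, loss

def backward(w1, w2, x, t):
    """Propagate dLoss/dy backward through each operation to get weight gradients."""
    h, y, _ = forward(w1, w2, x, t)
    dloss_dy = 2 * (y - t)
    dloss_dw2 = dloss_dy * h
    dloss_dh = dloss_dy * w2
    dloss_dw1 = dloss_dh * (1 - h ** 2) * x   # d tanh(u)/du = 1 - tanh(u)^2
    return dloss_dw1, dloss_dw2

w1, w2, x, t = 0.5, -1.2, 1.5, 0.3
g1, g2 = backward(w1, w2, x, t)

# Central finite differences as an independent check.
eps = 1e-6
n1 = (forward(w1 + eps, w2, x, t)[2] - forward(w1 - eps, w2, x, t)[2]) / (2 * eps)
n2 = (forward(w1, w2 + eps, x, t)[2] - forward(w1, w2 - eps, x, t)[2]) / (2 * eps)
print(abs(g1 - n1) < 1e-6, abs(g2 - n2) < 1e-6)  # → True True
```

The backward pass reuses values from the forward pass (here `h` and `y`), which is what makes backpropagation efficient compared with estimating every gradient numerically.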

Activation Functions

Activation functions introduce non-linearity into neural networks, enabling them to learn complex patterns. Common functions include:

- ReLU (Rectified Linear Unit)

- Sigmoid

- Tanh

- Leaky ReLU

- Softmax (for output layers)

The choice of activation function significantly affects network performance. ReLU and its variants dominate modern architectures because they are cheap to compute and, unlike sigmoid and tanh, do not saturate for positive inputs, which mitigates the vanishing-gradient problem.
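The functions listed above are short enough to write directly. This Python sketch implements them for scalars (softmax for a list of scores), using the common trick of subtracting the maximum score inside softmax so that large inputs do not overflow.

```python
import math

def relu(x):
    return max(0.0, x)

def leaky_relu(x, slope=0.01):
    # Small non-zero slope for negative inputs keeps a gradient flowing.
    return x if x > 0 else slope * x

def sigmoid(x):
    # Naive form: squashes to (0, 1); math.exp can overflow for very negative x.
    return 1.0 / (1.0 + math.exp(-x))

def softmax(logits):
    """Turn scores into probabilities; subtracting the max avoids overflow."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

print(relu(-2.0), relu(3.0), leaky_relu(-2.0))  # → 0.0 3.0 -0.02
print(sigmoid(0.0), math.tanh(0.0))             # → 0.5 0.0
probs = softmax([2.0, 1.0, 0.1])
print(round(sum(probs), 6), probs.index(max(probs)))  # → 1.0 0
```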

Convolutional Neural Networks (CNNs)

CNNs specialize in processing grid-like data such as images. By using local receptive fields, shared weights, and pooling layers, these networks efficiently capture spatial hierarchies of features. CNNs have revolutionized:

- Image classification

- Object detection

- Face recognition

- Video analysis

- Medical imaging

- Document processing

The convolutional architecture’s inductive bias toward local patterns makes CNNs remarkably effective for visual and spatial data.
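Local receptive fields, shared weights, and pooling can all be seen in a few lines. This pure-Python sketch assumes a tiny synthetic image containing a vertical edge and a hand-made 2x2 edge-detecting kernel; in a real CNN the kernel values are learned and computation runs on optimized tensor libraries. Like most deep-learning frameworks, it computes cross-correlation (the kernel is not flipped).

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution: the same kernel weights are applied at every position."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

def max_pool(fmap, size=2):
    """Non-overlapping max pooling: keep the strongest response in each window."""
    return [
        [max(fmap[i + a][j + b] for a in range(size) for b in range(size))
         for j in range(0, len(fmap[0]) - size + 1, size)]
        for i in range(0, len(fmap) - size + 1, size)
    ]

# Synthetic 5x5 image: dark on the left, bright on the right.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
kernel = [[-1, 1], [-1, 1]]        # responds to dark-to-bright vertical edges
features = conv2d(image, kernel)
print(features)            # → [[0, 2, 0, 0], [0, 2, 0, 0], [0, 2, 0, 0], [0, 2, 0, 0]]
print(max_pool(features))  # → [[2, 0], [2, 0]]
```

The feature map lights up only where the edge is, and pooling keeps that response while shrinking the map, which is how stacked layers build up spatial hierarchies.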

Long Short-Term Memory (LSTM)

LSTM networks address the challenge of modeling sequential data with long-term dependencies. By introducing memory cells with forget, input, and output gates, LSTMs can selectively remember or forget information over extended sequences. Applications include:

- Language modeling

- Speech recognition

- Machine translation

- Time series prediction

- Music generation

- Video captioning

LSTMs and their variants have been crucial for advances in natural language processing and other sequence modeling tasks.
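The forget, input, and output gates described above can be written out for a single scalar LSTM cell. In this Python sketch the weights are arbitrary illustrative values, not trained ones, and a real LSTM uses vectors and weight matrices; the point is how the cell state `c` is partly kept, partly overwritten, and then exposed through the output gate.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def lstm_step(x, h_prev, c_prev, p):
    """One step of a scalar LSTM cell; p maps each gate to (input weight, recurrent weight, bias)."""
    f = sigmoid(p["f"][0] * x + p["f"][1] * h_prev + p["f"][2])    # forget gate
    i = sigmoid(p["i"][0] * x + p["i"][1] * h_prev + p["i"][2])    # input gate
    o = sigmoid(p["o"][0] * x + p["o"][1] * h_prev + p["o"][2])    # output gate
    g = math.tanh(p["g"][0] * x + p["g"][1] * h_prev + p["g"][2])  # candidate value
    c = f * c_prev + i * g   # keep part of the old memory, add part of the new
    h = o * math.tanh(c)     # hidden state: a gated view of the memory
    return h, c

# Hypothetical parameters chosen only for illustration.
params = {"f": (0.5, 0.1, 1.0), "i": (1.0, 0.2, 0.0),
          "o": (0.8, 0.3, 0.0), "g": (1.2, -0.4, 0.0)}
h, c = 0.0, 0.0
for x in [1.0, 0.0, -1.0]:
    h, c = lstm_step(x, h, c, params)
    print(round(h, 4), round(c, 4))
```

If the forget gate saturates near 1 and the input gate near 0, the cell state passes through unchanged, which is how an LSTM can carry information across many time steps.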

Generative Adversarial Networks (GANs)

GANs introduce a novel training approach where two networks—a generator and discriminator—compete in a minimax game. The generator creates synthetic data while the discriminator attempts to distinguish real from fake examples. Through this adversarial process, GANs learn to generate remarkably realistic:

- Photorealistic images

- Art and design concepts

- Synthetic data for training other models

- Text-to-image transformations

- Video sequences

- Audio samples

The GAN framework has sparked numerous innovations in generative modeling and representation learning.

Recurrent Neural Networks (RNN)

RNNs process sequential data by maintaining an internal state that captures information about previous inputs. This architecture makes them suitable for:

- Text generation

- Sentiment analysis

- Speech recognition

- Machine translation

- Time series forecasting

- Anomaly detection in sequences

While plain RNNs have largely been superseded for many tasks by gated variants such as LSTMs and, more recently, by transformers, they established the foundation for neural sequence modeling.

Self-Organizing Maps (SOMs)

Self-Organizing Maps perform dimensionality reduction while preserving topological relationships in the data. These unsupervised neural networks create feature maps where similar inputs activate nearby neurons. SOMs are valuable for:

- Visualization of high-dimensional data

- Cluster analysis

- Feature detection

- Exploratory data analysis

- Pattern recognition

- Document organization

Their ability to represent complex relationships in a visually interpretable form makes SOMs useful for data exploration.

The Fourth Sphere: Deep Learning

Deep Learning represents a subset of machine learning focused on artificial neural networks with multiple layers. The “deep” in deep learning refers to these numerous layers that progressively extract higher-level features from raw input.

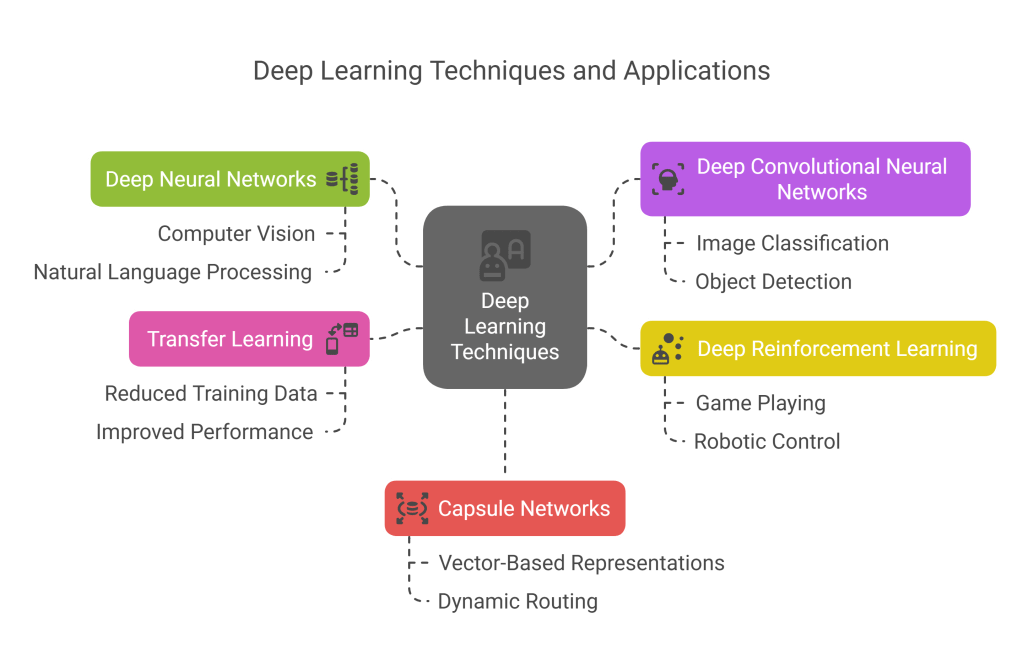

Deep Neural Networks (DNNs)

Deep Neural Networks contain multiple hidden layers between input and output, enabling them to learn hierarchical representations of data. This depth allows DNNs to automatically discover intricate structures in high-dimensional data with far less manual feature engineering.

The resurgence of DNNs in the 2010s—driven by increased computational power, larger datasets, and algorithmic improvements—has transformed the AI landscape, enabling previously impossible capabilities in computer vision, natural language processing, and reinforcement learning.

Deep Convolutional Neural Networks (DCNNs)

DCNNs extend conventional CNNs with greater depth, often incorporating specialized layers and architectures. Landmark models like AlexNet, VGGNet, ResNet, and Inception demonstrated that deeper convolutional architectures could achieve unprecedented performance on visual recognition tasks.

These networks have enabled:

- Near-human accuracy in image classification

- Real-time object detection

- Semantic segmentation

- Style transfer

- Super-resolution

- Medical image analysis

Innovations like residual connections and batch normalization have helped overcome the challenges of training very deep networks, while depthwise separable convolutions reduce their computational cost.

Deep Reinforcement Learning

Deep Reinforcement Learning combines reinforcement learning with deep neural networks, enabling agents to learn optimal behaviors directly from high-dimensional sensory inputs. This approach has produced remarkable results in:

- Game playing (defeating world champions in Go and Dota 2, and reaching Grandmaster level in StarCraft II)

- Robotic control

- Autonomous navigation

- Resource management

- Recommendation systems

- Scientific discovery

Companies like DeepMind have demonstrated that deep reinforcement learning can solve complex problems requiring both perception and long-term strategic planning.

Transfer Learning

Transfer learning leverages knowledge gained from solving one problem to improve performance on a related task. By fine-tuning pre-trained models rather than starting from scratch, this approach:

- Reduces training data requirements

- Accelerates learning

- Improves performance on tasks with limited data

- Enables adaptation across domains

The availability of powerful pre-trained models has democratized access to state-of-the-art AI capabilities, allowing organizations to implement advanced solutions with modest computational resources.

Capsule Networks

Capsule Networks attempt to address limitations of traditional CNNs by encoding spatial relationships between features. Unlike conventional networks that can lose important spatial hierarchies, capsule networks preserve these relationships through:

- Vector-based representations

- Dynamic routing between capsules

- Pose matrices

While still emerging, capsule networks show promise for improved robustness to perspective changes and more efficient learning from limited examples.

The Fifth Sphere: Generative AI

At the core of today’s AI revolution lies Generative AI—systems capable not just of analyzing existing data but creating new content that never existed before. This represents perhaps the most visible and transformative frontier in artificial intelligence.

Language Modeling

Modern language models learn probabilistic representations of text, capturing patterns in word sequences and semantic relationships. These models power:

- Text completion and generation

- Dialogue systems

- Content summarization

- Question answering

- Code generation

- Creative writing assistance

The scale and capability of language models have increased dramatically, from simple n-gram models to massive transformer-based architectures with hundreds of billions of parameters.
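At the simple end of that spectrum, an n-gram model estimates next-word probabilities from counts. This Python sketch builds a bigram model from a made-up three-sentence corpus, with sentence-boundary markers and no smoothing; large transformer models estimate the same kind of conditional distribution, but with learned parameters and far longer context.

```python
from collections import Counter, defaultdict

def train_bigram(corpus):
    """Estimate P(next word | previous word) from raw counts (no smoothing)."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = ["<s>"] + sentence.lower().split() + ["</s>"]  # add start/end markers
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    model = {}
    for prev, nexts in counts.items():
        total = sum(nexts.values())
        model[prev] = {w: n / total for w, n in nexts.items()}
    return model

corpus = ["the cat sat", "the cat ran", "the dog sat"]
model = train_bigram(corpus)
print(model["the"])  # → {'cat': 0.6666666666666666, 'dog': 0.3333333333333333}
print(model["cat"])  # → {'sat': 0.5, 'ran': 0.5}
```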

Transfer Learning (in Generative AI)

Transfer learning is particularly powerful in generative AI, where foundation models trained on vast datasets can be adapted to specific downstream tasks. This paradigm has:

- Reduced the data requirements for specialized applications

- Enabled rapid development of new capabilities

- Democratized access to state-of-the-art generative technologies

- Created an ecosystem of specialized adaptations of general models

Organizations can now fine-tune pre-trained generative models on domain-specific data to create customized solutions without prohibitive computational costs.

Transformer Architecture

The transformer architecture revolutionized machine learning by replacing recurrence with attention, a mechanism that allows models to weigh the importance of different parts of the input when generating each part of the output. Key advantages include:

- Parallel processing (unlike sequential RNNs)

- Ability to capture long-range dependencies

- Scalability to massive model sizes

- Effectiveness across multiple domains (text, images, audio)

Since their introduction in 2017, transformers have become the dominant architecture for state-of-the-art AI systems, powering breakthroughs in language models, multimodal systems, and even biological research.

Self-attention Mechanism

Self-attention allows models to focus on relevant parts of the input when generating each output element. This mechanism enables:

- Capturing long-distance relationships

- Processing context bidirectionally (in encoder models such as BERT; generative decoders attend only to earlier tokens)

- Learning complex patterns without explicit feature engineering

- Attention weights that can be inspected to see where a model focuses, though they offer only a partial explanation of its behavior

The self-attention mechanism’s flexibility and effectiveness have made it a cornerstone of modern deep learning architectures.
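The core computation is scaled dot-product attention, softmax(QKᵀ / √d_k)·V, where queries Q, keys K, and values V are all projections of the same input sequence. This pure-Python sketch shows a single head, assuming toy 2-dimensional token embeddings and identity projection matrices so the weights are easy to read; real transformers learn the projections, use many heads, and apply masking in decoders.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]

def matmul(a, b):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def self_attention(X, Wq, Wk, Wv):
    """Single-head scaled dot-product self-attention over the rows of X."""
    Q, K, V = matmul(X, Wq), matmul(X, Wk), matmul(X, Wv)
    d_k = len(K[0])
    out, weights = [], []
    for q in Q:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in K]
        w = softmax(scores)  # how strongly this position attends to every position
        weights.append(w)
        out.append([sum(wi * v[j] for wi, v in zip(w, V)) for j in range(len(V[0]))])
    return out, weights

# Three toy token embeddings; identity projections keep the example readable.
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
out, weights = self_attention(X, identity, identity, identity)
for row in weights:
    print([round(w, 3) for w in row])
```

Every output row is a weighted average of all value vectors, so each position can draw on any other position in a single step, regardless of distance.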

Natural Language Understanding

Natural Language Understanding goes beyond processing text to comprehending meaning, context, and nuance. Advanced generative models demonstrate capabilities including:

- Contextual comprehension

- Inference and reasoning

- Entity recognition and relationship extraction

- Sentiment and emotion understanding

- Cultural and social context awareness

These capabilities enable more natural and effective human-machine communication across applications from customer service to education.

Text Generation

Text generation capabilities have advanced from producing plausible-looking but incoherent text to creating contextually appropriate, stylistically consistent content that is often, though not always, factually accurate. Modern systems can generate:

- Creative fiction and poetry

- Technical documentation

- Marketing copy

- Personalized emails

- Educational materials

- Code and scripts

The quality of generated text continues to improve, with the latest models producing output that can be difficult to distinguish from human-written content.

Summarization

AI summarization condenses lengthy content while preserving key information and context. Advanced summarization models can:

- Extract essential points from documents

- Generate abstractive summaries using novel phrasing

- Stay faithful to the source, although generated summaries can still contain factual errors

- Adjust detail level to target length

- Preserve narrative flow and coherence

These capabilities help manage information overload by distilling critical insights from vast amounts of text.

Dialogue Systems

AI dialogue systems engage in contextual, multi-turn conversations that maintain coherence and relevance over extended interactions. Modern systems feature:

- Contextual memory of conversation history

- Persona consistency

- Domain expertise

- Ability to handle ambiguity and clarification requests

- Sensitivity to emotional tone and empathetic phrasing

From customer service chatbots to digital companions, dialogue systems are becoming increasingly sophisticated and natural communication partners.

Conclusion: The Interconnected Nature of AI Technologies

The AI Universe isn’t a simple taxonomy but rather an interconnected ecosystem where technologies from different spheres continuously influence and enhance each other. Breakthroughs in neural network architectures enable new applications in computer vision; advances in reinforcement learning create possibilities for more sophisticated robotics; and improvements in natural language processing feed into more capable dialogue systems.

As we look to the future, several trends are shaping the continued evolution of the AI Universe:

- Multimodal AI that seamlessly integrates understanding across text, images, audio, and other data types

- AI-human collaboration frameworks that leverage the complementary strengths of artificial and human intelligence

- Edge AI that brings intelligent capabilities to devices without requiring cloud connectivity

- Neuromorphic computing that draws even closer inspiration from biological neural systems

- AI democratization through more accessible tools, platforms, and pre-trained models

For organizations looking to harness the power of AI, understanding this interconnected landscape is crucial. Rather than viewing AI technologies as isolated tools, successful implementation requires recognizing how different components can work together to create comprehensive solutions.

The AI Universe continues to expand at an extraordinary pace, creating new possibilities for innovation, efficiency, and human augmentation. By understanding the foundations—from broad artificial intelligence concepts to specialized generative models—we can better navigate this complex and fascinating technological frontier.

Whether you’re just beginning your AI journey or looking to deepen your existing expertise, the layered structure of the AI Universe provides a framework for contextualizing new developments and identifying opportunities at the intersection of different technologies. As these technologies continue to mature and converge, we can expect even more remarkable capabilities to emerge from this vibrant and dynamic field.

Discover more from SkillWisor