Artificial Intelligence (AI) is no longer confined to the digital realm of cloud servers and massive data centers. A profound shift is underway, embedding intelligence directly into the hardware that powers our world. This fusion of AI and dedicated hardware is not just an incremental upgrade; it’s a fundamental transformation, unlocking unprecedented capabilities in efficiency, speed, responsiveness, and autonomy across countless sectors. From the smartphones in our pockets to the complex systems defending nations and the robots revolutionizing manufacturing, AI-powered hardware is the engine driving the next wave of technological innovation.

This in-depth exploration delves into the intricate world of embedded AI, examining how intelligence is woven into the silicon fabric of modern devices. We will explore the specialized processors making this possible, investigate the diverse applications transforming industries like defense, IoT, edge computing, and robotics, and consider the profound implications of this technological marriage.

Weaving Intelligence into Silicon: The Integration of AI into Hardware

Integrating sophisticated AI models into physical hardware is a multi-stage process, bridging the gap between complex algorithms trained in the cloud and the resource-constrained environments of edge devices. It involves careful development, optimization, and deployment strategies to ensure AI runs efficiently and effectively right where it’s needed.

- Model Development: The Cloud Foundation: The journey begins with training AI models, typically deep neural networks, using vast datasets. This computationally intensive phase usually occurs on powerful cloud servers equipped with high-performance GPUs or specialized AI accelerators. Frameworks like Google’s TensorFlow and Meta’s PyTorch provide the tools and libraries necessary to build, train, and validate these complex models, teaching them to recognize patterns, make predictions, or understand language. This foundational step creates the “brain” of the AI system.

- Model Optimization: Bridging the Cloud-Edge Gap: A fully trained AI model is often too large and computationally demanding for edge hardware such as smartphones or embedded sensors. A critical optimization phase therefore shrinks the model without significantly compromising its accuracy. Common techniques are quantization (reducing the numerical precision of the model’s weights, for example from 32-bit floats to 8-bit integers), pruning (removing less important neural connections), and knowledge distillation (training a smaller model to reproduce the outputs of a larger one). Toolchains and runtimes such as NVIDIA’s TensorRT and Apple’s Core ML, along with the Open Neural Network Exchange (ONNX) interchange format, help convert and optimize these models, making them smaller, faster, and more energy-efficient – ready for deployment on diverse hardware platforms.

- Hardware Mapping: Choosing the Right Engine: The optimized AI model must then be mapped onto specific hardware designed to execute its computations efficiently. This isn’t a one-size-fits-all process. The choice of hardware depends heavily on the application’s requirements for performance, power consumption, and cost.

  - CPUs (Central Processing Units): While versatile, standard CPUs are generally less efficient for the parallel computations inherent in many AI tasks. They handle general-purpose tasks within the device.

  - GPUs (Graphics Processing Units): Originally designed for rendering graphics, GPUs excel at parallel processing, making them well suited to both training deep learning models and running inference.

  - NPUs/TPUs/AI Accelerators (Neural Processing Units, Tensor Processing Units): These are the specialized champions of embedded AI. Chips like Google’s Edge TPU, Apple’s Neural Engine, Intel’s Movidius VPUs, and various ASICs (Application-Specific Integrated Circuits) are custom-built to accelerate neural network operations like matrix multiplications and convolutions at high speed and low power. They often form the core of AI capabilities in modern devices.

- Edge Integration: Embedding the Brain: With the model optimized and the hardware selected, the AI-enabled chip is embedded into the final product – a smartphone, a security camera, an industrial sensor, a drone, a smart speaker, or a vehicle. This integration brings the intelligence directly to the point where data is generated and action is needed. This concept, known as edge computing, contrasts with traditional cloud-based AI where data must be sent away for processing.

- Runtime Execution: Intelligence in Action: Once integrated, the device operates, collecting real-time data through its sensors – images, sound waves, temperature readings, GPS coordinates, etc. The embedded AI model processes this incoming data locally on the device’s specialized hardware. For instance, a smart camera might analyze video frames to detect a person, or a voice assistant processes audio to understand a command, all without necessarily needing to constantly communicate with a remote server. This local processing dramatically reduces latency (delay), enhances privacy (as sensitive data may not need to leave the device), and allows the device to function even without a stable internet connection.

- Feedback Loop and Continuous Learning: Some advanced embedded AI systems incorporate a feedback loop for improvement. This can take two forms:

  - On-device learning: The AI model can subtly adapt and learn over time based on new data encountered directly on the device, personalizing its behavior without cloud intervention.

  - Cloud-assisted improvement: Alternatively, aggregated or anonymized data (or model performance metrics) might be sent back to the cloud periodically. This data helps developers further refine and retrain the central AI model. Updated, improved models can then be pushed out to the fleet of devices, creating a cycle of continuous enhancement.

This intricate process transforms hardware from passive components into active, intelligent participants in our digital and physical worlds.
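To make the quantization step described above more concrete, here is a minimal sketch of symmetric 8-bit quantization in plain Python. It is an illustration under simplifying assumptions (one scale factor for the whole tensor, hypothetical weight values, hypothetical function names), not the procedure used by TensorRT, Core ML, or any other toolchain named in this article.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats onto integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    # The scale maps the largest magnitude onto 127; guard against an all-zero tensor.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the stored integers."""
    return [v * scale for v in quantized]


# Hypothetical weights, chosen only for illustration.
weights = [0.82, -1.27, 0.05, 0.0, 0.64]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print("int8 values:", q)
print("scale:", scale, "max round-trip error:", max_error)
```

Each weight now needs one byte instead of four, and the round-trip error stays within about half a quantization step. Production toolchains typically refine this idea with per-channel scales and calibration data.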

AI-Optimized Processors: The Heartbeat of Embedded Intelligence

The magic of embedded AI hinges on the development of specialized processors explicitly designed to handle the unique computational demands of artificial intelligence algorithms efficiently. These AI-optimized chips go beyond traditional CPU and GPU architectures, incorporating dedicated hardware and features that unlock real-time performance, energy savings, and adaptability.

- Dedicated AI Cores and Parallel Processing: At the core of these processors lie specialized units often referred to as NPUs (Neural Processing Units), TPUs (Tensor Processing Units), or simply AI engines. These cores are hardware-accelerated for the mathematical operations fundamental to neural networks, particularly matrix multiplications and vector operations. They work in parallel, processing vast amounts of data simultaneously, which is crucial for tasks like image recognition or natural language processing. This architectural specialization allows AI tasks to run significantly faster and more efficiently than on general-purpose processors. Furthermore, these chips are often optimized at the silicon level to work seamlessly with popular AI development frameworks like TensorFlow and PyTorch, ensuring that software models translate effectively into hardware execution.

- Real-Time Decision Making via On-Device Inference: A key advantage of AI-optimized hardware is its ability to perform inference (the process of using a trained AI model to make predictions on new data) directly on the device. By embedding inference engines like the Apple Neural Engine or the processing units within NVIDIA’s Jetson platform, devices can analyze sensor data and make decisions locally in milliseconds. This removes the network round trip involved in sending data to the cloud and waiting for a response, which is critical for applications requiring immediate action, such as autonomous driving collision avoidance or real-time object tracking in augmented reality glasses. Some designs also dedicate real-time processing blocks to handling these time-sensitive inputs and outputs with predictable latency.

- Energy Efficiency: Doing More with Less: Power consumption is a critical constraint for most embedded devices, especially battery-powered ones like smartphones, wearables, and IoT sensors. AI-optimized processors tackle this challenge through several strategies. AI-based dynamic voltage and frequency scaling (DVFS) techniques allow the chip to intelligently adjust its clock speed and voltage based on the current workload, consuming minimal power during idle periods and ramping up only when necessary. AI algorithms can also predict future usage patterns, proactively optimizing the performance-per-watt ratio. Furthermore, many designs incorporate low-power modes, offloading AI tasks from the main CPU to highly efficient, dedicated AI cores like those found in Qualcomm’s AI Engine, thereby saving significant energy.

- Adaptability and Task-Specific Optimization: Modern AI hardware is increasingly adaptable. AI itself can be used to manage how hardware resources are allocated, optimizing performance for the specific task at hand – dedicating more power to computer vision when analyzing an image versus natural language processing when listening for a voice command. Many processors now support modular AI models, allowing them to dynamically load and unload specific AI functionalities based on the current context or user needs, rather than keeping all possible models active simultaneously. Chips like Intel’s Movidius series or ARM’s Ethos-N processors are designed to reconfigure their processing pathways in real-time, adapting their performance characteristics based on the complexity and type of the AI workload they are currently executing.

These advancements in processor design are not just making AI possible on hardware; they are making it practical, paving the way for more intelligent, responsive, and efficient devices across the board.
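The energy argument behind DVFS can be sketched with the standard CMOS dynamic power estimate, P = a · C · V² · f. The operating points and capacitance below are hypothetical values chosen for illustration, and the selection rule is a simple threshold lookup rather than the learned workload predictor described above.

```python
# Hypothetical operating points: (frequency in MHz, core voltage in volts).
OPERATING_POINTS = [(200, 0.60), (600, 0.75), (1000, 0.90)]


def dynamic_power(freq_mhz, volts, switched_capacitance_nf=1.0, activity=1.0):
    """Estimate dynamic power in watts using P = a * C * V^2 * f."""
    capacitance_f = switched_capacitance_nf * 1e-9
    return activity * capacitance_f * volts ** 2 * freq_mhz * 1e6


def pick_operating_point(required_mhz):
    """Return the slowest operating point that still meets the demand."""
    for freq, volts in OPERATING_POINTS:
        if freq >= required_mhz:
            return freq, volts
    return OPERATING_POINTS[-1]  # saturate at the fastest available point


for demand in (150, 700):
    freq, volts = pick_operating_point(demand)
    watts = dynamic_power(freq, volts)
    print(f"demand {demand} MHz -> run at {freq} MHz, {volts} V, ~{watts:.3f} W")
```

Because voltage enters the formula squared, dropping to the lowest operating point in this made-up table cuts estimated dynamic power by more than a factor of ten compared with the fastest one, which is why scaling voltage together with frequency pays off.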

Transforming Industries: Applications of AI-Powered Hardware

The integration of AI directly into hardware unlocks transformative potential across a vast spectrum of industries. By bringing intelligence to the edge, devices gain autonomy, speed, and efficiency, leading to entirely new capabilities and optimized operations.

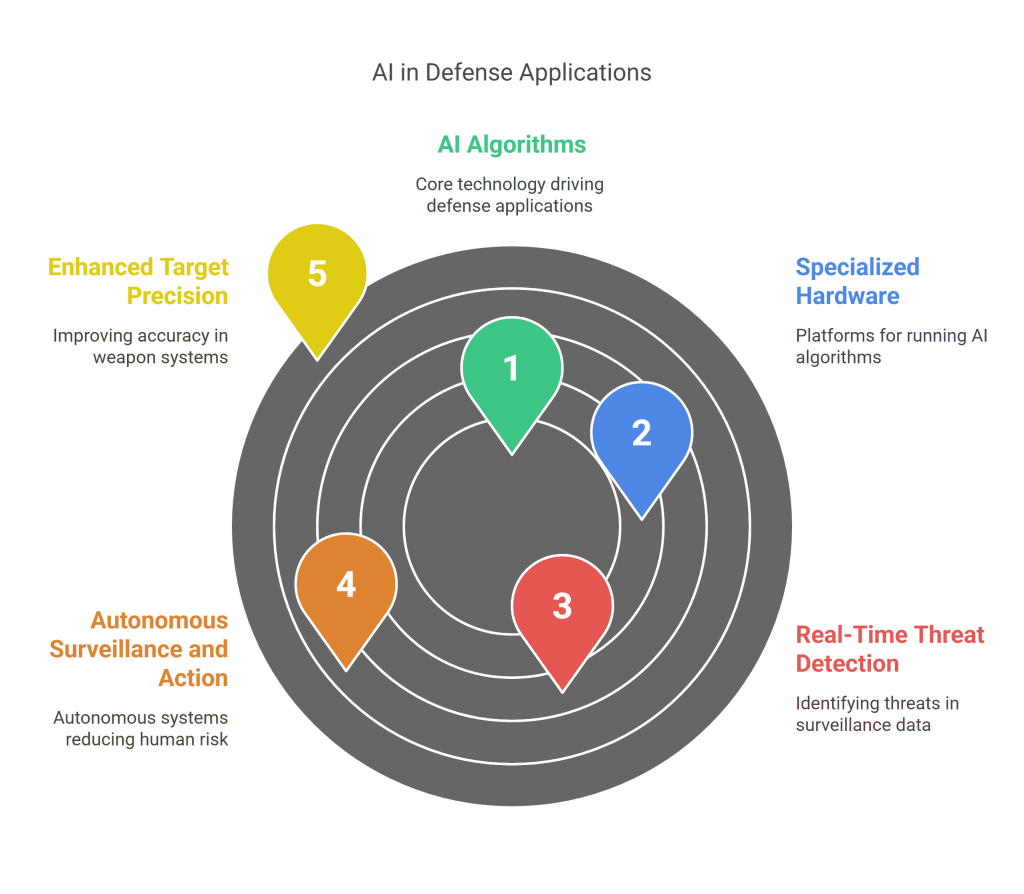

1. AI in Defense: Enhancing Security and Situational Awareness

The defense sector is rapidly adopting AI-powered hardware to gain a strategic edge, improve operational effectiveness, and enhance personnel safety.

- Real-Time Threat Detection: AI algorithms, particularly Convolutional Neural Networks (CNNs) for image analysis and Recurrent Neural Networks (RNNs) for sequence analysis, are run on specialized hardware embedded in drones, satellites, and ground-based sensor systems. This allows for the real-time processing of vast amounts of surveillance data to automatically identify potential threats, such as missile launches, troop movements, or unusual activity, far faster than human analysts could. Project Maven, for instance, integrated AI-hardware solutions to accelerate the identification of objects of interest in drone video feeds.

- Autonomous Surveillance and Action: AI-driven hardware enables new levels of autonomy in surveillance and response systems. Drones equipped with onboard AI processors running object recognition algorithms (like YOLO – You Only Look Once) can autonomously patrol borders, track targets, and even engage them based on pre-defined rules of engagement, reducing risk to human operators. Examples include systems like Israel’s Harpy loitering munition and the U.S. Replicator Initiative, which focuses on deploying large numbers of low-cost, autonomous AI-powered drones for tasks like swarm-based monitoring.

- Enhanced Target Precision: Embedded AI chips in advanced weapon systems, such as guided missiles or targeting pods on aircraft like the F-35 (using systems potentially similar to Gorgon Stare concepts), utilize sophisticated algorithms like reinforcement learning and Kalman filters. These systems continuously refine trajectory calculations and guidance based on real-time sensor feedback, significantly increasing accuracy and minimizing collateral damage.

- Cybersecurity Defense: The battlefield extends to cyberspace. AI-hardware hybrid systems are being developed to protect critical defense networks. Specialized servers running AI algorithms like Isolation Forest can analyze network traffic patterns in real-time to detect and block sophisticated zero-day attacks (previously unseen exploits) within milliseconds, far exceeding human response times. DARPA has explored concepts like AI “hacker bots” designed for automated cyber defense.

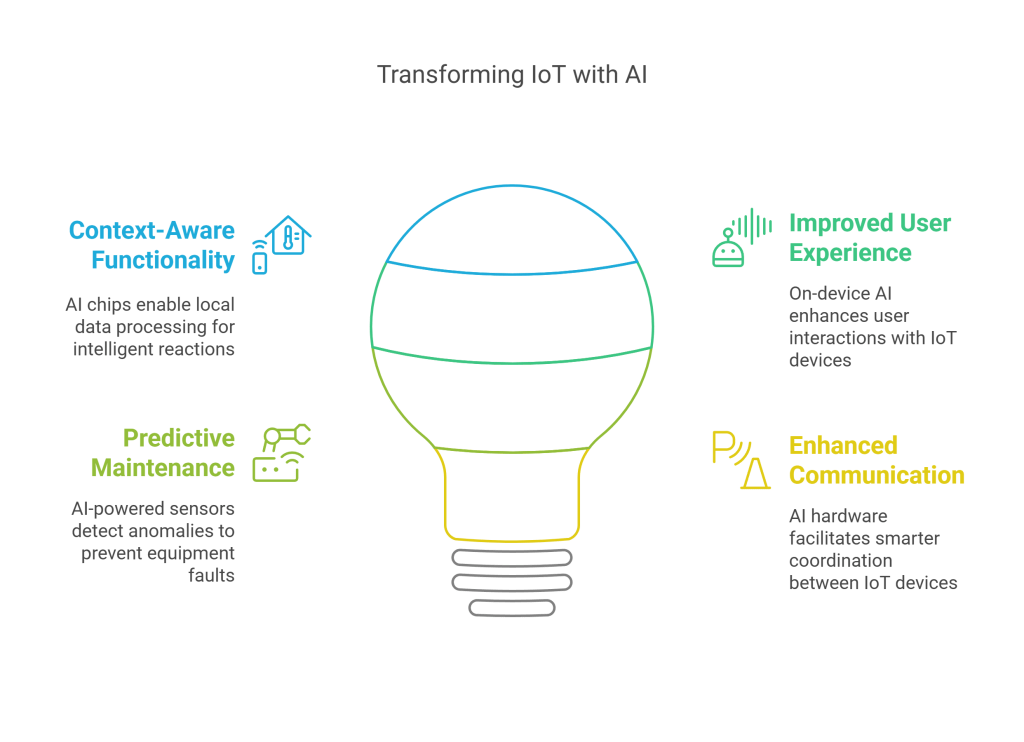

2. AI in IoT Devices: Creating Smarter, More Responsive Environments

The Internet of Things (IoT) – the vast network of connected devices – becomes significantly more powerful when infused with edge AI capabilities.

- Context-Aware Functionality: AI chips like Google’s Edge TPU allow IoT sensors to process environmental data locally, enabling them to understand their context and react intelligently without constant cloud communication. A prime example is the Nest Thermostat, which uses embedded machine learning (specifically reinforcement learning) to learn user preferences and occupancy patterns, automatically adjusting heating and cooling schedules for optimal comfort and energy savings.

- Improved User Experience (UX): On-device AI processing enhances how users interact with IoT devices. Amazon’s Echo smart speakers utilize the AZ1 Neural Edge processor to run Natural Language Processing (NLP) models locally, enabling faster responses to voice commands and more personalized interactions. Similarly, smart lighting systems like Philips Hue can learn user routines and preferences, potentially using edge-based collaborative filtering algorithms to automate lighting scenes without explicit programming.

- Predictive Maintenance and Anomaly Detection: In industrial IoT (IIoT), AI-powered edge gateways and sensors play a crucial role in predictive maintenance. Siemens’ industrial sensors, for example, can use embedded autoencoder algorithms to analyze vibration, temperature, or acoustic data from factory machinery in real-time, flagging subtle anomalies that indicate potential equipment faults before they cause costly downtime. Similar technology is used in wind turbines, where on-device AI analyzes sensor data to predict component failures.

- Enhanced Communication and Coordination: AI hardware facilitates smarter communication and coordination between IoT devices. NVIDIA’s Jetson modules, often used in edge AI applications, can employ techniques like federated learning to allow devices to collaboratively train models without sharing raw sensitive data. In smart city initiatives, like those implemented in Barcelona, AI-hardware grids analyze traffic flow data from numerous sensors and intelligently synchronize traffic lights across the city, demonstrably reducing congestion and improving urban mobility.
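The federated learning idea mentioned above can be illustrated with the aggregation step of federated averaging: each device trains locally and reports only its model weights and sample count, and a coordinator combines them into a weighted mean. This is a minimal sketch with hypothetical names and toy numbers. It leaves out local training, secure aggregation, and communication, and it is not the API of any product named in this article.

```python
def federated_average(client_updates):
    """Combine (weights, num_samples) pairs into one sample-weighted average.

    Only model weights and sample counts are shared; raw data stays on each device.
    """
    total_samples = sum(n for _, n in client_updates)
    if total_samples == 0:
        raise ValueError("no training samples reported")
    dim = len(client_updates[0][0])
    averaged = [0.0] * dim
    for weights, n in client_updates:
        if len(weights) != dim:
            raise ValueError("all clients must report the same number of weights")
        for i, w in enumerate(weights):
            averaged[i] += w * n / total_samples
    return averaged


# Two hypothetical devices: the second saw three times as much data.
updates = [([1.0, 2.0], 10), ([3.0, 4.0], 30)]
global_weights = federated_average(updates)
print(global_weights)
```

Weighting by sample count means devices that have seen more data pull the shared model further toward their local result.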

3. AI in Edge Computing: Processing Power Where It’s Needed Most

Edge computing, fundamentally enabled by AI-powered hardware, involves processing data closer to where it is generated, rather than relying solely on centralized cloud infrastructure. This offers distinct advantages:

- Real-Time Processing: For applications where latency is critical, edge computing is indispensable. Embedded AI chips running algorithms like CNNs can make split-second decisions based on local sensor input. Autonomous vehicles are a key example, needing to process LiDAR, radar, and camera data instantly on board to detect obstacles, pedestrians, and other vehicles to navigate safely and avoid collisions.

- Reduced Bandwidth Usage: Transmitting raw data from potentially thousands or millions of edge devices to the cloud consumes enormous bandwidth and can be costly. On-device AI, powered by efficient hardware like Intel’s Movidius Vision Processing Units (VPUs), can perform initial data filtering and analysis locally. Techniques like model pruning help reduce the computational load. For example, a smart security camera network can analyze video streams locally and only send footage to the cloud when the AI detects a specific event of interest (e.g., an intruder), significantly reducing data transmission needs.

- Improved Privacy and Security: Processing sensitive data locally on the device enhances privacy and security. Google’s Coral Edge TPUs, for instance, are designed to facilitate on-device AI, including federated learning approaches where models are trained across devices without exchanging raw user data. Medical wearables can analyze patient vital signs (heart rate, ECG) directly on the device, providing immediate insights or alerts while minimizing the need to transmit potentially sensitive health information to the cloud.

- Offline Functionality: Many edge devices operate in environments with intermittent or non-existent internet connectivity. AI accelerators, such as those from Qualcomm, enable devices like autonomous drones to function effectively even when offline. Agricultural drones, for example, can use edge-based machine learning models running on onboard hardware to analyze multispectral imagery of crops, identify areas of stress or disease, and make decisions about targeted treatments, all while flying over remote fields far from network coverage.
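The bandwidth saving from event-triggered upload, as in the security camera example above, comes down to simple filtering and arithmetic. In the sketch below the detection scores are hypothetical stand-ins for the output of an on-device model, and the threshold is an arbitrary illustrative value.

```python
def frames_to_upload(detection_scores, threshold=0.8):
    """Return indices of frames whose on-device detection score meets the threshold."""
    return [i for i, score in enumerate(detection_scores) if score >= threshold]


def bandwidth_saved(total_frames, uploaded_frames):
    """Fraction of frames that never leave the device."""
    if total_frames == 0:
        return 0.0
    return 1.0 - uploaded_frames / total_frames


# Hypothetical per-frame scores from an on-device person detector.
scores = [0.05, 0.10, 0.92, 0.88, 0.12, 0.03, 0.07, 0.95, 0.02, 0.01]
selected = frames_to_upload(scores)
saved = bandwidth_saved(len(scores), len(selected))
print("frames sent to the cloud:", selected)
print(f"share of frames kept local: {saved:.0%}")
```

In practice a system would likely also upload a few frames around each event for context, so the real saving depends on how often events occur and how much surrounding footage is kept.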

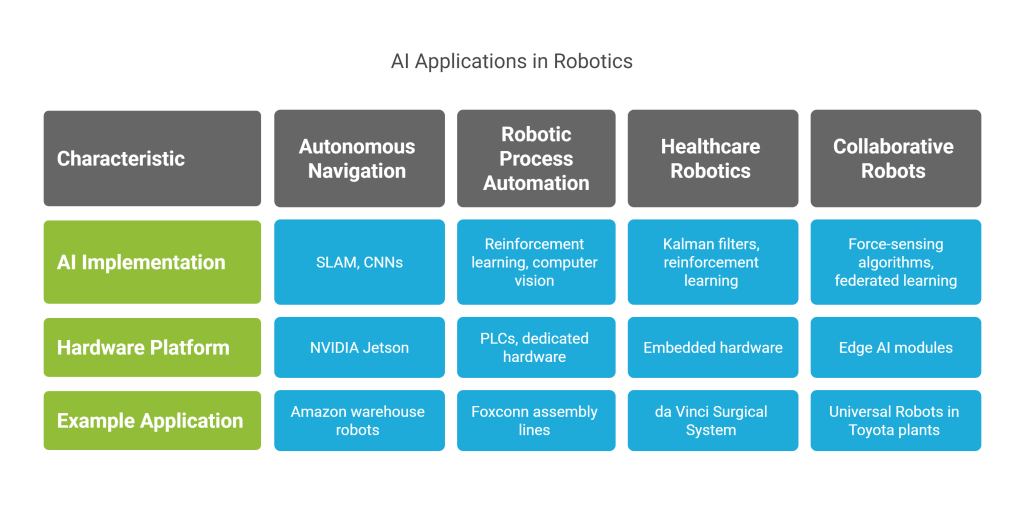

4. AI and Robotics: Enabling Greater Autonomy and Collaboration

AI-powered hardware is revolutionizing robotics, moving beyond pre-programmed automation towards machines that can perceive, reason, learn, and interact with the physical world in sophisticated ways.

- Autonomous Navigation and Perception: Robots operating in dynamic environments require advanced navigation capabilities. AI chips like the NVIDIA Jetson platform are commonly used to run complex algorithms such as SLAM (Simultaneous Localization and Mapping) alongside deep learning models (CNNs) for object recognition and obstacle avoidance. Amazon’s warehouse robots (e.g., Proteus) utilize real-time LiDAR data processed by onboard AI to navigate complex, constantly changing warehouse floors safely and efficiently alongside human workers.

- Industrial Robotics and Manufacturing: In manufacturing, AI enhances traditional automation. Programmable Logic Controllers (PLCs) from companies like Siemens are integrating reinforcement learning capabilities, allowing assembly lines to dynamically optimize their processes for efficiency and throughput. Robotic arms, like those used by Foxconn, leverage powerful computer vision algorithms (e.g., YOLOv7) running on dedicated hardware to perform intricate tasks like sorting electronic components at speeds significantly faster than human capabilities.

- Healthcare Robotics: AI is bringing unprecedented precision and capability to medical robotics. Advanced surgical robots, such as the da Vinci Surgical System, utilize AI algorithms, potentially including Kalman filters for motion smoothing and reinforcement learning for optimizing tool movements, running on embedded hardware. These AI-guided tools enhance surgeon control, reduce tremors, and can lead to improved patient outcomes, with studies suggesting significant reductions in human error rates for certain procedures like prostate surgeries.

- Collaborative Robots (Cobots): The next generation of robots is designed to work safely alongside humans. Cobots, like those from Universal Robots, integrate sophisticated force-sensing algorithms with edge AI modules (potentially leveraging platforms like Microsoft Azure IoT Edge). This allows them to detect unexpected contact and stop or adjust their motion instantly. In automotive plants like Toyota’s, cobots might use federated learning techniques to continuously adapt their movements and behaviors based on interactions with human co-workers, improving both safety and collaborative efficiency.
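The motion smoothing attributed to Kalman filters above can be sketched in one dimension. This is a textbook scalar filter with a constant-position model and hypothetical noise settings, intended only to show the predict and update steps. It is not the control software of any robot named in this article.

```python
def kalman_smooth(measurements, process_var=1e-3, measurement_var=0.25):
    """Scalar Kalman filter assuming the true position changes slowly."""
    if not measurements:
        return []
    estimate = measurements[0]
    error_var = 1.0  # initial uncertainty about the estimate
    smoothed = [estimate]
    for z in measurements[1:]:
        # Predict: the state is assumed unchanged, but uncertainty grows.
        error_var += process_var
        # Update: blend prediction and measurement according to their uncertainty.
        gain = error_var / (error_var + measurement_var)
        estimate += gain * (z - estimate)
        error_var *= 1.0 - gain
        smoothed.append(estimate)
    return smoothed


# Hypothetical jittery position readings (for example, millimetres).
readings = [10.0, 10.4, 9.7, 10.3, 9.8, 10.1]
filtered = kalman_smooth(readings)
print([round(x, 3) for x in filtered])
```

A larger `measurement_var` makes the filter trust the sensor less and smooth more aggressively, at the cost of reacting more slowly to genuine movement.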

The Horizon Beckons: Future Trends in Embedded AI Hardware

The field of embedded AI is evolving rapidly, driven by continuous innovation in algorithms, software, and hardware. Key future trends include:

- More Powerful and Efficient Edge AI Chips: Expect continued improvements in the performance-per-watt of NPUs, TPUs, and other AI accelerators. Hardware will become even more specialized for specific AI tasks (e.g., vision, speech). Architectures like RISC-V are gaining traction for customizable, open-standard embedded processors.

- TinyML and Ultra-Low-Power AI: The push towards running AI on extremely resource-constrained devices (like simple sensors or microcontrollers) is driving the field of TinyML. This involves developing highly optimized models (e.g., MobileNetV3, YOLO-Nano) and hardware capable of performing meaningful AI tasks using milliwatts of power or less.

- Federated Learning and On-Device Learning: Techniques that allow AI models to learn and adapt directly on the edge device or collaboratively across devices without sending raw data to the cloud will become more prevalent. This enhances privacy and enables continuous improvement of models based on real-world usage.

- Hybrid AI Models and Neuromorphic Computing: Combining traditional AI accelerators with novel approaches like neuromorphic computing (inspired by the human brain’s structure and efficiency) could lead to breakthroughs in low-power, adaptive AI processing at the edge. Initial integrations into edge devices for self-learning capabilities are anticipated. Quantum computing, while further out, may also influence future embedded systems, particularly in areas like cryptography and optimization.

- Enhanced Security Hardware: As security threats evolve, hardware-based security features (secure enclaves, hardware encryption, physically unclonable functions – PUFs) will become standard in embedded AI systems. Quantum-resistant cryptography will also become increasingly important. AI itself will be used more extensively for real-time threat detection directly on the device (IDS/IPS).

- Standardization and Open Source: Efforts to standardize AI model formats (like ONNX) and the increasing adoption of open-source hardware (RISC-V) and software (Embedded Linux) will likely accelerate development and interoperability in the embedded AI ecosystem.

- AI for Hardware Design: AI itself is being used to optimize the design of next-generation AI chips, creating a virtuous cycle of improvement.
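For the TinyML trend above, a quick back-of-the-envelope check shows why weight precision matters on microcontrollers. The parameter count and flash sizes below are hypothetical, and the estimate covers weights only, ignoring activations, runtime code, and any overhead from the model file format.

```python
BYTES_PER_WEIGHT = {"float32": 4, "float16": 2, "int8": 1}


def model_size_kib(num_parameters, dtype):
    """Storage needed for the weights alone, in KiB."""
    return num_parameters * BYTES_PER_WEIGHT[dtype] / 1024


def fits_in_flash(num_parameters, dtype, flash_kib, reserved_kib=0):
    """Check whether the weights fit after reserving space for firmware."""
    return model_size_kib(num_parameters, dtype) <= flash_kib - reserved_kib


# Hypothetical device: 512 KiB of flash, 128 KiB reserved for firmware.
params = 250_000
for dtype in ("float32", "float16", "int8"):
    size = model_size_kib(params, dtype)
    ok = fits_in_flash(params, dtype, flash_kib=512, reserved_kib=128)
    print(f"{dtype}: {size:.1f} KiB, fits: {ok}")
```

With these made-up numbers the 32-bit and 16-bit versions exceed the available flash, while the 8-bit version fits with room to spare.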

The Moral Compass: Ethical Considerations in Embedded AI

As AI becomes deeply embedded in devices that interact with us and make decisions affecting our lives, careful consideration of the ethical implications is paramount.

- Privacy: Embedded devices, equipped with sensors and AI, can collect vast amounts of potentially sensitive data about individuals and their environments. While edge processing can enhance privacy by keeping data local, the potential for misuse remains. Ensuring data minimization, anonymization where possible, transparency about data collection, and robust security are critical. Regulations like GDPR emphasize user consent and data protection.

- Bias and Fairness: AI models can inherit biases present in their training data or algorithms, leading to unfair or discriminatory outcomes. Embedded AI systems making decisions in areas like facial recognition, hiring (if used in embedded recruitment tools), or even autonomous vehicle interactions must be rigorously tested for bias across different demographic groups. Ensuring fairness requires careful data curation, algorithm design, and ongoing audits.

- Accountability and Responsibility: Determining who is responsible when an embedded AI system makes an error or causes harm can be complex. Is it the developer, the manufacturer, the owner, or the AI itself? Establishing clear lines of accountability and mechanisms for redress is essential, especially for critical applications in healthcare, transportation, and defense.

- Transparency and Explainability: Many deep learning models operate as “black boxes,” making it difficult to understand why they reach a particular decision. Lack of transparency hinders trust and makes it hard to debug errors or identify biases. While challenging for complex models, developing interpretable AI techniques (like LIME or decision trees where applicable) and standards requiring explainability are important steps.

- Security and Reliability: Ensuring the security and reliability of embedded AI is an ethical imperative, especially when system failures could lead to physical harm or significant disruption (e.g., autonomous vehicles, medical devices, critical infrastructure control). Robust testing, fail-safes, and secure development practices are non-negotiable.

- Autonomy and Human Control: How much autonomy should be granted to embedded AI systems, particularly in critical decision-making contexts like defense (autonomous weapons) or healthcare? Striking the right balance between autonomous capabilities and meaningful human oversight is a crucial ethical debate.

- Societal Impact: The widespread adoption of embedded AI will have broad societal consequences, including potential job displacement due to automation, changes in human interaction, and the potential to exacerbate existing inequalities if benefits are not distributed equitably.

Addressing these ethical considerations requires a multi-stakeholder approach involving developers, manufacturers, policymakers, ethicists, and the public to establish guidelines, regulations (like the EU AI Act), and best practices for responsible AI development and deployment.
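One simple starting point for the bias testing mentioned above is to compare a model's accuracy across demographic groups. The sketch below uses hypothetical group labels and toy records. A real audit would use far more data and additional metrics such as false positive and false negative rates per group.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """records: iterable of (group, predicted_label, true_label) tuples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        if predicted == actual:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


def max_accuracy_gap(per_group):
    """Largest difference in accuracy between any two groups."""
    values = list(per_group.values())
    return max(values) - min(values)


# Toy evaluation records for two hypothetical groups, "A" and "B".
records = [
    ("A", 1, 1), ("A", 0, 0), ("A", 1, 1), ("A", 0, 1),
    ("B", 1, 0), ("B", 0, 0), ("B", 1, 1), ("B", 0, 1),
]
per_group = accuracy_by_group(records)
print(per_group, "gap:", max_accuracy_gap(per_group))
```

A large gap does not by itself establish the cause, but it flags where data curation or model changes deserve a closer look.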

Conclusion: The Embedded Intelligence Era

The convergence of Artificial Intelligence and specialized hardware marks the dawn of the embedded intelligence era. Moving AI from the cloud to the edge – directly onto the devices that interact with our physical world – is unlocking capabilities previously confined to science fiction. AI-optimized processors provide the necessary computational power and energy efficiency, while sophisticated integration techniques embed this intelligence seamlessly into everything from consumer electronics to critical infrastructure.

The impact is broad and deep: defense systems gain unprecedented situational awareness and autonomy; IoT devices become truly smart and context-aware, enhancing our daily lives and industrial processes; edge computing delivers real-time insights and robust offline functionality; and robotics evolves towards more capable, adaptable, and collaborative machines.

While challenges remain in areas like standardization, security, and ethical considerations, the trajectory is clear. AI-powered hardware is not just a niche technology; it is rapidly becoming a foundational element of modern innovation. As chips become more powerful, algorithms more refined, and integration methods more sophisticated, we can expect embedded AI to continue its transformative journey, reshaping industries and redefining our relationship with technology in profound ways. The intelligence is no longer just out there; it’s embedded within the very fabric of our world.
