In today’s rapidly evolving AI landscape, large language models (LLMs) have become powerful building blocks for creating intelligent agents that can solve complex problems, automate workflows, and enhance productivity. This comprehensive guide explores the essential patterns for designing and implementing effective LLM-based AI agents, covering everything from architecture to security considerations.

Understanding AI Agents: The New Frontier of Automation

AI agents extend LLM applications beyond conversation. Unlike simple LLM-powered chatbots, which only generate replies, agents can carry out multi-step tasks, make decisions, and interact with both digital environments and other agents to achieve specific goals. By combining the reasoning capabilities of LLMs with the ability to execute actions, these agents are changing how we approach automation and problem-solving.

Functional Patterns for LLM-Based Agents

Agent Architecture Patterns

Solo Agent Architecture

The solo agent pattern represents the simplest form of LLM-based agents. In this architecture, a single agent handles the entire workflow from start to finish. This approach works exceptionally well for straightforward tasks that don’t require complex decision trees or specialized knowledge across multiple domains.

Key characteristics:

- Single point of control and execution

- Streamlined communication flow

- Reduced complexity in implementation

- Best suited for well-defined tasks with clear parameters

Implementation example: A solo agent for email management might analyze incoming messages, categorize them by priority, draft responses for approval, and schedule follow-ups—all within a single agent instance.

Agent-to-Agent Handoff

As tasks become more complex, the agent-to-agent handoff pattern offers greater flexibility and specialization. This pattern involves multiple agents passing control between them as the task progresses through different stages, similar to a relay race.

Key characteristics:

- Specialized agents for different task components

- Clear handoff protocols between agents

- Multi-turn conversations between cooperating agents

- Enhanced modularity and maintainability

Implementation example: In a customer service scenario, an initial triage agent might handle the first interaction, then pass the conversation to a technical support agent for specific troubleshooting, before handing off to a satisfaction follow-up agent to conclude the interaction.
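The handoff mechanics can be sketched in Python as follows. This assumes each agent is a plain function that appends to a shared conversation and returns the name of the next agent, or None to end the interaction. The agent names `triage`, `tech_support`, and `follow_up` are hypothetical; in a real system each would wrap its own prompt and LLM call.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Conversation:
    messages: list[str] = field(default_factory=list)


# An agent reads and extends the shared conversation, then names the next agent
# to hand off to (or returns None when the interaction is complete).
Agent = Callable[[Conversation], Optional[str]]


def triage(conv: Conversation) -> Optional[str]:
    conv.messages.append("triage: routing to technical support")
    return "tech_support"


def tech_support(conv: Conversation) -> Optional[str]:
    conv.messages.append("tech_support: issue resolved")
    return "follow_up"


def follow_up(conv: Conversation) -> Optional[str]:
    conv.messages.append("follow_up: satisfaction survey sent")
    return None


AGENTS: dict[str, Agent] = {
    "triage": triage,
    "tech_support": tech_support,
    "follow_up": follow_up,
}


def run_handoffs(start: str, conv: Conversation, max_handoffs: int = 10) -> Conversation:
    current: Optional[str] = start
    hops = 0
    while current is not None:
        if hops >= max_handoffs:
            raise RuntimeError("handoff limit exceeded")
        current = AGENTS[current](conv)  # pass control (and the full context) onward
        hops += 1
    return conv
```

The `max_handoffs` cap is a design choice that keeps a misrouted conversation from bouncing between agents indefinitely.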

Hierarchical Agent Structure

The hierarchical agent pattern introduces a layered approach where a controller agent orchestrates the activities of multiple specialized sub-agents. This pattern excels in complex scenarios requiring coordination across diverse capabilities.

Key characteristics:

- Modular sub-agents handling specialized subtasks

- Independent operation with coordination when necessary

- Scalable architecture for complex workflows

- Centralized oversight with distributed execution

Implementation example: In a research assistant application, a primary agent might coordinate between sub-agents specialized in literature review, data analysis, visualization, and report generation, calling each as needed while maintaining the overall project context.
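A minimal sketch of the controller/sub-agent split, assuming the controller is given an explicit list of sub-agents to call. The sub-agent functions are hypothetical placeholders; in practice the controller would often ask an LLM which sub-agent to invoke next rather than follow a fixed list.

```python
from typing import Callable

# Hypothetical sub-agents. Each reads the shared project context and returns
# its output; real sub-agents would make their own LLM calls.
SubAgent = Callable[[dict], str]


def literature_review(ctx: dict) -> str:
    return f"summary of papers on {ctx['topic']}"


def data_analysis(ctx: dict) -> str:
    return f"analysis using {len(ctx['results'])} prior results"


def report_generation(ctx: dict) -> str:
    return "report: " + "; ".join(ctx["results"])


class Controller:
    """Primary agent that owns the project context and delegates to sub-agents."""

    def __init__(self, sub_agents: dict[str, SubAgent]) -> None:
        self.sub_agents = sub_agents

    def run(self, topic: str, plan: list[str]) -> dict:
        ctx: dict = {"topic": topic, "results": []}
        for name in plan:
            # Each sub-agent works on its own subtask; the controller collects
            # the output so later sub-agents can build on earlier results.
            ctx["results"].append(self.sub_agents[name](ctx))
        return ctx
```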

Agent Actions Patterns

Function Calling

The function calling pattern enables agents to trigger external functions or tools using structured syntax. This capability allows LLM-based agents to bridge the gap between natural language understanding and programmatic execution.

Key characteristics:

- Direct calls to external functions/APIs

- Structured parameter passing

- Clear separation between reasoning and execution

- Enhanced capability through tool integration

Implementation example: An agent assisting with travel planning might call functions to check flight availability, compare hotel prices, or retrieve weather forecasts, incorporating this real-time data into its recommendations.
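On the application side, function calling usually comes down to a registry of tools plus a dispatcher that executes the structured call the model emits. The sketch below assumes the model returns JSON shaped like `{"name": ..., "arguments": {...}}`, loosely modeled on common function-calling formats (exact formats differ by provider). Both tools are hypothetical stubs.

```python
import json
from typing import Any, Callable

# Registry of callable tools, keyed by the name the model uses to request them.
TOOLS: dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Decorator that registers a function as a tool under its own name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def check_flight_availability(origin: str, destination: str, date: str) -> dict:
    # Hypothetical stub; a real tool would query an airline or booking API.
    return {"origin": origin, "destination": destination, "date": date, "seats": 4}


@tool
def get_weather_forecast(city: str) -> dict:
    # Hypothetical stub; a real tool would call a weather service.
    return {"city": city, "forecast": "sunny"}


def dispatch(tool_call_json: str) -> Any:
    """Execute a model-emitted call shaped like {"name": ..., "arguments": {...}}."""
    call = json.loads(tool_call_json)
    fn = TOOLS.get(call.get("name", ""))
    if fn is None:
        raise ValueError(f"unknown tool: {call.get('name')!r}")
    return fn(**call.get("arguments", {}))
```

The returned value would then be serialized back into the conversation so the model can fold the data into its recommendation.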

Generated Code Execution

Taking function calling a step further, the generated code execution pattern allows agents to write and optionally execute code to solve problems. This dramatically expands an agent’s problem-solving capabilities, particularly for data analysis and computational tasks.

Key characteristics:

- Dynamic code generation based on context

- Support for multiple programming languages

- Runtime execution in sandboxed environments

- Iterative improvement based on execution results

Implementation example: A data analyst agent might generate Python code to clean and visualize a dataset, showing the transformation steps and explaining insights discovered through the analysis.
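One way to run model-generated Python is in a separate interpreter process with a timeout, returning stderr so the model can fix its own code on the next iteration. This is a sketch only: a subprocess with a timeout provides isolation, not a real sandbox, and production systems would add OS-level confinement (containers, no network, resource limits).

```python
import subprocess
import sys


def run_generated_code(code: str, timeout_s: float = 5.0) -> tuple[bool, str]:
    """Run untrusted generated code in a child interpreter; return (ok, output)."""
    try:
        proc = subprocess.run(
            [sys.executable, "-I", "-c", code],  # -I: isolated mode (ignores env vars, user site-packages)
            capture_output=True,
            text=True,
            timeout=timeout_s,
        )
    except subprocess.TimeoutExpired:
        return False, "timed out"
    if proc.returncode != 0:
        return False, proc.stderr  # fed back to the model for another attempt
    return True, proc.stdout
```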

API Tool Use

The API tool use pattern focuses on connecting agents to external services through well-defined API interfaces. This pattern is crucial for agents that need to retrieve or manipulate data from existing services.

Key characteristics:

- Structured prompts for API interactions

- Authentication and permission handling

- Response processing and error management

- Integration with diverse external systems

Implementation example: A productivity agent might connect to calendar APIs to schedule meetings, email APIs to send notifications, and project management APIs to update task statuses, all while maintaining context across these different systems.

Agent Process Patterns

Prescribed Plan Execution

Some agent scenarios benefit from following a predetermined, step-by-step plan. The prescribed plan pattern works well when the workflow is well-understood and relatively stable.

Key characteristics:

- Fixed task sequence defined in advance

- Clear checkpoints and progress tracking

- Predictable behavior and outputs

- Simplified monitoring and debugging

Implementation example: An onboarding agent might follow a prescribed sequence to collect new employee information, configure accounts, schedule training sessions, and distribute welcome materials.
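A prescribed plan can be as simple as an ordered list of steps with a completion log that doubles as a checkpoint. The step functions below are hypothetical placeholders for the onboarding actions described above.

```python
from typing import Callable

Step = Callable[[dict], None]


# Hypothetical onboarding steps; each updates the shared employee record.
def collect_info(rec: dict) -> None:
    rec["profile"] = {"name": rec["name"]}


def configure_accounts(rec: dict) -> None:
    rec["accounts"] = ["email", "sso"]


def schedule_training(rec: dict) -> None:
    rec["training"] = "week 1"


def send_welcome(rec: dict) -> None:
    rec["welcome_sent"] = True


# The order is fixed in advance; the agent does not decide what comes next.
PLAN: list[tuple[str, Step]] = [
    ("collect_info", collect_info),
    ("configure_accounts", configure_accounts),
    ("schedule_training", schedule_training),
    ("send_welcome", send_welcome),
]


def execute_plan(rec: dict) -> dict:
    completed = rec.setdefault("completed", [])
    for name, step in PLAN:
        if name in completed:
            continue  # checkpoint: resume after a failure without redoing finished steps
        step(rec)
        completed.append(name)
    return rec
```

Because the sequence is static, the `completed` list is also a natural place to hook progress monitoring.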

Dynamic Plan Generation

More flexible than prescribed plans, the dynamic plan generation pattern allows agents to create execution strategies based on specific goals and contextual factors. This approach is particularly valuable for autonomous agents tackling varied scenarios.

Key characteristics:

- Goal-oriented planning capabilities

- Contextual adaptation of execution steps

- Replanning when facing obstacles

- Balance between structure and flexibility

Implementation example: A project management agent might dynamically generate plans for completing deliverables based on available resources, deadlines, dependencies, and team member capabilities.

Multi-hop Question Answering (MHQA)

The MHQA pattern combines reasoning with sequential actions to address complex queries that require multiple steps of information gathering and analysis.

Key characteristics:

- Breaking complex questions into sub-questions

- Sequential information gathering

- Integration of intermediate findings

- Comprehensive answer synthesis

Implementation example: A research agent might decompose “How would climate change affect agriculture in the Midwest by 2050?” into sub-queries about climate projections, agricultural dependencies, and regional factors before synthesizing a complete answer.

Collaborating Agents

When multiple agents actively communicate to solve tasks together, they follow the collaborating agents pattern. This approach leverages collective intelligence and specialization.

Key characteristics:

- Active inter-agent communication

- Shared context and goals

- Complementary capabilities

- Parallel or sequential collaboration models

Implementation example: In a software development scenario, collaborating agents specialized in requirements analysis, system design, coding standards, and testing might work together to create a comprehensive solution.

Human-in-the-Loop

The human-in-the-loop pattern incorporates human judgment at critical decision points, combining AI efficiency with human expertise and oversight.

Key characteristics:

- Clear handoff points between agent and human

- Contextual information preservation during transfers

- Verification workflows and approval processes

- Progressive automation with learning

Implementation example: A content creation agent might draft articles based on outlines, pause for human review and feedback at key milestones, and then refine the content based on the guidance received.
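A minimal sketch of a review gate, assuming a `reviewer` callback that stands in for a real review UI or ticketing step and returns `(approved, feedback)`. The `draft` function is a hypothetical placeholder for the agent's LLM-backed drafting step.

```python
from typing import Callable

# Returns (approved, feedback); a stand-in for a human review step.
Reviewer = Callable[[str], tuple[bool, str]]


def draft(outline: str, feedback: list[str]) -> str:
    # Hypothetical drafting step; a real agent would prompt an LLM with the feedback.
    text = f"Article on {outline}"
    for note in feedback:
        text += f" [revised: {note}]"
    return text


def write_with_review(outline: str, reviewer: Reviewer, max_rounds: int = 3) -> str:
    feedback: list[str] = []
    for _ in range(max_rounds):
        text = draft(outline, feedback)
        approved, note = reviewer(text)  # pause point: control passes to the human
        if approved:
            return text
        feedback.append(note)  # preserve the reviewer's guidance for the next draft
    raise RuntimeError("not approved within max_rounds; escalate")
```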

Orchestrated Agents

Similar to the hierarchical architecture but focused on process flow, the orchestrated agents pattern uses controller agents to delegate and coordinate tasks across a team of specialized agents.

Key characteristics:

- Centralized coordination with distributed execution

- Task delegation based on agent capabilities

- Progress monitoring and intervention when needed

- Complex workflow management

Implementation example: An executive assistant agent might orchestrate calendar management, travel booking, email triage, and meeting preparation agents, maintaining overall awareness while leveraging specialized capabilities.

LLM Interaction Patterns

ReAct (Reason + Act) Loop

The ReAct pattern implements a cyclical process of reasoning followed by action, allowing agents to think through problems step-by-step while interacting with tools or environments.

Key characteristics:

- Alternating reasoning and action phases

- Explicit thought processes

- Tool utilization based on reasoning

- Progressive problem-solving

Implementation example: A diagnostic agent might reason about potential causes of a system failure, act by running a diagnostic test, reason about the results, and act again with more targeted troubleshooting steps.
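The loop can be sketched as below, using a simplified Thought/Action/Observation text format modeled on the one popularized by the ReAct paper, with `finish[answer]` ending the loop. The `scripted_llm` helper is a hypothetical stand-in that replays canned replies in place of a real model.

```python
import re
from typing import Callable

ACTION_RE = re.compile(r"Action:\s*(\w+)\[(.*)\]")


def react(question: str,
          llm: Callable[[str], str],
          tools: dict[str, Callable[[str], str]],
          max_steps: int = 5) -> str:
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        # Reason: the model sees the whole transcript and emits a Thought plus an Action.
        reply = llm(transcript)
        transcript += reply + "\n"
        match = ACTION_RE.search(reply)
        if match is None:
            raise ValueError(f"no parseable action in reply: {reply!r}")
        name, arg = match.group(1), match.group(2)
        if name == "finish":
            return arg
        # Act: run the chosen tool and append its result as an Observation.
        transcript += f"Observation: {tools[name](arg)}\n"
    raise RuntimeError("max_steps reached without a final answer")


def scripted_llm(turns: list[str]) -> Callable[[str], str]:
    """Stand-in for a real model: returns pre-written replies in order."""
    replies = iter(turns)
    return lambda transcript: next(replies)
```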

Chain of Thought

The chain of thought pattern encourages step-by-step reasoning within LLM responses, which tends to produce more reliable results on complex problems that benefit from explicit reasoning.

Key characteristics:

- Explicit intermediate reasoning steps

- Logical progression of thoughts

- Transparency in decision-making

- Reduced reasoning errors

Implementation example: A math tutor agent might work through algebra problems by explicitly showing each step of the solution process, explaining the reasoning behind each transformation.

Structured Response

For consistent and parseable outputs, the structured response pattern ensures agents deliver information in predefined formats optimized for downstream processing.

Key characteristics:

- Consistent output formatting (JSON, tables, etc.)

- Schema adherence for processing

- Information organization for clarity

- Balanced structure and readability

Implementation example: A financial analysis agent might always present investment recommendations in a structured format with clearly delineated sections for risk assessment, expected returns, and market conditions.
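Structured output is only useful if it is validated before downstream code relies on it. The sketch below parses a JSON reply and checks it against a small hand-written schema, raising `ValueError` so the caller can re-prompt the model. The field names are hypothetical, chosen to mirror the sections in the example above.

```python
import json
from dataclasses import dataclass


@dataclass
class Recommendation:
    ticker: str
    risk_assessment: str
    expected_return_pct: float
    market_conditions: str


# Expected JSON fields and their accepted Python types after json.loads.
SCHEMA = {
    "ticker": str,
    "risk_assessment": str,
    "expected_return_pct": (int, float),
    "market_conditions": str,
}


def parse_recommendation(raw: str) -> Recommendation:
    """Validate a model reply against SCHEMA; raise ValueError so the caller can re-prompt."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"reply is not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("reply must be a JSON object")
    for key, expected in SCHEMA.items():
        if key not in data:
            raise ValueError(f"missing field: {key}")
        # bool is a subclass of int in Python, so reject it explicitly.
        if not isinstance(data[key], expected) or isinstance(data[key], bool):
            raise ValueError(f"wrong type for field: {key}")
    return Recommendation(
        ticker=data["ticker"],
        risk_assessment=data["risk_assessment"],
        expected_return_pct=float(data["expected_return_pct"]),
        market_conditions=data["market_conditions"],
    )
```

For larger schemas, a dedicated validator (for example one based on JSON Schema) scales better than hand-written checks.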

Reflexion

The reflexion pattern enables agents to evaluate their own outputs and improve through self-reflection, creating a feedback loop that enhances performance over time.

Key characteristics:

- Self-evaluation of previous responses

- Identification of weaknesses or errors

- Progressive improvement strategies

- Meta-cognitive capabilities

Implementation example: A writing assistant agent might review its previously generated content, identify areas where clarity could be improved, and incorporate these insights into subsequent drafts.
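The core of the loop is that critiques from failed attempts are kept and fed into later attempts. In the Reflexion approach those critiques are themselves written by the LLM; here `generate` and `evaluate` are hypothetical callables standing in for the model calls.

```python
from typing import Callable

# generate(task, reflections) -> attempt; evaluate(attempt) -> (passed, critique).
Generator = Callable[[str, list[str]], str]
Evaluator = Callable[[str], tuple[bool, str]]


def reflexion_loop(task: str, generate: Generator, evaluate: Evaluator,
                   max_trials: int = 3) -> tuple[str, list[str]]:
    reflections: list[str] = []  # self-critiques carried into every later attempt
    attempt = ""
    for _ in range(max_trials):
        attempt = generate(task, reflections)
        passed, critique = evaluate(attempt)
        if passed:
            break
        reflections.append(critique)
    # Returns the last attempt, which may still fail if max_trials ran out.
    return attempt, reflections
```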

Retry Limits

To prevent infinite loops or excessive resource consumption, the retry limits pattern establishes boundaries on repetitive attempts to solve problems or generate responses.

Key characteristics:

- Defined maximum retry attempts

- Escalation paths when limits are reached

- Prevention of runaway processes

- Resource utilization control

Implementation example: A translation agent might attempt to refine a difficult passage up to three times before flagging it for human review rather than entering an endless refinement loop.
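A bounded retry with an escalation flag, sketched under the assumption that `refine` and `is_acceptable` are supplied by the agent (both are hypothetical callables here, e.g. an LLM rewrite and a quality check).

```python
from typing import Callable


def refine_with_limit(passage: str,
                      refine: Callable[[str], str],
                      is_acceptable: Callable[[str], bool],
                      max_attempts: int = 3) -> tuple[str, bool]:
    """Return (last_candidate, needs_human_review)."""
    candidate = passage
    for _ in range(max_attempts):
        candidate = refine(candidate)
        if is_acceptable(candidate):
            return candidate, False
    # Limit reached: stop and escalate rather than refining forever.
    return candidate, True
```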

Agent Memory Patterns

Retrieval-Augmented Generation (RAG)

The RAG pattern enhances LLM capabilities by retrieving relevant information from an external knowledge source, typically a vector store, before generating responses, combining the benefits of knowledge bases with generative capabilities.

Key characteristics:

- Vector embedding of knowledge base content

- Similarity-based information retrieval

- Context augmentation before generation

- Dynamic knowledge incorporation

Implementation example: A customer support agent might use RAG to retrieve product specifications, previous customer interactions, and known issues before generating a response to a technical question.
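The retrieve-then-generate flow can be illustrated without any external services. In this sketch a bag-of-words term-count vector stands in for a real embedding model and a sorted list stands in for a vector store; only the shape of the pipeline (embed, rank by similarity, augment the prompt) carries over to production systems.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    # Toy "embedding": term counts. A real system would call an embedding model.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def build_prompt(query: str, docs: list[str], k: int = 2) -> str:
    # Context augmentation: retrieved passages are placed ahead of the question.
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"
```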

Memory Longevity

The memory longevity pattern defines how long different types of information persist in an agent’s memory, balancing comprehensive context with computational efficiency.

Key characteristics:

- Session-based short-term memory

- Persistent long-term memory strategies

- Memory prioritization frameworks

- Database integration for retention

Implementation example: A personal assistant agent might maintain short-term memory of the current conversation, medium-term memory of recent interactions, and long-term memory of key preferences and important dates.
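The three tiers in the example map naturally onto structures with different lifetimes. The sketch below uses a bounded deque for short-term memory, a timestamped dictionary with a TTL for medium-term memory, and a plain dictionary standing in for a long-term database. Sizes and the one-week TTL are illustrative assumptions; the optional `now` parameter exists only to make expiry testable.

```python
import time
from collections import deque
from typing import Optional


class AgentMemory:
    """Three memory tiers with different lifetimes (sizes and TTL are illustrative)."""

    def __init__(self, short_term_turns: int = 10,
                 medium_term_ttl_s: float = 7 * 24 * 3600) -> None:
        # Short-term: the last N turns of the current conversation only.
        self.short_term: deque = deque(maxlen=short_term_turns)
        # Medium-term: recent facts stored with a timestamp and expired after a TTL.
        self.medium_term: dict[str, tuple[float, str]] = {}
        # Long-term: durable preferences and dates; a real agent would persist these.
        self.long_term: dict[str, str] = {}
        self.ttl_s = medium_term_ttl_s

    def add_turn(self, text: str) -> None:
        self.short_term.append(text)  # oldest turn drops off once maxlen is reached

    def remember_recent(self, key: str, value: str, now: Optional[float] = None) -> None:
        self.medium_term[key] = (time.time() if now is None else now, value)

    def recall_recent(self, key: str, now: Optional[float] = None) -> Optional[str]:
        entry = self.medium_term.get(key)
        if entry is None:
            return None
        stored_at, value = entry
        current = time.time() if now is None else now
        if current - stored_at > self.ttl_s:
            del self.medium_term[key]  # expired: forget it
            return None
        return value

    def end_session(self) -> None:
        self.short_term.clear()  # short-term memory does not outlive the session
```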

Memory Scope

Complementing longevity, the memory scope pattern defines what specific types of information an agent remembers, focusing retention on relevant contextual elements.

Key characteristics:

- Selective memory of user preferences

- Task history retention

- Contextual information preservation

- Interaction pattern recognition

Implementation example: A coding assistant agent might remember user code style preferences, previously discussed architectural decisions, and common patterns in the codebase to provide more consistent and contextually relevant suggestions.

Operational Patterns

User-in-the-Loop Evaluation

The user-in-the-loop evaluation pattern actively incorporates user feedback to assess and improve agent performance, creating a human-centered quality control mechanism.

Key characteristics:

- Explicit feedback collection mechanisms

- User rating systems

- Preference incorporation

- Continuous improvement based on feedback

Implementation example: A content recommendation agent might periodically ask users to rate suggestions, provide preference updates, and explicitly indicate which recommendations were most helpful.

LLM-as-a-Judge

When automated assessment at scale is needed, the LLM-as-a-judge pattern employs a separate LLM instance to evaluate the quality and appropriateness of outputs from primary agents.

Key characteristics:

- Independent evaluation LLM

- Defined quality metrics

- Comparative assessment capabilities

- Feedback loop to primary agents

Implementation example: A writing system might use a judge LLM to evaluate different drafts produced by a content generation agent, selecting the most appropriate or suggesting specific improvements.
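A sketch of draft selection with a judge model. The rubric wording and the `Score: <n>` reply format are assumptions enforced through the prompt, and `judge` is a hypothetical callable wrapping the separate evaluation LLM. Parsing and clamping the score guards against replies that drift from the requested format.

```python
import re
from typing import Callable

JUDGE_TEMPLATE = (
    "Rate the draft from 1 to 10 for clarity and accuracy.\n"
    "Reply with 'Score: <n>' followed by one sentence of feedback.\n\n"
    "Draft:\n{draft}"
)


def parse_score(reply: str) -> int:
    match = re.search(r"Score:\s*(\d+)", reply)
    if match is None:
        raise ValueError("judge reply has no score")
    return max(1, min(10, int(match.group(1))))  # clamp to the rubric's range


def pick_best(drafts: list[str], judge: Callable[[str], str]) -> tuple[str, int]:
    scored = [(d, parse_score(judge(JUDGE_TEMPLATE.format(draft=d)))) for d in drafts]
    return max(scored, key=lambda pair: pair[1])
```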

Deterministic Evaluation

For scenarios requiring absolute consistency, the deterministic evaluation pattern applies rule-based or code-based logic to evaluate agent outputs against predefined criteria.

Key characteristics:

- Explicit evaluation rules

- Code-based verification

- Objective pass/fail criteria

- Consistent assessment regardless of context

Implementation example: A financial advisor agent might apply deterministic checks to ensure all investment recommendations comply with regulatory requirements and stay within client-specified risk parameters.
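Deterministic checks are ordinary code, which is what makes them repeatable. The rules below are illustrative only (real regulatory checks would come from a compliance team), and the `InvestmentProposal` fields and 1 to 10 risk scale are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class InvestmentProposal:
    asset: str
    risk_score: int        # hypothetical scale: 1 (low) to 10 (high)
    allocation_pct: float  # share of the portfolio, in percent


def check_proposal(p: InvestmentProposal, client_max_risk: int,
                   restricted_assets: set[str]) -> list[str]:
    """Apply fixed rules; an empty list means the proposal passes."""
    failures: list[str] = []
    if p.risk_score > client_max_risk:
        failures.append(f"risk {p.risk_score} exceeds client limit {client_max_risk}")
    if p.asset in restricted_assets:
        failures.append(f"{p.asset} is on the restricted list")
    if not 0 < p.allocation_pct <= 100:
        failures.append(f"allocation {p.allocation_pct}% is outside (0, 100]")
    return failures
```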

Security & Identity Patterns

Identity Token Propagation

To maintain security across agent interactions, the identity token propagation pattern ensures user authentication and authorization information flows appropriately through agent chains.

Key characteristics:

- Secure identity verification

- Permission propagation across agents

- Authentication context preservation

- Authorization boundary enforcement

Implementation example: In an enterprise environment, agents handling sensitive information might pass authenticated user contexts through each handoff, ensuring appropriate access controls throughout the process.
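The key idea is that the end user's identity travels with the request and is checked by the agent that actually touches the data. In this sketch the scope names are hypothetical, and token validation (signature, expiry, audience) is omitted; that would normally be delegated to the identity provider's library.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UserContext:
    user_id: str
    scopes: frozenset[str]
    token: str  # opaque token from the identity provider; agents pass it on, never mint it


class AuthorizationError(Exception):
    pass


def require(ctx: UserContext, scope: str) -> None:
    """Check the end user's permissions, not the agent's own service account."""
    if scope not in ctx.scopes:
        raise AuthorizationError(f"user {ctx.user_id!r} lacks scope {scope!r}")


def hr_records_agent(ctx: UserContext, employee_id: str) -> str:
    require(ctx, "hr:read")  # enforce the boundary where the data is accessed
    return f"record for {employee_id}"


def manager_agent(ctx: UserContext, employee_id: str) -> str:
    # Hand off with the same, unmodified user context so downstream checks still apply.
    return hr_records_agent(ctx, employee_id)
```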

LLM Guardrails

The guardrails pattern implements constraints to prevent hallucinations, unsafe outputs, or data leaks, creating boundaries that ensure agent reliability and safety.

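Key characteristics:

- Checks that flag hallucinated or unverifiable claims

- Filtering of unsafe or out-of-scope outputs

- Prevention of sensitive data leaks

- Enforced boundaries on permitted topics and actions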

Implementation example: A legal assistant agent might employ guardrails that verify citations against known case law databases, prevent advice on unauthorized practice areas, and flag potentially outdated legal interpretations.
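An output guardrail can be a post-processing check that runs before anything reaches the user. This sketch covers the three concerns from the example above with deliberately simple stand-ins: a hypothetical citation allowlist in place of a case-law database lookup, a simplified citation regex that only handles single-word party names, a US Social Security number pattern as one example of leaked data, and a hypothetical blocked practice area.

```python
import re

# Hypothetical allowlist standing in for a lookup against a case-law database.
KNOWN_CITATIONS = {"Marbury v. Madison, 5 U.S. 137 (1803)"}
# Simplified pattern for single-word "A v. B, <vol> U.S. <page> (<year>)" citations.
CITATION_RE = re.compile(r"[A-Z][\w.]* v\. [A-Z][\w.]*, \d+ U\.S\. \d+ \(\d{4}\)")
# US Social Security number shape, as one example of data that must not leak.
SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
# Hypothetical practice area this assistant is not allowed to advise on.
BLOCKED_TOPICS = ("immigration",)


def check_output(text: str) -> list[str]:
    """Return guardrail violations; an empty list means the text may be sent."""
    issues: list[str] = []
    for cite in CITATION_RE.findall(text):
        if cite not in KNOWN_CITATIONS:
            issues.append(f"unverified citation: {cite}")
    if SSN_RE.search(text):
        issues.append("possible SSN in output")
    lowered = text.lower()
    issues.extend(f"out-of-scope topic: {t}" for t in BLOCKED_TOPICS if t in lowered)
    return issues
```

A non-empty result could trigger regeneration, redaction, or escalation to a human, depending on the violation.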

Practical Implementation Strategies

Choosing the Right Architecture

Selecting an appropriate agent architecture depends on task complexity, specialization requirements, and coordination needs:

- For simple, focused tasks: Solo agent architecture provides simplicity and efficiency

- For workflows with distinct phases: Agent-to-agent handoff enables specialization

- For complex, multi-faceted problems: Hierarchical or orchestrated agents offer coordination advantages

The key consideration is balancing complexity with capability requirements—start with the simplest architecture that can handle the task effectively.

Balancing Autonomy and Control

Determining the right level of agent autonomy involves weighing efficiency against oversight needs:

- Higher autonomy: Increases efficiency but may reduce predictability

- Stronger controls: Improve reliability but may slow execution

- Hybrid approaches: Often implement dynamic autonomy levels based on task criticality

Consider implementing escalation pathways for exceptional cases and clear boundaries for autonomous decision-making.

Implementing Effective Memory Systems

Agent memory implementation typically involves multiple complementary approaches:

- Vector databases: For semantic retrieval capabilities

- Key-value stores: For structured information access

- Session contexts: For conversation continuity

- External knowledge bases: For factual grounding

The most effective implementations often layer these approaches, with different retention policies and access patterns for different types of information.

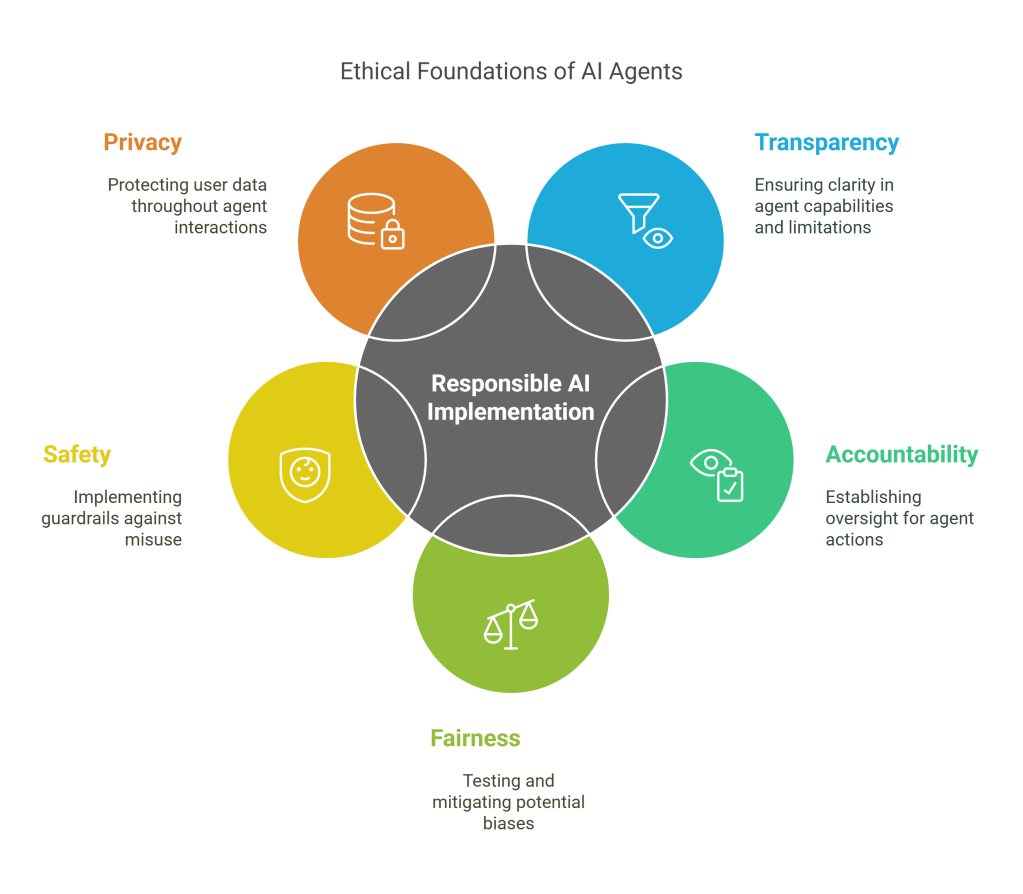

Ensuring Responsible AI Principles

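Responsible implementation of LLM-based agents requires attention to the safety, security, and oversight concerns addressed by the guardrail, identity, and human-in-the-loop patterns above.

These considerations should be built into the design process rather than added as afterthoughts.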

Future Directions in LLM-based Agents

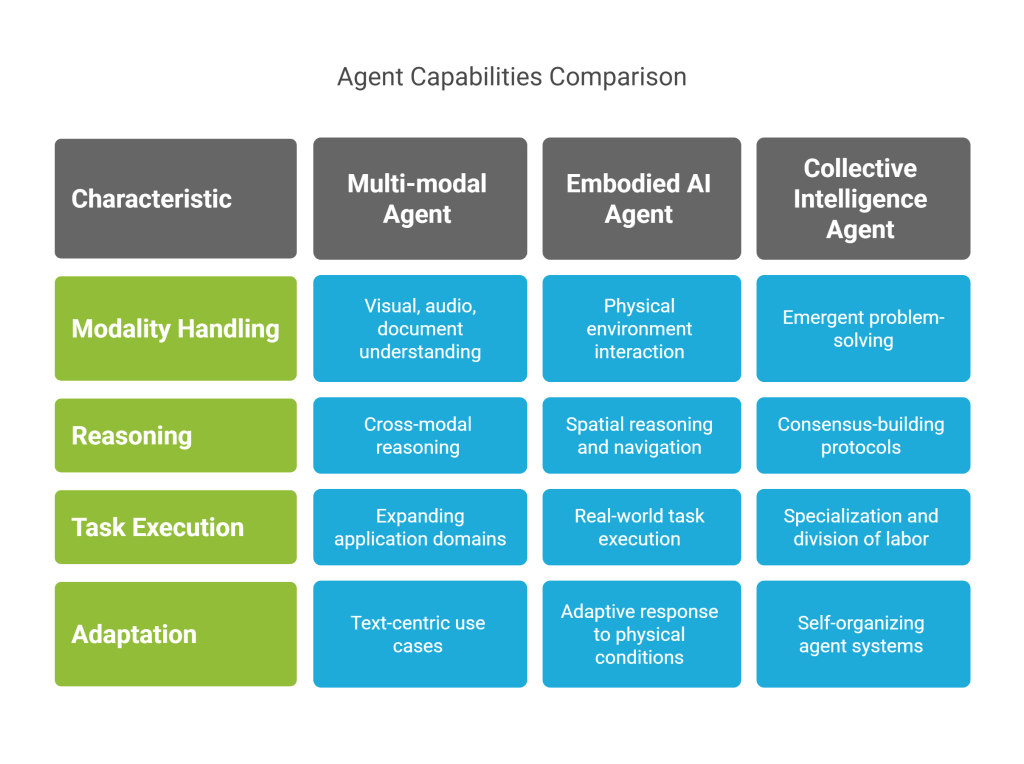

Multi-modal Agent Capabilities

As LLMs evolve to handle multiple modalities, agents are increasingly incorporating:

- Visual understanding and generation

- Audio processing and response

- Document analysis with spatial awareness

- Cross-modal reasoning capabilities

These capabilities are expanding the potential application domains for LLM-based agents beyond text-centric use cases.

Embodied AI and Physical Interaction

The integration of LLM-based agents with robotics and IoT systems is creating new possibilities for:

- Physical environment interaction

- Spatial reasoning and navigation

- Real-world task execution

- Adaptive response to physical conditions

This convergence represents a significant frontier for agent development, particularly in manufacturing, healthcare, and home automation.

Collective Intelligence and Agent Swarms

Beyond individual agents, research into agent collectives is exploring:

- Emergent problem-solving through agent collaboration

- Specialization and division of labor among agent teams

- Consensus-building protocols for agent groups

- Self-organizing agent systems

These approaches show promise for tackling highly complex problems beyond the capabilities of individual agents.

Conclusion

The development of LLM-based AI agents represents a transformative approach to automation, problem-solving, and human-AI collaboration. By applying the patterns outlined in this guide—from architectural considerations to security implementations—developers can create agents that are not only powerful but also reliable, secure, and aligned with human needs.

As these technologies continue to evolve, the most successful implementations will likely be those that thoughtfully combine multiple patterns to address specific use cases, while maintaining strong guardrails and human oversight where appropriate. The future of LLM-based agents lies not just in their autonomous capabilities, but in their ability to complement and enhance human work through thoughtful integration into existing workflows and processes.

Whether you’re building your first experimental agent or designing enterprise-grade agent systems, these patterns provide a foundation for creating solutions that harness the full potential of large language models while addressing the practical challenges of real-world implementation.

Discover more from SkillWisor
