Artificial Intelligence (AI) has evolved significantly over the past decade, with AI agents emerging as a pivotal innovation in the field. These autonomous systems are designed to perceive their environment, process data, and take actions to achieve specific goals, often interacting seamlessly with humans and other systems. This article provides a detailed exploration of AI agents, covering their definition, functionality, key components, underlying technologies, types, system architectures, and real-world applications. By the end, you’ll have a thorough understanding of AI agents and their transformative potential.

What Are AI Agents?

An AI agent is an autonomous system capable of perceiving its environment, processing data, and taking actions to achieve predefined goals. Unlike traditional software that follows rigid instructions, AI agents operate with a degree of independence, adapting to dynamic environments and making decisions based on real-time data. They can interact with humans, applications, and other AI systems, making them versatile tools for automating tasks and solving complex problems.

For example, consider a virtual assistant like Siri or Alexa. These AI agents perceive user voice commands (environment), process the input to understand the request (data processing), and respond by playing music, setting reminders, or providing information (action). Their ability to learn from user interactions over time further enhances their effectiveness, showcasing the core essence of an AI agent. Another example is an AI agent in an e-commerce platform that observes a user’s browsing patterns and purchase history (environment), processes this data to understand preferences (data processing), and then recommends personalized products or offers (action).

How Do AI Agents Work?

The functionality of AI agents can be broken down into a cyclical process involving several key steps:

- Perception: The agent gathers data from its environment using sensors or input mechanisms. For instance, a chatbot perceives user text input, a self-driving car’s sensors detect road conditions, and an agricultural AI agent might use drone imagery to assess crop health.

- Processing and Decision-Making: The agent uses algorithms, often powered by machine learning (ML) or natural language processing (NLP), to interpret the data and decide on the next action. For example, a recommendation system on a streaming platform processes user viewing history to suggest new shows, or a logistics AI agent processes real-time traffic and weather data to optimize delivery routes.

- Action: The agent executes its decision, which could involve generating a response, performing a task, or interacting with external systems. A robotic arm in a factory, for instance, might adjust its position to assemble a product, or a smart thermostat agent might adjust the temperature.

- Learning and Adaptation: Many AI agents incorporate a learning mechanism to improve performance over time. By analyzing past actions and outcomes, they refine their decision-making processes. A spam email filter, for example, learns to identify new spam patterns based on user feedback. Similarly, an AI agent controlling a manufacturing robot might learn to optimize its movements for speed and precision by analyzing sensor feedback from previous tasks.

This workflow is often supported by additional capabilities, such as memory (to store past interactions and learned knowledge), reactivity (to respond swiftly to immediate environmental changes), and access to external tools (e.g., APIs for weather information, databases for product specifications, or code interpreters for executing complex calculations). Together, these elements enable AI agents to operate autonomously and efficiently.
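The perceive, decide, act, and learn cycle described above can be sketched in a few lines of Python. This is a minimal illustration, not a reference design: the `ThermostatAgent` class, its method names, the 0.5 °C dead band, and the learning rate are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ThermostatAgent:
    """Toy agent (hypothetical): keeps a room near a target temperature."""
    target: float = 21.0         # assumed starting setpoint in °C
    learning_rate: float = 0.5   # how strongly user feedback shifts the target
    memory: list = field(default_factory=list)  # past (reading, action) pairs

    def perceive(self, environment: dict) -> float:
        # Perception: read the sensor value exposed by the environment.
        return environment["temperature"]

    def decide(self, reading: float) -> str:
        # Decision-making: a simple rule with a 0.5 °C dead band.
        if reading < self.target - 0.5:
            return "heat"
        if reading > self.target + 0.5:
            return "cool"
        return "idle"

    def act(self, action: str, environment: dict) -> None:
        # Action: heating or cooling changes the room by 1 °C per step.
        environment["temperature"] += {"heat": 1.0, "cool": -1.0, "idle": 0.0}[action]

    def learn(self, user_setpoint: Optional[float]) -> None:
        # Learning: move the target part of the way toward a manual override.
        if user_setpoint is not None:
            self.target += self.learning_rate * (user_setpoint - self.target)

    def step(self, environment: dict, user_setpoint: Optional[float] = None) -> str:
        # One pass through the cycle: perceive -> decide -> act -> remember -> learn.
        reading = self.perceive(environment)
        action = self.decide(reading)
        self.act(action, environment)
        self.memory.append((reading, action))
        self.learn(user_setpoint)
        return action


if __name__ == "__main__":
    room = {"temperature": 18.0}
    agent = ThermostatAgent()
    for _ in range(4):
        print(agent.step(room), room["temperature"])
```

The `memory` list stands in for the memory capability mentioned above; a real agent would typically persist far richer state and call external tools inside `decide` or `act`.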

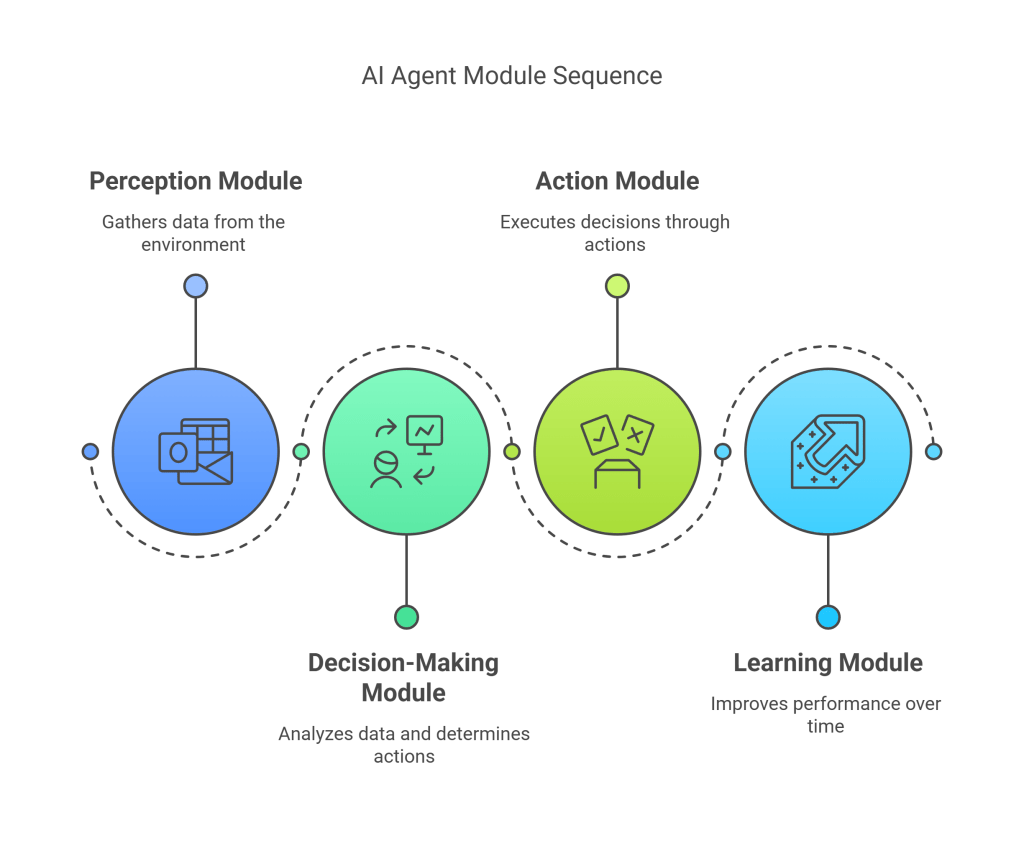

Key Components of AI Agents

AI agents are built on four fundamental modules, each serving a distinct purpose in their operation:

- Perception Module: The perception module is responsible for gathering data from the environment. This could involve processing text, images, audio, video streams, or sensor data (like temperature, pressure, proximity, GPS coordinates), depending on the agent’s purpose. For example, in a smart home system, the perception module might use temperature sensors, motion detectors, and microphone arrays to detect changes in the room’s climate and occupancy, enabling the agent to adjust the thermostat and lighting accordingly. An autonomous drone’s perception module would integrate data from cameras, LiDAR, and inertial measurement units (IMUs).

- Decision-Making Module: The decision-making module is the brain of the AI agent, using AI/ML algorithms to analyze data from the perception module and determine the best course of action. This module often relies on techniques like reinforcement learning, probabilistic reasoning, planning algorithms, or knowledge graphs. For instance, a chess-playing AI agent evaluates possible moves and selects the one most likely to lead to victory based on its decision-making algorithms, which might involve searching through millions of potential game states. A supply chain optimization agent might use predictive analytics and optimization algorithms to decide on inventory levels and shipping routes.

- Action Module: The action module executes the decisions made by the agent, either through autonomous actions or user interaction. This can involve physical actions (e.g., moving a robot arm, steering a vehicle) or digital actions (e.g., sending an email, updating a database, displaying information on a screen). In a customer service chatbot, the action module might generate a text response to a user query, or escalate the query to a human agent. In a robotic vacuum cleaner, it might direct the device to navigate around obstacles and clean specific areas.

- Learning Module: The learning module enables the agent to improve its performance over time by analyzing past actions, their outcomes, and new data from the environment. This is often achieved through machine learning techniques like supervised learning (learning from labeled examples), unsupervised learning (finding patterns in unlabeled data), or reinforcement learning (learning through trial and error with rewards or penalties). A recommendation system on an e-commerce platform, for example, learns user preferences by analyzing purchase history, browsing behavior, and explicit feedback (ratings), refining its suggestions with each interaction. An industrial robot might use its learning module to refine its welding technique by analyzing the quality of previous welds.

These components work together in a continuous loop to create a cohesive system capable of autonomous operation, intelligent decision-making, and continuous improvement.
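To make the division of labour between the four modules concrete, here is a small sketch built around the spam filter example. Everything in it is illustrative: the `SpamFilterAgent` class, the word-weight scoring, the threshold, and the mistake-driven update rule are assumptions chosen for brevity, not how any particular production filter works.

```python
class SpamFilterAgent:
    """Toy spam filter (hypothetical) with one method per module."""

    def __init__(self, threshold: float = 1.0, step: float = 0.5):
        self.weights = {}           # learned per-word spam weights
        self.threshold = threshold  # score at or above this means "spam"
        self.step = step            # size of each learning update

    def perceive(self, message: str) -> set:
        # Perception module: turn raw text into a set of lowercase words.
        return set(message.lower().split())

    def decide(self, words: set) -> bool:
        # Decision-making module: sum the weights of the words seen.
        score = sum(self.weights.get(word, 0.0) for word in words)
        return score >= self.threshold

    def act(self, is_spam: bool) -> str:
        # Action module: route the message to a folder.
        return "spam" if is_spam else "inbox"

    def learn(self, words: set, predicted: bool, actual: bool) -> None:
        # Learning module: adjust word weights only when the prediction was wrong.
        if predicted == actual:
            return
        direction = 1.0 if actual else -1.0
        for word in words:
            self.weights[word] = self.weights.get(word, 0.0) + direction * self.step

    def handle(self, message: str, feedback=None) -> str:
        # Run the modules in order; feedback is the user's spam / not-spam label.
        words = self.perceive(message)
        is_spam = self.decide(words)
        folder = self.act(is_spam)
        if feedback is not None:
            self.learn(words, is_spam, feedback)
        return folder


if __name__ == "__main__":
    agent = SpamFilterAgent()
    print(agent.handle("win free prize now", feedback=True))  # user marks it as spam
    print(agent.handle("free prize inside"))
```

Keeping each module behind its own method means the scoring rule or the learning rule can be swapped out without touching the rest of the loop.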

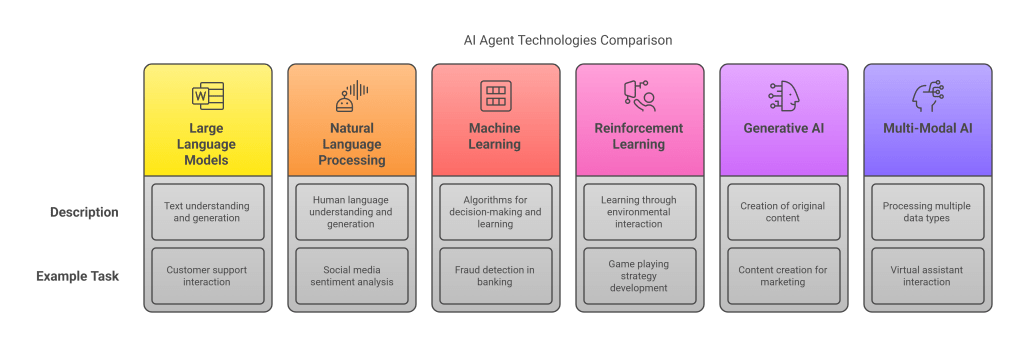

Key Technologies Behind AI Agents

AI agents rely on a combination of advanced technologies to function effectively. Here’s an overview of the primary technologies powering these systems:

- Large Language Models (LLMs): LLMs, such as the GPT models behind ChatGPT, Gemini, or Claude, are a cornerstone of many AI agents, particularly those focused on language-intensive tasks. These models are trained on vast datasets of text and code to understand, generate, and manipulate human-like text. This enables agents to engage in nuanced conversations, answer complex questions, summarize documents, write creative content, and even generate code. For example, a customer support agent powered by an LLM can understand and respond to user inquiries in natural language with remarkable fluency, improving user experience and handling a wider range of issues than traditional scripted chatbots.

- Natural Language Processing (NLP): NLP is a broader field of AI that enables AI agents to understand, interpret, and generate human language, including text and speech. It encompasses tasks like sentiment analysis (determining the emotional tone of text), entity recognition (identifying key entities like names, dates, locations), part-of-speech tagging, machine translation, and speech recognition/synthesis. A social media monitoring agent, for instance, might use NLP to analyze user posts and comments to detect sentiment towards a brand, identify emerging trends, or flag inappropriate content.

- Machine Learning (ML): ML provides the foundational algorithms for decision-making, pattern recognition, and learning in AI agents. Supervised learning (e.g., image classification, spam detection), unsupervised learning (e.g., customer segmentation, anomaly detection), and reinforcement learning are commonly used to train agents for specific tasks. A fraud detection agent in the banking sector, for example, might use ML algorithms trained on historical transaction data to identify unusual patterns indicative of potential fraud, flagging these transactions for further investigation.

- Reinforcement Learning (RL): Reinforcement learning is a type of ML where agents learn by interacting with their environment and receiving rewards or penalties for their actions. The agent’s goal is to learn a policy (a mapping from states to actions) that maximizes its cumulative reward over time. This technique is widely used in gaming (e.g., teaching an AI to play complex board games or video games), robotics (e.g., teaching a robot to walk or grasp objects), and control systems (e.g., optimizing energy consumption in a smart grid). For instance, DeepMind’s AlphaGo combined supervised learning from human expert games with RL through self-play, alongside Monte Carlo tree search, to master the game of Go.

- Generative AI: Generative AI enables agents to create new, original content, such as text, images, audio, video, or even synthetic data. This technology is often based on models like Generative Adversarial Networks (GANs) or diffusion models, in addition to LLMs for text generation. It’s used in creative applications, like generating marketing copy, designing artwork, composing music, or creating virtual environments. An AI agent for content creation might use generative AI to draft blog posts tailored to a specific audience, generate product descriptions, or create variations of an image for A/B testing, significantly speeding up content production.

- Multi-Modal AI: Multi-modal AI allows agents to process, understand, integrate, and generate information from multiple types of data (modalities) simultaneously, such as text, images, audio, video, and sensor readings. This is crucial for applications requiring a holistic understanding of the environment or more human-like interaction. For example, a virtual assistant like Google Assistant or a more advanced AI agent can process a voice command (“Show me pictures of my dog playing in the park from last summer”) and respond by retrieving and displaying relevant images, combining voice processing, natural language understanding, image recognition, and potentially even date/location metadata analysis. A medical diagnostic agent might analyze a patient’s X-ray (image), their medical history (text), and their reported symptoms (text/speech) to provide a more accurate assessment. Multi-modal agents can also generate multi-modal content, such as creating a video with a voiceover from a text script. This capability allows for richer, more contextual interactions and a deeper understanding of complex scenarios.

These technologies are often used in combination, collectively enabling AI agents to perform a wide range of tasks, from sophisticated language understanding and generation to complex decision-making, creative content generation, and nuanced interaction with the physical and digital worlds.
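Of the technologies above, reinforcement learning is the easiest to show end to end in a few lines. The sketch below uses tabular Q-learning, one common RL algorithm, on a made-up five-cell corridor where the only reward is for reaching the last cell. The environment, the hyperparameters, and all function names are assumptions for illustration.

```python
import random

N_STATES = 5                  # corridor cells 0..4; cell 4 is the goal (assumed setup)
ACTIONS = ("left", "right")   # action index 0 moves left, 1 moves right


def step(state: int, action: int):
    """Deterministic toy environment: returns (next_state, reward, done)."""
    nxt = max(0, state - 1) if action == 0 else min(N_STATES - 1, state + 1)
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done


def choose(q_row, epsilon, rng):
    """Epsilon-greedy action selection with random tie-breaking."""
    if rng.random() < epsilon:
        return rng.randrange(len(ACTIONS))
    best = max(q_row)
    return rng.choice([a for a, value in enumerate(q_row) if value == best])


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Learn a Q-table (state x action values) by trial and error."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _step in range(200):  # cap episode length as a safety net
            action = choose(q[state], epsilon, rng)
            nxt, reward, done = step(state, action)
            # Q-learning target: reward plus discounted best value of the next state.
            target = reward + (0.0 if done else gamma * max(q[nxt]))
            q[state][action] += alpha * (target - q[state][action])
            state = nxt
            if done:
                break
    return q


def greedy_policy(q):
    """The learned policy: best action for every non-goal state."""
    return [ACTIONS[row.index(max(row))] for row in q[:-1]]


if __name__ == "__main__":
    print(greedy_policy(train()))
```

The returned list is the policy in the sense used above: a mapping from each state to the action the agent currently believes maximizes cumulative reward.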

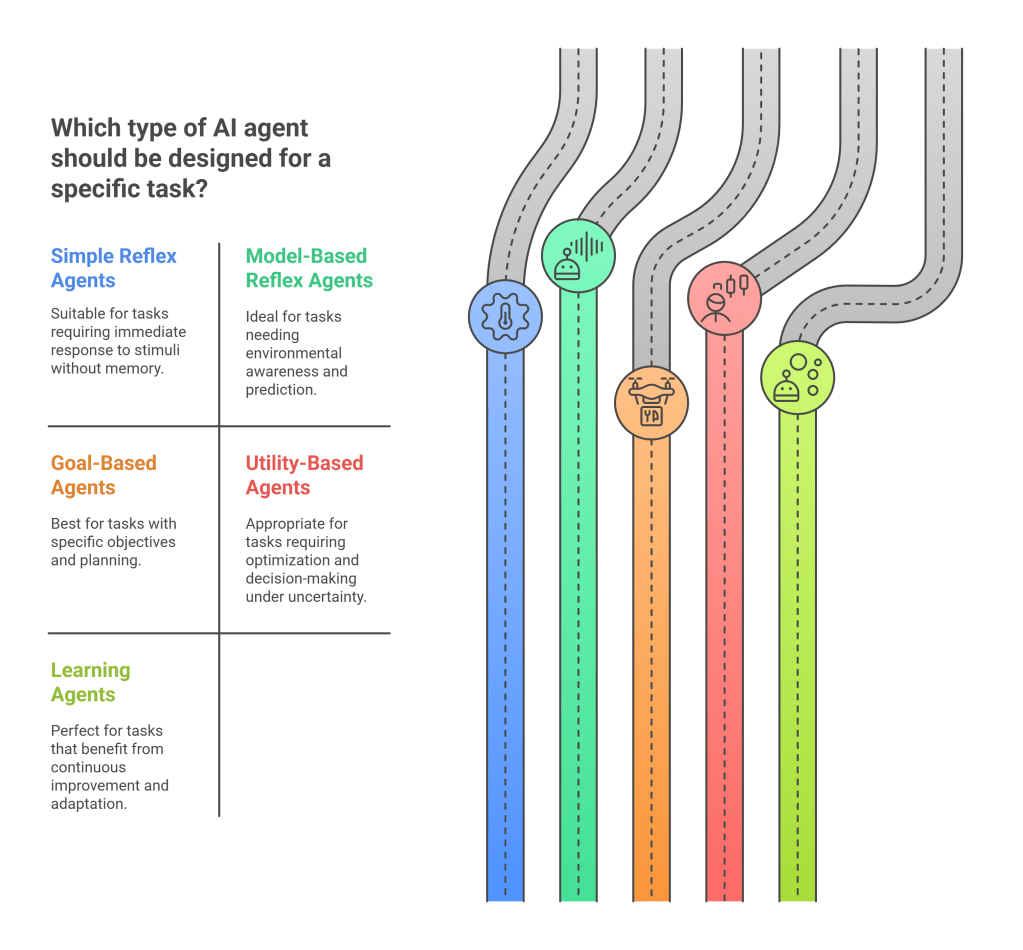

Types of AI Agents

AI agents can be categorized based on their complexity, capabilities, and operational mechanisms. Here are the main types:

- Simple Reflex Agents: Simple reflex agents operate based on predefined condition-action rules (if-then rules) and respond directly to specific environmental stimuli without maintaining an internal state or memory of past perceptions. They are suitable for simple tasks where the current percept provides all necessary information for action. For example, a thermostat is a simple reflex agent that turns on the heater when the temperature drops below a set threshold. An automated email filter that moves messages with certain keywords to the spam folder is another example.

- Model-Based Reflex Agents: Model-based reflex agents maintain an internal model or representation of the environment, which helps them keep track of the current state of the world even when it’s not fully observable from the current percept. This internal model allows them to handle partially observable environments and make more informed decisions based on how the world evolves independently of the agent, and how the agent’s own actions affect the world. A robotic vacuum cleaner that builds and updates a map of a room to navigate efficiently and remember cleaned areas is an example of a model-based reflex agent. A self-driving car agent needs a model of how other cars move and how its own steering affects its trajectory.

- Goal-Based Agents: Goal-based agents are designed to achieve specific objectives. They use their internal model along with information about their goals to evaluate multiple possible sequences of actions and determine which sequence will lead to a desired goal state. This often involves search and planning algorithms. For instance, a delivery drone might calculate the shortest or most energy-efficient route to its destination, considering obstacles and weather conditions. A travel planning agent might search for flights and accommodations that meet a user’s budget and date preferences.

- Utility-Based Agents: Utility-based agents go a step further than goal-based agents by optimizing decisions for maximum “utility” or “happiness,” often using a utility function to evaluate the desirability of different states or outcomes. This allows them to make rational decisions in situations where there are conflicting goals or uncertainty about outcomes. A stock trading agent, for example, might analyze market data to maximize financial returns while managing risk, weighing the potential utility (profit) of different trades against their probabilities of success and potential losses. A personalized news recommendation agent might select articles based on a utility function that considers the user’s interests, the recency of the news, and the diversity of topics.

- Learning Agents: Learning agents continuously improve their performance by adapting to new experiences, data, and feedback. They have a learning element that allows them to modify their internal models, decision-making rules, or utility functions over time. They can learn from their successes and failures, becoming more effective and efficient. A spam filter that learns to identify new spam patterns based on user feedback (marking emails as spam or not spam) is a classic example of a learning agent. An AI agent controlling a game character might use reinforcement learning to improve its strategies by playing the game repeatedly.

Each type of agent is suited to specific tasks and environments, with complexity and capabilities generally increasing from simple reflex agents to sophisticated learning agents capable of autonomous improvement and complex reasoning.
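The difference between some of these agent types is easier to see side by side. The sketch below contrasts three of them: a simple reflex rule, a goal-based planner that searches for a route, and a utility-based chooser that scores options. The function names, the grid encoding, and the utility formula are illustrative assumptions; model-based and learning agents are left out to keep the example short.

```python
from collections import deque


def reflex_thermostat(temperature: float, threshold: float = 20.0) -> str:
    """Simple reflex agent: one condition-action rule, no memory."""
    return "heater_on" if temperature < threshold else "heater_off"


def plan_route(grid, start, goal):
    """Goal-based agent: breadth-first search for a shortest path.

    grid is a list of strings where '#' marks an obstacle (assumed encoding).
    Returns the list of (row, col) cells from start to goal, or None.
    """
    rows, cols = len(grid), len(grid[0])
    parents = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:   # walk back through the parent links
                path.append(cell)
                cell = parents[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] != "#" and (nr, nc) not in parents:
                parents[(nr, nc)] = cell
                queue.append((nr, nc))
    return None


def pick_trade(options, risk_aversion: float = 0.5) -> str:
    """Utility-based agent: pick the option with the highest utility.

    Utility here is expected gain minus a risk-weighted expected loss
    (a made-up formula for illustration).
    """
    def utility(option):
        expected_gain = option["p_win"] * option["gain"]
        expected_loss = (1 - option["p_win"]) * option["loss"]
        return expected_gain - (1 + risk_aversion) * expected_loss

    return max(options, key=utility)["name"]


if __name__ == "__main__":
    print(reflex_thermostat(18.0))
    print(plan_route(["..#", ".##", "..."], (0, 0), (2, 2)))
    trades = [
        {"name": "A", "p_win": 0.6, "gain": 100, "loss": 80},
        {"name": "B", "p_win": 0.9, "gain": 30, "loss": 20},
    ]
    print(pick_trade(trades))
```

Note how the reflex agent needs only the current percept, the goal-based agent needs a model of the grid to plan, and the utility-based agent can change its choice when `risk_aversion` changes even though the options stay the same.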

AI Agent System Architecture

The architecture of an AI agent system determines how agents interact with each other, their environment, and potentially with humans. There are three primary architectures:

- Single Agent: In a single-agent architecture, the agent operates independently as a standalone system to perform its tasks and achieve its goals. It perceives its environment and acts upon it without direct collaboration or communication with other agents. Many personal assistants or specialized automation tools fall into this category. For example, a virtual assistant like Siri or Google Assistant on a smartphone handles user requests autonomously, performing tasks like setting reminders, answering questions, or controlling device settings. A standalone AI writing assistant that helps a user draft an email is also a single agent.

- Multi-Agent Systems (MAS): Multi-agent systems involve multiple autonomous agents working together, either collaboratively or competitively, to achieve individual or common goals. Each agent may have specialized roles, capabilities, and knowledge. They communicate and coordinate their actions using defined protocols. MAS are used for complex problems that can be decomposed into smaller, manageable tasks or that require distributed intelligence. In a smart traffic management system, for instance, multiple AI agents might control traffic lights at different intersections, communicating with each other to optimize traffic flow across a city and reduce congestion. In e-commerce, a team of agents might handle inventory management, pricing, and customer interaction.

- Human-Machine Teaming (or Human-in-the-Loop): Human-machine architectures involve AI agents interacting closely with humans, where the AI augments human capabilities, provides assistance, or collaborates on tasks. The human can provide input, override decisions, or take over when the AI encounters situations beyond its capabilities. This is common in critical applications where human oversight is essential. For example, in a medical diagnosis system, an AI agent might analyze patient data (scans, lab results, history) and suggest potential diagnoses or treatment options, but a human doctor reviews the suggestions, incorporates their own expertise, and makes the final decision. Pilots using advanced autopilot systems, or analysts using AI tools to sift through large datasets for intelligence gathering, are other examples.

These architectures highlight the flexibility of AI agents, enabling them to operate independently, as part of a larger collective intelligence, or in synergistic partnership with humans, depending on the complexity and requirements of the task.
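One common way to wire up human-in-the-loop oversight is a confidence gate: the agent acts on its own only when it is confident enough, and otherwise hands the case to a person. The sketch below assumes the agent reports a confidence score between 0 and 1; the `Suggestion` type, the `decide_with_oversight` function, and the 0.9 threshold are hypothetical names and values chosen for the example.

```python
from dataclasses import dataclass
from typing import Callable, Tuple


@dataclass
class Suggestion:
    label: str         # what the agent proposes
    confidence: float  # assumed to be a score in [0, 1]


def decide_with_oversight(
    suggestion: Suggestion,
    ask_human: Callable[[Suggestion], str],
    threshold: float = 0.9,
) -> Tuple[str, str]:
    """Return (final_label, decided_by).

    The agent's suggestion is accepted only at or above the threshold;
    otherwise the human reviewer's answer is final.
    """
    if suggestion.confidence >= threshold:
        return suggestion.label, "agent"
    return ask_human(suggestion), "human"


if __name__ == "__main__":
    def reviewer(s: Suggestion) -> str:
        # Stand-in for a real review step (e.g., a doctor reading the scan).
        return f"reviewed: {s.label}"

    print(decide_with_oversight(Suggestion("benign", 0.97), reviewer))
    print(decide_with_oversight(Suggestion("malignant", 0.55), reviewer))
```

For settings like the medical example above, where a human must always make the final call, the threshold can be set above 1.0 so that every suggestion is routed to the reviewer.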

Real-World Applications of AI Agents

AI agents are transforming industries by automating tasks, enhancing decision-making, improving efficiency, and creating new services. Here are some notable applications:

- Healthcare:

- Diagnostics: AI agents analyze medical images (X-rays, CT scans, MRIs) to detect diseases like cancer or diabetic retinopathy, in some studies with accuracy comparable to that of human experts.

- Treatment Planning: Systems such as IBM’s Watson for Oncology have applied NLP and ML to medical literature, patient records, and clinical trial data to suggest personalized treatment options for cancer patients.

- Patient Monitoring: Wearable devices and smart sensors with AI agents can monitor patients’ vital signs in real-time, alerting healthcare providers to anomalies or emergencies, especially for chronic disease management or post-operative care.

- Drug Discovery: AI agents accelerate the process of discovering new drugs by analyzing complex biological data, predicting molecular interactions, and identifying potential drug candidates.

- Finance:

- Fraud Detection: AI agents monitor financial transactions in real-time, identifying patterns indicative of fraudulent activity (e.g., unauthorized credit card use, money laundering) and flagging them for review or blocking them.

- Algorithmic Trading: AI agents analyze market data, news sentiment, and economic indicators at high speed to execute trades, aiming to maximize profits or minimize risks based on predefined strategies.

- Robo-Advisors: Automated investment platforms use AI agents to provide personalized financial advice, manage investment portfolios, and perform tasks like tax-loss harvesting based on a user’s financial goals and risk tolerance.

- Credit Scoring: AI agents assess creditworthiness by analyzing a wider range of data points than traditional methods, potentially offering fairer and more accurate lending decisions.

- Customer Service:

- Chatbots and Virtual Assistants: AI-powered agents handle customer inquiries 24/7 through text or voice, providing information, resolving common issues, processing orders or returns, and guiding users through processes, reducing wait times and operational costs.

- Personalized Recommendations: E-commerce and streaming platforms use AI agents to analyze user behavior and preferences to offer tailored product or content recommendations, enhancing user experience and driving sales.

- Sentiment Analysis: Agents analyze customer feedback from surveys, reviews, and social media to gauge satisfaction and identify areas for improvement.

- Autonomous Vehicles:

- Self-driving cars, trucks, and drones rely on a suite of AI agents to perceive their environment (using cameras, LiDAR, radar, GPS), make critical driving decisions (lane keeping, obstacle avoidance, navigation), and control the vehicle safely. These agents process vast amounts of sensor data in real-time to navigate complex and dynamic road conditions.

- Robotics (Industrial, Service, and Social):

- Manufacturing: AI-powered robots perform tasks like assembly, welding, painting, and quality inspection with high precision and efficiency. Learning agents in robots can adapt to new tasks or variations in products with minimal reprogramming. For example, a robot arm might use computer vision and reinforcement learning to pick and place unfamiliar objects.

- Logistics and Warehousing: Autonomous mobile robots (AMRs) navigate warehouses to pick, sort, and transport goods, optimizing routes and collaborating with human workers and other robots.

- Service Robots: AI agents power robots used in hospitality (e.g., delivery robots in hotels), healthcare (e.g., assisting elderly patients), and domestic settings (e.g., advanced robotic vacuum cleaners and lawnmowers).

- Social Robots: Agents enable robots to interact with humans in a more natural and engaging way, understanding social cues, expressing emotions (to some extent), and providing companionship or assistance. Examples include robots used in education or as companions for the elderly.

- Gaming:

- AI agents create realistic and challenging non-player characters (NPCs) that can adapt their strategies, learn from player behavior, and provide dynamic and engaging gameplay experiences.

- Procedural content generation (PCG) driven by AI agents can create vast and unique game worlds, levels, and narratives.

- Smart Homes and Cities:

- Smart Homes: AI agents manage lighting, temperature, security systems, and appliances based on user preferences, occupancy, and environmental conditions to enhance comfort, convenience, and energy efficiency.

- Smart Cities: AI agents are used for optimizing traffic flow, managing public transportation, monitoring environmental conditions (air quality, noise pollution), improving energy distribution, and enhancing public safety through smart surveillance.

- Content Creation and Personalization:

- AI agents can generate articles, marketing copy, social media posts, and even scripts.

- They personalize news feeds, music recommendations, and advertising content based on individual user profiles and behavior.

Challenges and Future Directions

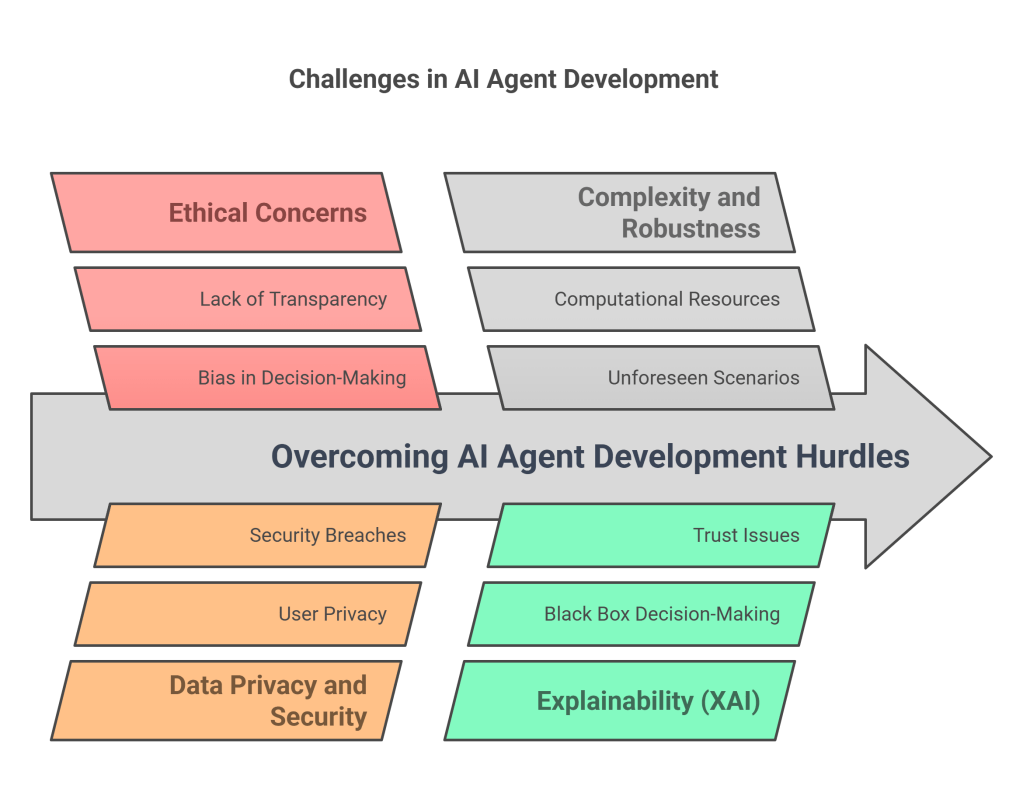

While AI agents offer immense potential, they also face several challenges:

- Ethical Concerns: AI agents must be designed and trained to avoid bias (e.g., in hiring, loan applications, or law enforcement) and ensure fairness, transparency, and accountability in their decision-making processes.

- Data Privacy and Security: Agents often require access to large amounts of personal or sensitive data, raising significant concerns about user privacy, data protection, and the potential for misuse or security breaches.

- Complexity and Robustness: Developing highly capable and robust AI agents, especially those operating in complex, unpredictable environments or in multi-agent systems, requires significant computational resources, sophisticated algorithms, and extensive testing. Ensuring they can handle unforeseen scenarios gracefully and avoid catastrophic failures is critical.

- Explainability (XAI): For many advanced AI agents, particularly those using deep learning, their decision-making processes can be “black boxes,” making it difficult to understand why a particular decision was made. Improving explainability is crucial for trust and debugging, especially in critical applications.

- Human-Agent Interaction: Designing intuitive and effective ways for humans to interact, collaborate, and trust AI agents is an ongoing challenge.

Looking ahead, the future of AI agents is promising, driven by advances in areas like:

- Enhanced Multi-modal AI: Agents will become even more adept at understanding and generating information across a wider range of modalities, leading to more natural and comprehensive interactions.

- Sophisticated Reinforcement Learning: RL techniques will enable agents to learn more complex behaviors with less data and adapt more quickly to changing environments.

- Generative AI: The ability of agents to create high-quality, novel content will continue to expand, impacting numerous creative and professional fields.

- Federated Learning and Edge AI: These will allow agents to learn from decentralized data sources while preserving privacy, and to perform more processing locally on devices, reducing latency and reliance on cloud connectivity.

- Agent-to-Agent Communication and Collaboration: Standards and protocols for more sophisticated inter-agent communication will enable more powerful multi-agent systems capable of tackling larger, more complex problems.

The integration of AI agents into emerging technologies like the Internet of Things (IoT), 5G/6G networks, quantum computing (for certain types of processing), and the metaverse will further expand their capabilities, paving the way for smarter, more autonomous, and more deeply integrated systems across all aspects of life.

Conclusion

AI agents represent a significant leap forward in artificial intelligence, offering a powerful framework for automating tasks, solving complex problems, and enhancing human capabilities. By understanding their core components, the underlying technologies that power them, the different types of agents, and their system architectures, we can better appreciate their expanding role in transforming industries. As the technology continues to evolve, AI agents are likely to play an increasingly central role in driving innovation and creating a more efficient, intelligent, and interconnected world.

Whether it’s a chatbot assisting with customer queries, a self-driving car navigating city streets, an industrial robot optimizing a manufacturing line, or a medical agent supporting doctors in diagnosis, the impact of AI agents is already profound and continues to grow. As we continue to explore their potential and address the associated challenges, the possibilities for AI agents are limited only by our imagination and our ability to guide their development responsibly.
