Introduction

Named Entity Recognition (NER) is a fundamental task in natural language processing (NLP) that involves identifying and classifying named entities within text. These entities represent real-world objects such as people, organizations, locations, dates, monetary values, and other specific items that carry semantic meaning. As one of the core components of information extraction, NER serves as a bridge between unstructured text and structured data, making it invaluable for numerous applications in the modern digital landscape.

What is Named Entity Recognition?

Named Entity Recognition is a subtask of information extraction that seeks to locate and classify named entities mentioned in unstructured text into predefined categories. The process involves two main steps: first, identifying boundaries of named entities in text (entity detection), and second, classifying these entities into appropriate categories (entity classification).

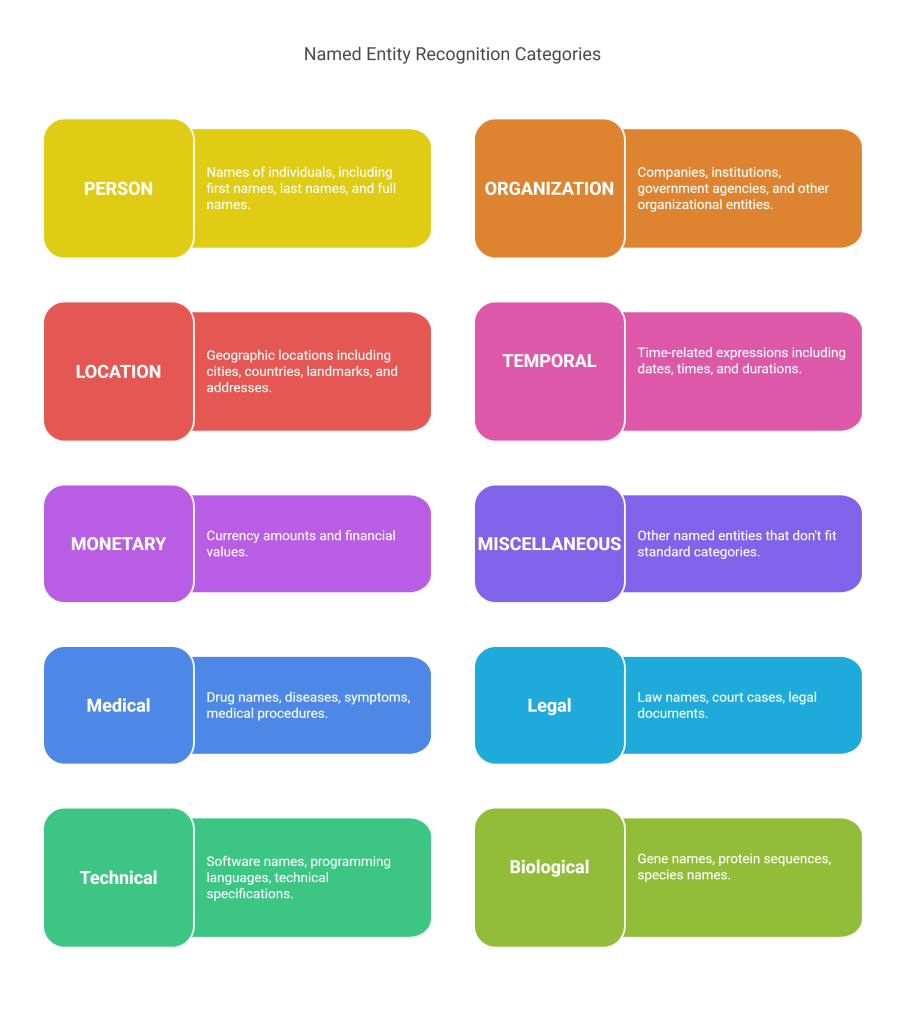

Common Entity Types

The most widely recognized entity categories include:

PERSON: Names of individuals, including first names, last names, and full names

- Examples: “John Smith”, “Marie Curie”, “Einstein”

ORGANIZATION: Companies, institutions, government agencies, and other organizational entities

- Examples: “Apple Inc.”, “Harvard University”, “United Nations”

LOCATION: Geographic locations including cities, countries, landmarks, and addresses

- Examples: “New York City”, “Mount Everest”, “123 Main Street”

TEMPORAL: Time-related expressions including dates, times, and durations

- Examples: “January 15, 2024”, “3:30 PM”, “last week”

MONETARY: Currency amounts and financial values

- Examples: “$1,000”, “€500”, “fifty dollars”

MISCELLANEOUS: Other named entities that don’t fit standard categories

- Examples: product names, event names, languages, nationalities

Beyond Standard Categories

Modern NER systems often extend beyond these basic categories to include domain-specific entities such as:

- Medical: Drug names, diseases, symptoms, medical procedures

- Legal: Law names, court cases, legal documents

- Technical: Software names, programming languages, technical specifications

- Biological: Gene names, protein sequences, species names

Why is Named Entity Recognition Important?

Information Extraction and Knowledge Management

NER transforms unstructured text into structured information, enabling organizations to extract valuable insights from documents, emails, reports, and other textual content. This capability is crucial for knowledge management systems that need to organize and retrieve information efficiently.

Search and Information Retrieval Enhancement

By identifying entities within documents, NER significantly improves search capabilities. Users can search for specific people, places, or organizations rather than relying solely on keyword matching. This leads to more precise and relevant search results.

Content Analysis and Business Intelligence

Organizations use NER to analyze large volumes of text data for business intelligence purposes. For example, companies can monitor news articles and social media posts to track mentions of their brand, competitors, or industry-related topics.

Automated Content Processing

NER enables automated processing of documents for various purposes, including:

- Document classification: Categorizing documents based on entities they contain

- Compliance monitoring: Identifying regulated entities in financial or legal documents

- Content recommendation: Suggesting related content based on entity similarity

Foundation for Advanced NLP Tasks

NER serves as a preprocessing step for more complex NLP tasks such as:

- Relation extraction: Identifying relationships between entities

- Question answering: Understanding what entities a question refers to

- Machine translation: Preserving entity names across languages

- Text summarization: Maintaining important entity information in summaries

How Does Named Entity Recognition Work?

Traditional Approaches

Rule-Based Systems

Early NER systems relied heavily on hand-crafted rules and regular expressions. These systems used patterns such as:

- Capitalization patterns (proper nouns typically start with capital letters)

- Contextual clues (titles like “Mr.”, “Dr.”, “Inc.”)

- Gazetteers (lists of known entities)

- Part-of-speech patterns

While rule-based systems can achieve high precision in specific domains, they require extensive manual effort and struggle with ambiguity and variability in natural language.

Statistical Methods

Statistical approaches introduced machine learning to NER, using features such as:

- Lexical features: Word forms, capitalization, prefixes, suffixes

- Contextual features: Surrounding words, part-of-speech tags

- Orthographic features: Presence of digits, hyphens, special characters

- Gazetteer features: Membership in entity lists

Popular statistical models included Hidden Markov Models (HMMs), Maximum Entropy models, and Conditional Random Fields (CRFs).

Modern Deep Learning Approaches

Recurrent Neural Networks (RNNs)

RNN-based models, particularly Long Short-Term Memory (LSTM) networks and Bidirectional LSTMs (BiLSTMs), revolutionized NER by capturing sequential dependencies in text. These models can learn complex patterns and representations automatically.

Transformer-Based Models

The advent of transformer architectures, particularly BERT (Bidirectional Encoder Representations from Transformers) and its variants, has achieved state-of-the-art performance in NER tasks. These models leverage:

- Contextual embeddings: Word representations that change based on context

- Attention mechanisms: Focusing on relevant parts of the input sequence

- Pre-training: Learning from large amounts of unlabeled text before fine-tuning on NER tasks

Sequence Labeling Approaches

Most modern NER systems frame the problem as sequence labeling, using tagging schemes such as:

BIO Tagging:

- B (Beginning): First token of an entity

- I (Inside): Continuation of an entity

- O (Outside): Not part of an entity

BILOU Tagging:

- B (Beginning): First token of a multi-token entity

- I (Inside): Continuation of a multi-token entity

- L (Last): Final token of a multi-token entity

- O (Outside): Not part of an entity

- U (Unit): Single-token entity

Implementation Pipeline

A typical NER implementation involves several steps:

1. Text Preprocessing

- Tokenization: Breaking text into individual words or subwords

- Sentence segmentation: Dividing text into sentences

- Normalization: Handling capitalization, punctuation, and special characters

2. Feature Engineering (for traditional approaches)

- Extracting relevant features from text

- Creating feature vectors for machine learning models

3. Model Training

- Preparing annotated training data

- Training the NER model on labeled examples

- Hyperparameter tuning and validation

4. Post-processing

- Applying business rules or constraints

- Resolving conflicts between overlapping entities

- Normalizing entity mentions to canonical forms

Evaluation Metrics

NER systems are typically evaluated using:

Precision: Percentage of predicted entities that are correct Recall: Percentage of actual entities that are correctly identified F1-Score: Harmonic mean of precision and recall

Evaluation can be performed at different levels:

- Token-level: Evaluating individual token classifications

- Entity-level: Evaluating complete entity spans

- Exact match: Requiring perfect boundary and type matching

- Partial match: Allowing some overlap between predicted and actual entities

Challenges in Named Entity Recognition

Ambiguity and Context Dependency

Many words can serve as both common nouns and named entities depending on context. For example, “Apple” could refer to the fruit or the technology company. Resolving such ambiguities requires sophisticated understanding of context.

Entity Boundary Detection

Determining where entities begin and end can be challenging, especially for:

- Multi-word entities: “New York City”, “World Health Organization”

- Nested entities: “University of California, Los Angeles” contains both an organization and a location

- Discontinuous entities: Entities split across multiple non-contiguous tokens

Domain Adaptation

NER models trained on one domain often perform poorly on others due to:

- Different entity types and distributions

- Varying linguistic patterns and terminology

- Domain-specific abbreviations and conventions

Multilingual and Cross-lingual Challenges

Working with multiple languages introduces additional complexity:

- Different writing systems and character sets

- Varying capitalization conventions

- Language-specific entity structures

- Limited annotated data for low-resource languages

Evolving Language and New Entities

Language constantly evolves, introducing new entities and changing existing ones:

- Emerging organizations, products, and technologies

- Social media and informal language patterns

- Abbreviations, hashtags, and internet slang

Tools and Frameworks

Open Source Libraries

spaCy: A popular industrial-strength NLP library offering pre-trained NER models for multiple languages, with easy-to-use APIs and excellent performance.

NLTK: The Natural Language Toolkit provides basic NER functionality and serves as a good starting point for learning and experimentation.

Stanford NER: A Java-based NER system offering robust performance and multiple pre-trained models.

Flair: A modern NLP framework featuring state-of-the-art contextual string embeddings and transformer-based models.

Hugging Face Transformers: Provides access to numerous pre-trained transformer models for NER, including BERT, RoBERTa, and domain-specific variants.

Commercial Solutions

Google Cloud Natural Language API: Offers entity recognition as part of a comprehensive NLP service with support for multiple languages and entity types.

Amazon Comprehend: Provides NER capabilities along with other text analysis features, with options for custom entity recognition.

Microsoft Azure Text Analytics: Includes named entity recognition with support for various entity categories and languages.

IBM Watson Natural Language Understanding: Offers entity recognition and analysis capabilities as part of a broader NLP platform.

Evaluation Datasets

CoNLL-2003: A widely-used benchmark dataset for English and German NER, featuring news articles with person, location, organization, and miscellaneous entities.

OntoNotes 5.0: A large-scale multilingual dataset covering multiple languages and domains with rich entity annotations.

WikiNER: An automatically annotated dataset derived from Wikipedia, covering multiple languages and entity types.

Best Practices and Implementation Tips

Data Preparation

Quality training data is crucial for NER success. Key considerations include:

- Consistent annotation guidelines: Ensure annotators follow clear, consistent rules

- Sufficient data volume: Aim for thousands of annotated examples per entity type

- Balanced representation: Include diverse examples across different contexts and domains

- Quality control: Implement inter-annotator agreement measures and quality checks

Model Selection and Training

Choose appropriate models based on your requirements:

- For high accuracy: Use transformer-based models like BERT or its variants

- For speed and efficiency: Consider lighter models like spaCy’s statistical models

- For custom domains: Plan for domain adaptation and fine-tuning strategies

- For multilingual needs: Select models trained on relevant languages

Handling Imbalanced Data

NER datasets often suffer from class imbalance, with some entity types being much rarer than others. Address this through:

- Weighted loss functions: Assign higher weights to rare entity types

- Data augmentation: Generate additional examples for underrepresented classes

- Ensemble methods: Combine multiple models to improve rare class detection

Post-processing and Validation

Implement robust post-processing steps:

- Consistency checks: Ensure entities follow expected patterns

- Gazetteer validation: Cross-reference against known entity lists

- Confidence thresholding: Filter out low-confidence predictions

- Business rule application: Apply domain-specific constraints

Future Directions and Trends

Few-Shot and Zero-Shot Learning

Research increasingly focuses on reducing annotation requirements through:

- Few-shot learning: Training effective models with minimal labeled examples

- Zero-shot learning: Recognizing entity types never seen during training

- Transfer learning: Leveraging knowledge from related tasks and domains

Multilingual and Cross-lingual Models

Development of truly multilingual NER systems that can:

- Handle code-switching and mixed-language text

- Transfer knowledge across languages

- Work effectively with low-resource languages

Integration with Knowledge Graphs

Combining NER with structured knowledge to:

- Improve entity disambiguation

- Enable more sophisticated entity linking

- Support complex reasoning about entities and their relationships

Continual Learning

Developing systems that can:

- Adapt to new entity types without forgetting existing ones

- Learn from streaming data in real-time

- Handle concept drift and evolving language patterns

Conclusion

Named Entity Recognition remains a cornerstone technology in natural language processing, enabling machines to understand and extract structured information from unstructured text. As language models become more sophisticated and applications more diverse, NER continues to evolve, incorporating new techniques and addressing emerging challenges.

The field has progressed from simple rule-based systems to sophisticated deep learning models capable of understanding context and handling ambiguity. Modern transformer-based approaches have achieved remarkable performance, while ongoing research focuses on reducing annotation requirements, improving multilingual capabilities, and integrating with broader knowledge systems.

For practitioners looking to implement NER solutions, the abundance of open-source tools and pre-trained models makes it easier than ever to get started. However, success still requires careful attention to data quality, model selection, and domain-specific adaptation. As the field continues to advance, we can expect even more powerful and flexible NER systems that better understand the nuances of human language and the entities that populate our world.

Whether used for information extraction, search enhancement, content analysis, or as a foundation for more complex NLP tasks, Named Entity Recognition continues to play a vital role in helping machines understand and process human language at scale.

Discover more from SkillWisor

Subscribe to get the latest posts sent to your email.