In natural language processing (NLP), text preprocessing is a crucial step that can significantly affect model performance. Two fundamental techniques that practitioners often confuse are stemming and lemmatization. Both reduce words to a base form, but they do so in fundamentally different ways. Understanding when to use each can make the difference between a mediocre NLP system and an exceptional one.

What is Stemming?

Stemming is a rule-based process that removes suffixes from words to reduce them to their root form, called a “stem.” Think of it as a crude but fast method of chopping off word endings based on predefined rules. The stem doesn’t necessarily have to be a valid word in the language—it’s simply the remaining portion after the suffix removal process.

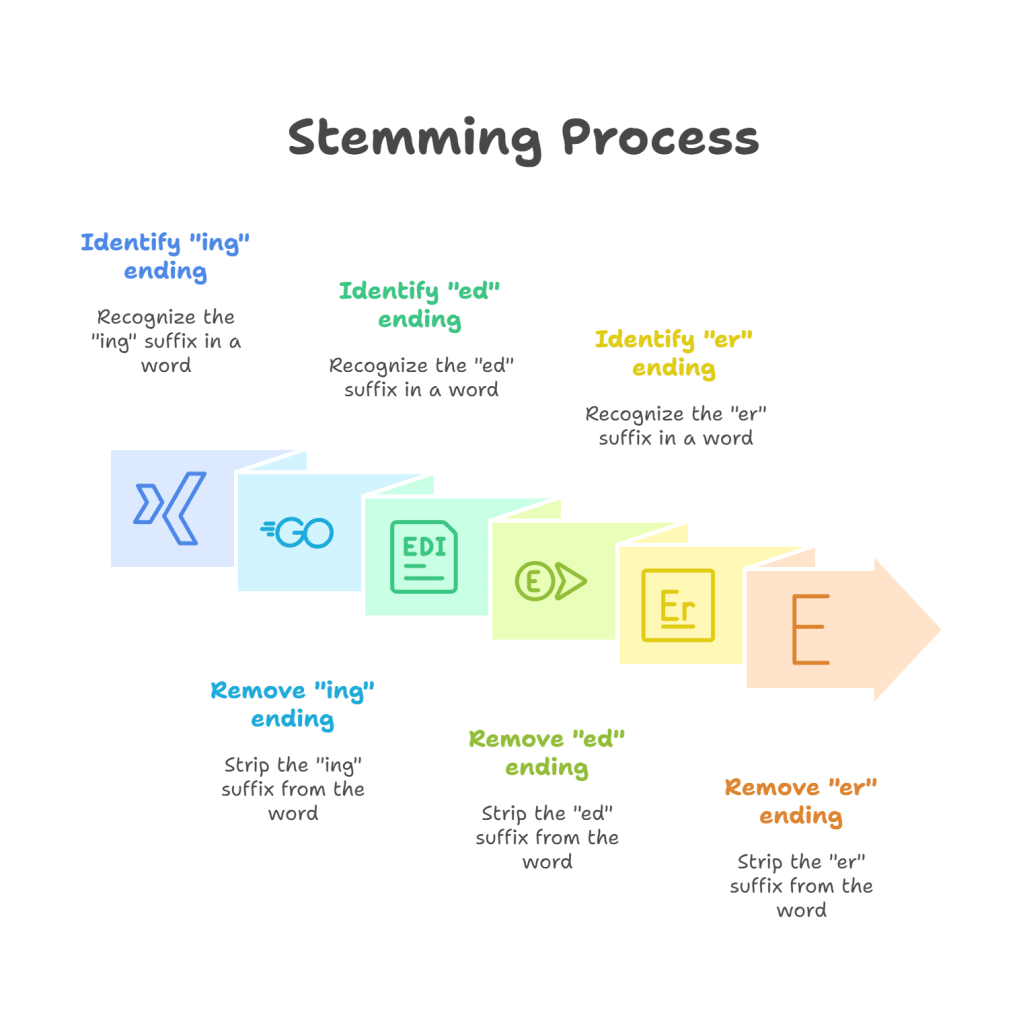

How Stemming Works

Stemming algorithms apply a series of transformation rules to strip common suffixes. The most popular stemming algorithm, the Porter Stemmer, uses rules like:

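- Remove “ing” endings: “running” → “run” (the doubled “n” left after stripping is reduced to a single letter)

- Remove “ed” endings: “walked” → “walk”

- Remove “er” endings only when the remaining stem is long enough: “computer” → “comput,” but “better” stays “better”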
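To make the idea of ordered suffix rules concrete, here is a minimal sketch of a suffix-stripping stemmer. It is a toy, not the Porter algorithm: the rule list, the `MIN_BASE_LEN` guard, and the `toy_stem` name are all assumptions made up for this illustration.

```python
# Toy suffix-stripping stemmer: an illustration only, NOT the Porter algorithm.
# Each rule is (suffix, replacement); the first rule that applies wins.
RULES = [
    ("ies", "i"),  # "studies" -> "studi"
    ("ing", ""),   # "running" -> "runn", later undoubled to "run"
    ("ed", ""),    # "walked"  -> "walk"
    ("s", ""),     # "dogs"    -> "dog"
]
MIN_BASE_LEN = 2                  # hypothetical guard: leaves "is", "bed", "sing" alone
NO_UNDOUBLE = set("aeioulsz")     # keep "ll", "ss", "zz" as in "fall", "miss"


def toy_stem(word: str) -> str:
    """Strip the first matching suffix, provided enough of the word remains."""
    word = word.lower()
    for suffix, replacement in RULES:
        if not word.endswith(suffix):
            continue
        base = word[: -len(suffix)]
        if len(base) < MIN_BASE_LEN:
            continue
        # After "ing"/"ed", collapse a doubled final consonant ("runn" -> "run").
        if (
            suffix in ("ing", "ed")
            and base[-1] == base[-2]
            and base[-1] not in NO_UNDOUBLE
        ):
            base = base[:-1]
        return base + replacement
    return word


for w in ["running", "walked", "studies", "flies", "dogs", "better"]:
    print(w, "->", toy_stem(w))
```

Note that the function never consults a dictionary, which is why outputs such as “studi” and “fli” are not real words. In practice you would use a tested implementation from a library rather than hand-written rules.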

Popular Stemming Algorithms

Porter Stemmer: The most widely used algorithm, known for its simplicity and speed. It applies about 60 rules in five sequential steps.

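Snowball Stemmer: A framework, created by Martin Porter himself, for writing stemming algorithms. Its English stemmer (often called Porter2) refines the original Porter rules, and Snowball stemmers exist for many other languages.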

Lancaster Stemmer: More aggressive than Porter, often producing shorter stems but with higher error rates.

Stemming Examples

Original Word → Stemmed Form

running → run

flies → fli

dogs → dog

better → better (left unchanged by the Porter Stemmer)

studies → studi

What is Lemmatization?

Lemmatization is a more sophisticated approach that reduces words to their canonical form, known as a “lemma.” Unlike stemming, lemmatization considers the word’s part of speech and meaning, ensuring that the result is always a valid word in the language. This process requires linguistic knowledge and often involves dictionary lookups.

How Lemmatization Works

Lemmatization algorithms analyze the word’s morphological structure and context to determine its base form. This process involves:

- Part-of-speech tagging: Identifying whether a word is a noun, verb, adjective, etc.

- Morphological analysis: Understanding the word’s structure and inflections

- Dictionary lookup: Ensuring the lemma is a valid dictionary word
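The three steps above can be sketched with a tiny dictionary-backed lemmatizer. Everything here is a stand-in chosen for illustration: the `IRREGULAR` table, the `LEXICON` set, the `SUFFIX_RULES`, and the POS labels are hypothetical, and the part-of-speech tag is passed in by the caller rather than predicted by a tagger.

```python
# Toy lemmatizer: a hand-written lexicon stands in for a real dictionary.
IRREGULAR = {
    ("ran", "VERB"): "run",
    ("better", "ADJ"): "good",
    ("mice", "NOUN"): "mouse",
}
LEXICON = {"run", "study", "good", "mouse", "dog", "fly"}

# Candidate (suffix, replacement) rewrites, chosen by part of speech.
SUFFIX_RULES = {
    "VERB": [("ies", "y"), ("ing", ""), ("ed", ""), ("s", "")],
    "NOUN": [("ies", "y"), ("s", "")],
}


def toy_lemmatize(word: str, pos: str) -> str:
    """Return a lemma for (word, pos), accepting only forms found in LEXICON."""
    word = word.lower()
    # 1. Irregular forms are resolved by direct lookup.
    if (word, pos) in IRREGULAR:
        return IRREGULAR[(word, pos)]
    # 2. Morphological analysis: try POS-specific suffix rewrites.
    for suffix, replacement in SUFFIX_RULES.get(pos, []):
        if not word.endswith(suffix):
            continue
        base = word[: -len(suffix)]
        candidates = [base + replacement]
        if len(base) >= 2 and base[-1] == base[-2]:
            candidates.append(base[:-1] + replacement)  # "runn" -> "run"
        # 3. Dictionary lookup: keep a candidate only if it is a known word.
        for candidate in candidates:
            if candidate in LEXICON:
                return candidate
    return word  # unknown words pass through unchanged


print(toy_lemmatize("running", "VERB"))
print(toy_lemmatize("studies", "NOUN"))
print(toy_lemmatize("better", "ADJ"))
```

The dictionary check in step 3 is what separates this from a stemmer: a candidate such as “runn” is rejected because it is not in the lexicon.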

Lemmatization Examples

Original Word → Lemmatized Form

running → run (verb)

ran → run (verb)

better → good (adjective)

studies → study (noun/verb)

mice → mouse (noun)

Key Differences Between Stemming and Lemmatization

Accuracy and Precision

Stemming operates on crude rules and often produces non-words. It may group unrelated words together or fail to group related ones. For example, “studies” becomes “studi,” which isn’t a real word, and the Porter Stemmer reduces both “universe” and “university” to the same stem, “univers.”

Lemmatization produces linguistically correct base forms. “Better” correctly becomes “good,” maintaining semantic meaning and ensuring the result exists in the language.

Speed and Computational Requirements

Stemming is significantly faster because it relies on simple pattern matching and rule application. It requires minimal computational resources and no external dictionaries.

Lemmatization is slower due to its need for part-of-speech tagging, morphological analysis, and dictionary lookups. It requires more memory and processing power.

Context Awareness

Stemming is context-blind. It applies the same rules regardless of the word’s meaning or usage in a sentence.

Lemmatization considers context and part of speech. The same word can have different lemmas depending on its grammatical role.
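A small sketch of that last point: the same surface form maps to different lemmas once the part of speech is known. The `LEMMA_TABLE` and the hand-assigned tags below are hypothetical; a real lemmatizer would consult a full dictionary and take its tags from a part-of-speech tagger.

```python
# Hypothetical POS-keyed lemma table, for illustration only.
LEMMA_TABLE = {
    ("saw", "VERB"): "see",       # "She saw the film."
    ("saw", "NOUN"): "saw",       # "He sharpened the saw."
    ("leaves", "VERB"): "leave",  # "The train leaves at noon."
    ("leaves", "NOUN"): "leaf",   # "The leaves are falling."
}


def lemma_for(word: str, pos: str) -> str:
    """Look up the lemma for a (word, POS) pair; unknown pairs pass through."""
    key = (word.lower(), pos)
    return LEMMA_TABLE.get(key, word.lower())


# Hand-tagged tokens (the tags are assumed here, not predicted).
tagged = [("saw", "VERB"), ("saw", "NOUN"), ("leaves", "VERB"), ("leaves", "NOUN")]
for word, pos in tagged:
    print(f"{word:<7} {pos:<5} -> {lemma_for(word, pos)}")
```

A stemmer receives only the string, so it has to return the same stem for both uses of “saw” and both uses of “leaves.”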

Language Support

Stemming algorithms are typically language-specific but easier to develop for new languages.

Lemmatization requires extensive linguistic resources and is more challenging to implement for less-studied languages.

When to Use Stemming

Information Retrieval Systems

Stemming excels in search engines and document retrieval systems where speed is crucial and minor accuracy losses are acceptable. Users searching for “running” should also find documents containing “run” or “runs.” Whether “runner” is matched as well depends on how aggressive the chosen stemmer is.
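The retrieval idea can be sketched as an inverted index keyed by stems: documents and queries go through the same stemmer, so different inflections meet at the same key. The `simple_stem` function below is a deliberately crude, hypothetical stand-in for a real stemmer, and the sample documents are invented.

```python
from collections import defaultdict


def simple_stem(word: str) -> str:
    """Crude stand-in for a real stemmer: strips "ing" or a final "s"."""
    word = word.lower()
    for suffix in ("ing", "s"):
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            base = word[: -len(suffix)]
            if suffix == "ing" and base[-1] == base[-2]:
                base = base[:-1]  # "runn" -> "run"
            return base
    return word


def build_index(docs: dict) -> dict:
    """Map each stem to the set of document ids that contain it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for token in text.split():
            index[simple_stem(token.strip(".,!?"))].add(doc_id)
    return index


def search(index: dict, query: str) -> list:
    """Stem the query the same way the documents were stemmed."""
    return sorted(index.get(simple_stem(query), set()))


docs = {
    1: "She runs every morning.",
    2: "Running is fun!",
    3: "A quiet evening walk.",
}
index = build_index(docs)
print(search(index, "running"))  # documents mentioning "runs" or "Running"
print(search(index, "walks"))
```

The key design point is symmetry: whatever normalization is applied at indexing time must also be applied to the query, otherwise the keys never match.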

Large-Scale Text Processing

When processing millions of documents, stemming’s speed advantage becomes critical. The computational savings often outweigh the accuracy trade-offs.

Exploratory Data Analysis

For quick text analysis and getting a general sense of your data, stemming provides rapid insights without the overhead of complex linguistic processing.

Resource-Constrained Environments

In mobile applications or embedded systems with limited computational resources, stemming’s lightweight nature makes it the practical choice.

When to Use Lemmatization

Sentiment Analysis

Understanding emotional tone requires precise word meanings. Lemmatization ensures that “better” is properly connected to “good,” maintaining the positive sentiment relationship.

Machine Translation

Translation systems benefit from lemmatization’s linguistic accuracy. Proper base forms help maintain meaning across languages and improve translation quality.

Question Answering Systems

When users ask specific questions, the system needs to understand precise word relationships. Lemmatization’s accuracy helps match questions with relevant answers.

Text Classification

For categorizing documents based on content, lemmatization’s semantic preservation often leads to better classification accuracy.

Language Learning Applications

Educational tools require linguistically correct forms to teach proper language usage. Lemmatization ensures students see valid dictionary words.

Performance Comparison

Speed Benchmarks

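As a rough illustration (actual timings depend heavily on hardware, the library used, and whether part-of-speech tagging is included):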

- Stemming processes 10,000 words in approximately 0.1 seconds

- Lemmatization processes the same number in 2-5 seconds
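Figures like these are easy to check on your own hardware. The sketch below is a minimal timing harness using only the standard library; `time_normalizer` is a hypothetical helper name, and `str.lower` is just a placeholder for the stemmer or lemmatizer function you actually want to measure.

```python
import time


def time_normalizer(normalize, words, repeats=3):
    """Return the best wall-clock time (seconds) over `repeats` full passes."""
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        for word in words:
            normalize(word)
        best = min(best, time.perf_counter() - start)
    return best


# 10,000 tokens built from a small repeated sample.
words = ["running", "studies", "walked", "dogs"] * 2500

# Placeholder normalizer; swap in a real stemmer's or lemmatizer's function.
elapsed = time_normalizer(str.lower, words)
print(f"{len(words)} words in {elapsed:.4f} s")
```

Taking the best of several runs reduces noise from other processes. For a fair comparison, include any setup the technique needs per word (such as tagging) inside the timed function, and exclude one-off costs such as loading a dictionary.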

Accuracy Metrics

Reported figures vary with the language, the algorithm, and the evaluation set, but as a rough guide lemmatization typically produces the correct base form for 85-95% of words, while stemming manages 60-80%.
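One simple way to put a number on “correct base forms” is to compare a normalizer's output against a hand-checked gold list. The sketch below assumes exact string match as the criterion; the `GOLD` entries and the deliberately weak `strip_plural_s` normalizer are made up for illustration.

```python
def base_form_accuracy(normalize, gold):
    """Fraction of words whose normalized form equals the gold base form."""
    if not gold:
        raise ValueError("gold standard must not be empty")
    correct = sum(1 for word, base in gold.items() if normalize(word) == base)
    return correct / len(gold)


# Tiny hand-made gold standard: surface form -> expected base form.
GOLD = {"running": "run", "studies": "study", "dogs": "dog", "mice": "mouse"}


def strip_plural_s(word):
    """Deliberately weak normalizer: only removes a trailing "s"."""
    return word[:-1] if word.endswith("s") else word


print(base_form_accuracy(strip_plural_s, GOLD))  # only "dogs" is handled correctly
```

Exact match against dictionary forms is a strict test for stemmers, since a stem like “studi” counts as wrong even though it still groups “study” and “studies” together. For retrieval tasks, measuring whether related words land in the same group can be more informative.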

Hybrid Approaches

Some systems combine both techniques to balance speed and accuracy:

Cascaded Processing

Apply fast stemming first, then use lemmatization only for critical words or uncertain cases.
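A minimal sketch of such a cascade, under stated assumptions: a word is sent to the lemmatizer if it is on a `critical` list or if its stem is not in a known `vocabulary` (used here as a stand-in for “uncertain”). The function names, word lists, and toy stemmer and lemmatizer are all hypothetical.

```python
def cheap_stem(word):
    """Toy stemmer: drops a trailing "s" from words longer than three letters."""
    return word[:-1] if word.endswith("s") and len(word) > 3 else word


# Toy lemmatizer backed by a small lookup table (stand-in for a real one).
LEMMAS = {"better": "good", "mice": "mouse", "studies": "study"}


def slow_lemmatize(word):
    return LEMMAS.get(word, word)


def cascaded_normalize(word, stem_fn, lemma_fn, critical, vocabulary):
    """Stem first; fall back to lemmatization for critical or uncertain words."""
    word = word.lower()
    stem = stem_fn(word)
    if word in critical or stem not in vocabulary:
        return lemma_fn(word)
    return stem


CRITICAL = {"better"}               # words where precision matters most
VOCABULARY = {"dog", "cat", "run"}  # stems we trust as-is

for w in ["dogs", "better", "studies", "mice"]:
    print(w, "->", cascaded_normalize(w, cheap_stem, slow_lemmatize, CRITICAL, VOCABULARY))
```

The slower lemmatizer runs only when the cheap path is not trusted, so the overall cost depends on how many words fall outside the vocabulary.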

Domain-Specific Rules

Use stemming for general vocabulary and lemmatization for domain-specific terms that require precision.

Adaptive Systems

Switch between techniques based on real-time performance requirements and accuracy needs.

Implementation Considerations

Popular Libraries

- Python: NLTK, spaCy, TextBlob

- Java: Stanford CoreNLP, OpenNLP

- R: tm, textstem

Memory Requirements

Stemming requires minimal memory (a few KB for its rules), while lemmatization needs substantially more for dictionaries and models (often 10-100+ MB).

Preprocessing Pipeline Integration

Consider how each technique fits into your broader text processing pipeline. Lemmatization works better after part-of-speech tagging, while stemming can be applied independently.

Making the Right Choice

The decision between stemming and lemmatization ultimately depends on your specific use case:

Choose Stemming When:

- Speed is your primary concern

- Working with large-scale data

- Building search or retrieval systems

- Operating under resource constraints

- Conducting exploratory analysis

Choose Lemmatization When:

- Accuracy is paramount

- Working on semantic analysis tasks

- Building language learning tools

- Developing translation systems

- Quality matters more than speed

Consider Hybrid Approaches When:

- You need both speed and accuracy

- Different parts of your system have different requirements

- Working with mixed content types

Conclusion

Both stemming and lemmatization have their place in the NLP toolkit. Stemming offers speed and simplicity, making it ideal for large-scale processing and search applications. Lemmatization provides accuracy and linguistic correctness, essential for applications requiring semantic understanding.

The key is understanding your requirements: if you need quick processing of large volumes of text and can tolerate some imprecision, stemming is your friend. If you need precise semantic analysis and can afford the computational cost, lemmatization is the way to go.

As NLP continues to evolve, we’re seeing more sophisticated approaches that combine the benefits of both techniques. The future likely holds even more nuanced methods that can dynamically choose the best approach based on context, but for now, understanding when and how to use these fundamental techniques remains crucial for any NLP practitioner.

Remember, the best choice is the one that aligns with your specific goals, constraints, and quality requirements. Start with understanding your use case, then let that guide your decision between these powerful text normalization techniques.
