The mobile technology landscape is undergoing a fundamental transformation as artificial intelligence capabilities migrate from distant cloud servers to the devices in our pockets. This shift toward edge AI is more than a technological advance: it changes where AI computation happens, promising stronger privacy, lower latency, and intelligent features that keep working even without a network connection.

Understanding Edge AI: The Foundation of Mobile Intelligence

Edge AI refers to artificial intelligence processing that occurs locally on a device rather than relying on cloud-based servers. This approach fundamentally changes how AI interacts with users by bringing computational intelligence directly to the point of data generation. Unlike traditional cloud AI models that require constant internet connectivity and involve data transmission to remote servers, edge AI operates independently, processing information entirely within the device.

The significance of this shift extends beyond convenience. Edge AI addresses concerns about data privacy, network dependency, and response times that have limited the practical applications of AI in mobile environments. When data is processed locally, sensitive personal information does not have to leave the device, and responses no longer wait on a round trip to a remote server.

Technical Architecture of Edge AI in Mobile Devices

Modern edge AI implementation relies on sophisticated hardware architectures specifically designed to handle the computational demands of artificial intelligence workloads. The foundation of this capability lies in specialized processing units that work in concert with traditional mobile processors.

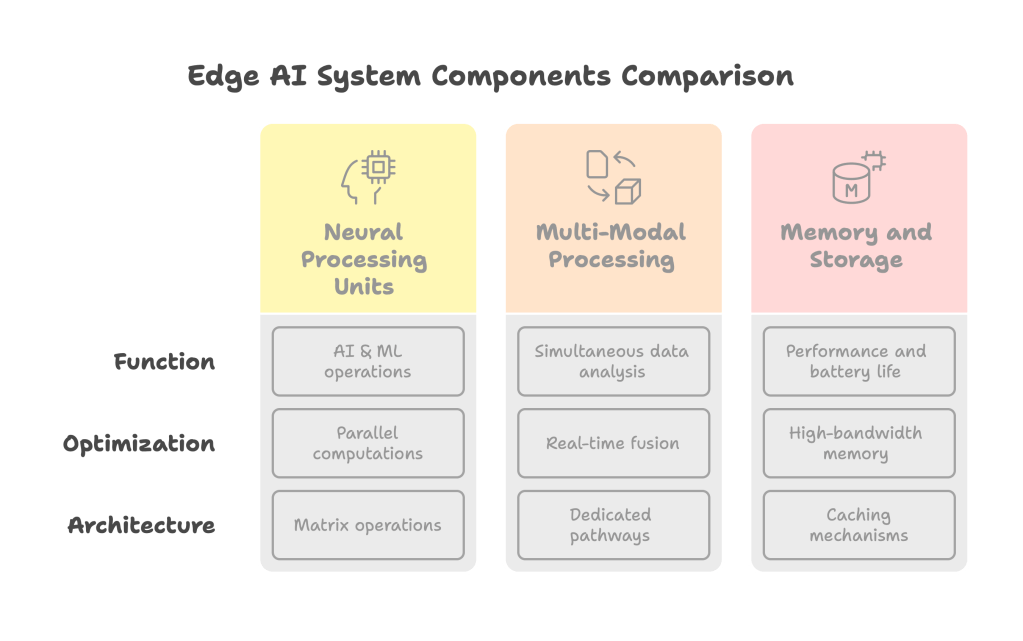

Neural Processing Units (NPUs)

At the core of edge AI capabilities are Neural Processing Units (NPUs): specialized processors designed for AI and machine learning workloads and usually integrated into the phone's system-on-chip (SoC) alongside the CPU and GPU. They are optimized for the parallel computations required by neural networks and offer significantly better performance per watt than general-purpose processors on AI tasks.

NPUs are built around matrix operations, the mathematical foundation of neural network computations. Whereas CPUs are designed for general-purpose, largely sequential control flow, NPUs devote much of their silicon to arrays of multiply-accumulate units that perform thousands of simple operations in parallel, which suits the pattern recognition and data processing tasks that define modern AI applications.
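
To make the matrix-operation point concrete, the sketch below computes one fully connected layer, y = Wx + b, in plain Python. It runs on an ordinary CPU and is not NPU code; it only shows the shape of the work: every output element is an independent chain of multiply-accumulates (MACs), which is exactly what an NPU can spread across many units at once. The function names are illustrative.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def dense_layer(weights: Matrix, inputs: Vector, bias: Vector) -> Vector:
    """Compute y = W x + b, the core operation of a fully connected layer.

    Each output element depends only on one row of W, so all rows could be
    computed at the same time; an NPU's MAC array exploits that independence.
    """
    outputs = []
    for row, b in zip(weights, bias):
        acc = b
        for w, x in zip(row, inputs):
            acc += w * x  # one multiply-accumulate (MAC)
        outputs.append(acc)
    return outputs


def mac_count(out_features: int, in_features: int) -> int:
    """Number of MACs one dense layer needs per input vector."""
    return out_features * in_features


W = [[1.0, 2.0], [3.0, 4.0], [0.5, -1.0]]
x = [1.0, 1.0]
b = [0.0, 0.0, 1.0]
print(dense_layer(W, x, b))   # [3.0, 7.0, 0.5]
print(mac_count(4096, 4096))  # 16777216
```

A single 4096 × 4096 layer already needs about 16.8 million MACs per input, which is why dedicated parallel hardware matters for running such models at interactive speed.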

Multi-Modal Processing Capabilities

Advanced edge AI systems support multi-modal processing, enabling simultaneous analysis of different data types including audio, visual, and textual information. This capability allows for sophisticated AI applications that can understand context across multiple input formats, creating more intuitive and natural user interactions.

In a typical implementation, each data type is handled by its own processing pathway (for example, a speech model for audio and a vision model for images), and a coordination stage fuses their outputs in real time. This architecture supports applications like real-time translation with visual context, voice-activated image editing, and contextual search that considers multiple input modalities simultaneously.
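
One simple way to combine modalities is late fusion: each modality's model produces its own scores and a final step merges them. The sketch below uses a weighted average; the modality names, labels, and weights are hypothetical, and it is not how any particular phone does it. Many current multimodal models instead fuse information earlier, inside the network.

```python
from typing import Dict


def late_fusion(scores_by_modality: Dict[str, Dict[str, float]],
                weights: Dict[str, float]) -> Dict[str, float]:
    """Combine per-modality class scores with a weighted average.

    scores_by_modality maps a modality name (e.g. "audio", "image") to a
    dict of label -> probability from that modality's own model.
    Modalities with no positive weight are ignored.
    """
    fused: Dict[str, float] = {}
    total_weight = 0.0
    for modality, scores in scores_by_modality.items():
        w = weights.get(modality, 0.0)
        if w <= 0.0:
            continue
        total_weight += w
        for label, p in scores.items():
            fused[label] = fused.get(label, 0.0) + w * p
    if total_weight == 0.0:
        return {}
    return {label: s / total_weight for label, s in fused.items()}


# Hypothetical outputs: the user points the camera and asks "what is this?"
scores = {
    "audio": {"menu": 0.6, "sign": 0.4},
    "image": {"menu": 0.9, "sign": 0.1},
}
fused = late_fusion(scores, {"audio": 1.0, "image": 3.0})
print(max(fused, key=fused.get))  # menu
```

Weighting the image model more heavily here reflects an assumption that it is the more reliable source for this kind of query; a real system would learn or tune such weights.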

Memory and Storage Optimization

Edge AI processing requires careful optimization of memory usage and data flow to maintain performance while preserving battery life. Modern implementations combine high-bandwidth, low-power memory with on-chip buffers and caches that minimize data movement between processing units, reducing both latency and power consumption.

Advanced caching mechanisms ensure that frequently used AI models remain readily accessible, while dynamic loading systems manage memory allocation based on active applications and user patterns. This approach enables complex AI operations without overwhelming device resources or significantly impacting overall system performance.
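
A least-recently-used (LRU) cache with a memory budget is one straightforward way to combine caching with dynamic loading. In this sketch, assuming hypothetical model names, sizes, and a stand-in `loader` function, a model is loaded on first use, and the least recently used models are evicted whenever a new one would exceed the budget.

```python
from collections import OrderedDict
from typing import Callable, Dict, List


class ModelCache:
    """LRU cache that keeps loaded models within a fixed memory budget (MB).

    `loader` is a hypothetical function that loads a model by name;
    `sizes_mb` gives each model's memory footprint.
    """

    def __init__(self, budget_mb: int, sizes_mb: Dict[str, int],
                 loader: Callable[[str], object]) -> None:
        self.budget_mb = budget_mb
        self.sizes_mb = sizes_mb
        self.loader = loader
        self.used_mb = 0
        self._models: "OrderedDict[str, object]" = OrderedDict()

    def get(self, name: str) -> object:
        if name in self._models:
            self._models.move_to_end(name)  # mark as most recently used
            return self._models[name]
        size = self.sizes_mb[name]
        if size > self.budget_mb:
            raise MemoryError(f"{name} ({size} MB) exceeds the cache budget")
        # Evict least recently used models until the new one fits.
        while self.used_mb + size > self.budget_mb:
            evicted, _ = self._models.popitem(last=False)
            self.used_mb -= self.sizes_mb[evicted]
        model = self.loader(name)
        self._models[name] = model
        self.used_mb += size
        return model

    def loaded(self) -> List[str]:
        """Names of loaded models, least recently used first."""
        return list(self._models)


cache = ModelCache(
    budget_mb=500,
    sizes_mb={"translate": 300, "photo": 150, "text": 100},
    loader=lambda name: f"<model {name}>",
)
cache.get("translate")
cache.get("photo")
cache.get("translate")  # refreshes "translate"
cache.get("text")       # would need 550 MB: evicts "photo", the LRU entry
print(cache.loaded())   # ['translate', 'text']
```

LRU is only one eviction policy; a system that knows usage patterns (for example, that the camera model is needed whenever the camera app opens) could prefetch or pin models instead.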

Privacy and Security Advantages of Edge AI

The privacy implications of edge AI represent one of its most compelling advantages. By processing data locally, edge AI eliminates many of the privacy concerns associated with cloud-based AI services. Personal information, conversation data, photos, and behavioral patterns remain entirely within the user’s device, never transmitted to external servers for processing.

This local processing model can simplify compliance with data-protection regulations while giving users granular control over their data. Some edge AI implementations let users specify which operations may use cloud resources and which must stay entirely on-device, making data handling more transparent.
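
A per-feature policy table is one way such a setting could be enforced. The sketch below is hypothetical (the `Policy` values, feature names, and `route` function are illustrative, not any vendor's API); its key design choice is that features without an explicit setting default to on-device only.

```python
from enum import Enum
from typing import Dict


class Policy(Enum):
    ON_DEVICE_ONLY = "on_device_only"
    CLOUD_ALLOWED = "cloud_allowed"


class Target(Enum):
    DEVICE = "device"
    CLOUD = "cloud"


def route(feature: str, needs_cloud_model: bool,
          policies: Dict[str, Policy]) -> Target:
    """Decide where a request runs under user-set, per-feature policies.

    Work that can run locally always stays on the device. Features without
    an explicit setting default to ON_DEVICE_ONLY, so nothing leaves the
    device unless the user has allowed it. Raises PermissionError when a
    request needs the cloud but the feature is restricted to on-device.
    """
    policy = policies.get(feature, Policy.ON_DEVICE_ONLY)
    if not needs_cloud_model:
        return Target.DEVICE
    if policy is Policy.CLOUD_ALLOWED:
        return Target.CLOUD
    raise PermissionError(f"{feature} needs the cloud but is restricted to on-device")


settings = {"summarize": Policy.CLOUD_ALLOWED, "translate": Policy.ON_DEVICE_ONLY}
print(route("translate", False, settings))  # Target.DEVICE
print(route("summarize", True, settings))   # Target.CLOUD
```

Raising an error rather than silently falling back to the cloud means the app must tell the user the feature is unavailable under the current setting.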

Security benefits extend beyond privacy to a reduced attack surface. With less data in transit and fewer external dependencies, edge AI systems present fewer opportunities for interception or unauthorized access. Local processing also keeps AI features available in secure environments where internet connectivity is restricted or unavailable.

Performance Characteristics and Optimization

Edge AI systems must balance computational capability with power efficiency, a challenge that has driven significant innovations in mobile processor design. Modern implementations achieve this balance through several key strategies.

Dynamic power management systems adjust processor performance to the complexity of active AI tasks, preserving battery life during less demanding operations while providing full computational power when needed. Thermal management systems keep temperatures in check during sustained AI processing, aiming for stable performance rather than abrupt throttling.
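
A common form of dynamic power management is dynamic voltage and frequency scaling (DVFS): run each task at the lowest clock that still meets its deadline. The sketch below assumes a hypothetical table of frequency steps and a known cycle count per task; real governors estimate load from hardware counters and also account for voltage and idle states.

```python
from typing import List, Optional


def pick_frequency(cycles: int, deadline_ms: float,
                   freqs_mhz: List[int]) -> Optional[int]:
    """Return the lowest clock (MHz) that finishes `cycles` within the deadline.

    Running just fast enough saves energy because dynamic power grows
    roughly with C * V^2 * f, and lower clocks permit lower voltages.
    Returns None if even the highest frequency misses the deadline.
    """
    for f in sorted(freqs_mhz):
        runtime_ms = cycles / (f * 1_000)  # f MHz = f * 1000 cycles per ms
        if runtime_ms <= deadline_ms:
            return f
    return None


# Hypothetical operating points (MHz) and per-frame workloads (cycles).
steps = [300, 600, 1000, 1400]
print(pick_frequency(9_000_000, 33.0, steps))   # light task -> 300
print(pick_frequency(30_000_000, 33.0, steps))  # heavy task -> 1000
```

With a 33 ms budget (roughly one frame at 30 fps), the light task runs at the lowest step while the heavy one needs 1000 MHz; a task that cannot meet the deadline at any step returns `None`, signaling that the work must be reduced or moved to another unit.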

Intelligent workload distribution algorithms automatically determine the optimal processing unit for each AI task, whether that’s the NPU, GPU, or CPU, based on the specific requirements and current system load. This approach maximizes efficiency while minimizing resource conflicts between AI operations and other device functions.
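
A simple form of workload distribution is to send each operation to the processing unit expected to finish it first, counting work already queued there. The sketch below assumes hypothetical per-operation latency estimates and operation names; real schedulers and ML runtimes use richer cost models and usually partition whole model graphs rather than single operations.

```python
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass
class Unit:
    name: str
    supported_ops: Set[str]
    ms_per_op: Dict[str, float]  # hypothetical latency estimates per op
    queued_ms: float = 0.0       # work already waiting on this unit


def assign(op: str, units: List[Unit]) -> Unit:
    """Pick the unit with the earliest estimated finish time for `op`.

    Units that cannot run the op are skipped; raises ValueError if none can.
    The chosen unit's queue grows by the op's estimated cost.
    """
    candidates = [u for u in units if op in u.supported_ops]
    best = min(candidates, key=lambda u: u.queued_ms + u.ms_per_op[op])
    best.queued_ms += best.ms_per_op[op]
    return best


npu = Unit("NPU", {"conv", "matmul"}, {"conv": 2.0, "matmul": 1.0})
gpu = Unit("GPU", {"conv", "matmul", "resize"},
           {"conv": 5.0, "matmul": 4.0, "resize": 1.0})
cpu = Unit("CPU", {"conv", "matmul", "resize", "nms"},
           {"conv": 20.0, "matmul": 15.0, "resize": 3.0, "nms": 2.0})
units = [npu, gpu, cpu]

names = [assign(op, units).name for op in ["conv", "conv", "conv", "nms"]]
print(names)  # ['NPU', 'NPU', 'GPU', 'CPU']
```

The third `conv` goes to the GPU because the NPU's queue has grown, and `nms` falls back to the CPU because, in this example, only the CPU supports it.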

Real-World Applications and Use Cases

Edge AI enables a wide range of applications that were previously impractical due to latency, privacy, or connectivity constraints. Real-time language translation, advanced photography features, personalized content recommendations, and intelligent automation all benefit from local processing capabilities.

Professional applications include medical imaging analysis, industrial inspection systems, and augmented reality applications that require immediate response times and strict data privacy. Consumer applications span entertainment, productivity, and communication tools that adapt to user preferences and behavioral patterns without compromising personal data.

Galaxy AI: A Comprehensive Implementation Example

Samsung’s Galaxy AI system exemplifies the practical implementation of advanced edge AI in consumer devices. This comprehensive AI ecosystem demonstrates how sophisticated artificial intelligence can be seamlessly integrated into everyday mobile experiences while maintaining user privacy and delivering exceptional performance.

Galaxy AI encompasses a broad range of intelligent features designed to enhance productivity, creativity, and communication. The system includes real-time language translation supporting over 40 languages, advanced photo editing capabilities, intelligent text assistance, and personalized content recommendations. What distinguishes Galaxy AI is its emphasis on on-device processing, utilizing advanced mobile processors to deliver these capabilities without compromising user privacy or requiring constant internet connectivity.

The implementation prioritizes local processing for sensitive operations while maintaining the flexibility to utilize cloud resources for computationally intensive tasks when users explicitly consent. This hybrid approach ensures optimal performance while respecting user privacy preferences through granular control settings.

Privacy-First Architecture in Galaxy AI

Galaxy AI implements multiple layers of privacy protection that go beyond simple on-device processing. The system includes data minimization protocols that process only necessary information, automatic deletion of temporary files after processing completion, and transparent indicators that inform users when data is being processed locally versus in the cloud.
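
Samsung does not document how these protections are implemented, so the sketch below only illustrates the two generic ideas with hypothetical helpers: `minimize` keeps just the fields a feature needs, and `process_ephemeral` works on a temporary copy that Python's `tempfile.TemporaryDirectory` deletes as soon as processing ends, even if processing fails.

```python
import os
import tempfile
from typing import Callable, Dict, List, Set, TypeVar

T = TypeVar("T")


def minimize(record: Dict[str, object], needed: Set[str]) -> Dict[str, object]:
    """Data minimization: keep only the fields a feature actually needs."""
    return {k: v for k, v in record.items() if k in needed}


def process_ephemeral(data: bytes, process: Callable[[str], T]) -> T:
    """Write `data` to a temporary file, run `process` on its path, clean up.

    TemporaryDirectory removes the directory and its contents when the
    `with` block exits, whether `process` returns normally or raises.
    """
    with tempfile.TemporaryDirectory() as workdir:
        path = os.path.join(workdir, "input.bin")
        with open(path, "wb") as f:
            f.write(data)
        return process(path)


seen_paths: List[str] = []


def word_count(path: str) -> int:
    seen_paths.append(path)  # remember the path so cleanup can be checked
    with open(path, "rb") as f:
        return len(f.read().split())


msg = minimize({"text": "see you at noon", "sender": "Ana", "location": "home"},
               {"text"})
result = process_ephemeral(str(msg["text"]).encode(), word_count)
print(msg)                            # {'text': 'see you at noon'}
print(result)                         # 4
print(os.path.exists(seen_paths[0]))  # False
```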

Users maintain complete control over data handling through comprehensive settings that specify which features can utilize cloud processing and which must remain entirely on-device. This approach provides transparency about data handling while enabling users to make informed decisions about their privacy preferences.

Snapdragon Elite: Powering Next-Generation Edge AI

Qualcomm's Snapdragon 8 Elite Mobile Platform (called the Snapdragon Elite in the rest of this article) represents a significant advancement in mobile AI processing, designed specifically to support sophisticated edge AI applications. The platform, which earned recognition as the 2024 Best Edge AI Processor Product of the Year, introduces substantial improvements in on-device AI performance and efficiency.

Advanced NPU Architecture

The Snapdragon Elite features a dedicated Neural Processing Unit optimized for the computational demands of modern AI applications. This specialized processor delivers high performance per watt, enabling complex AI operations while preserving battery life. The NPU architecture supports advanced neural network models with optimized execution pathways for different AI workloads.

The Qualcomm AI Engine integrated into the Snapdragon Elite enables multi-modal AI operations directly on smartphones, supporting simultaneous processing of voice, text, and image data. This capability enables sophisticated applications like real-time translation with visual context, voice-controlled image editing, and contextual search that considers multiple input types.

Hexagon Processor Technology

Qualcomm's Hexagon architecture, which began as a digital signal processor (DSP) and has evolved into the Hexagon NPU at the heart of the Snapdragon Elite's AI Engine, provides the computational foundation for AI operations on the platform. It delivers the parallel processing needed for real-time AI inference while maintaining energy efficiency, and it is optimized for the mathematical operations neural networks rely on, giving it significant performance advantages for AI workloads.

Scheduling within the Hexagon processor aims to keep resources well utilized across different AI tasks, avoiding bottlenecks while maintaining consistent performance. The architecture supports dynamic scaling, adjusting performance and power consumption in real time to match computational requirements.

Integration and Optimization

The Snapdragon Elite demonstrates sophisticated integration between different processing units, enabling seamless coordination between the NPU, CPU, GPU, and other specialized processors. This integration is designed to keep AI operations from interfering with other device functions while maximizing overall system efficiency.

Thermal management within the Snapdragon Elite is designed to limit performance throttling during sustained AI operations, keeping processing capability steady even during intensive tasks. Power management aims to keep AI features from significantly affecting battery life, making all-day use of sophisticated AI applications practical.
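
Qualcomm does not publish its thermal policy, but a common generic technique for avoiding abrupt, oscillating throttling is hysteresis: step performance down above one temperature and back up only below a lower one. The sketch uses hypothetical thresholds and performance levels.

```python
class ThermalGovernor:
    """Toy thermal governor with hysteresis.

    Steps the performance level down while the temperature is at or above
    `trip_c` and back up only once it is at or below `clear_c`, so the level
    does not flap around a single threshold.
    """

    def __init__(self, levels: int, trip_c: float, clear_c: float) -> None:
        if clear_c >= trip_c:
            raise ValueError("hysteresis needs clear_c < trip_c")
        self.max_level = levels - 1
        self.level = self.max_level  # start at full performance
        self.trip_c = trip_c
        self.clear_c = clear_c

    def update(self, temp_c: float) -> int:
        """Feed one temperature reading; return the new performance level."""
        if temp_c >= self.trip_c and self.level > 0:
            self.level -= 1
        elif temp_c <= self.clear_c and self.level < self.max_level:
            self.level += 1
        return self.level


gov = ThermalGovernor(levels=4, trip_c=45.0, clear_c=40.0)
readings = [38.0, 46.0, 47.0, 44.0, 42.0, 39.0, 39.0]
print([gov.update(t) for t in readings])  # [3, 2, 1, 1, 1, 2, 3]
```

Between 40 °C and 45 °C the level holds steady instead of flapping, which is what keeps performance predictable under sustained load.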

Industry Impact and Future Implications

The advancement of edge AI capabilities in mobile devices has significant implications for the broader technology industry. With AI smartphones expected to account for over 30% of total shipments by 2025, the emphasis on on-device processing capabilities is becoming a standard requirement for flagship devices.

This trend influences software development practices, with applications increasingly designed to leverage local AI capabilities rather than relying on cloud services. The shift also impacts network infrastructure requirements, as reduced dependency on cloud processing alleviates bandwidth demands while enabling AI functionality in areas with limited connectivity.

Privacy regulations and user awareness are driving demand for local processing capabilities, making edge AI not just a performance advantage but a competitive necessity. Companies that can deliver sophisticated AI capabilities while maintaining strict privacy standards will likely gain significant market advantages.

Technical Challenges and Solutions

Implementing effective edge AI systems requires addressing several technical challenges. Limited computational resources compared to cloud servers necessitate careful optimization of AI models and processing algorithms. Model compression techniques such as quantization and pruning let complex AI models run efficiently within mobile device constraints.
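
The sketch below shows two of these techniques in their simplest textbook form: symmetric per-tensor int8 quantization, which stores each 32-bit float weight as an 8-bit integer plus one shared scale (a 4× size reduction), and magnitude pruning, which zeroes the smallest weights. The weights are made-up values; production toolchains typically add per-channel scales, calibration data, and fine-tuning to recover accuracy.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization: w ~= scale * q, q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Map int8 values back to approximate float weights."""
    return [scale * v for v in q]


def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Zero out the fraction `sparsity` of weights with the smallest magnitude."""
    k = int(len(weights) * sparsity)
    smallest = sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k]
    pruned = list(weights)
    for i in smallest:
        pruned[i] = 0.0
    return pruned


w = [0.82, -0.05, 0.31, -1.27, 0.002, 0.64]
q, scale = quantize_int8(w)
print(q)                        # [82, -5, 31, -127, 0, 64]
print(magnitude_prune(w, 0.5))  # [0.82, 0.0, 0.0, -1.27, 0.0, 0.64]
```

Rounding keeps each reconstructed weight within half a quantization step (`scale / 2`) of the original; the tiny weight 0.002 rounds to zero, which hints at why quantization and pruning are often combined.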

Battery life considerations require sophisticated power management strategies that balance AI performance with energy consumption. Dynamic scaling, intelligent task scheduling, and specialized low-power processing modes enable practical all-day usage of AI features without compromising device functionality.

Memory and storage limitations require innovative approaches to model management and data handling. Advanced caching systems, dynamic model loading, and efficient data structures enable sophisticated AI capabilities within the constrained resources of mobile devices.

The Future of Edge AI in Mobile Computing

The continued advancement of edge AI capabilities will likely transform mobile devices from reactive tools to proactive intelligent assistants. Future developments may include more sophisticated contextual understanding, predictive functionality that anticipates user needs, and seamless integration across device ecosystems.

Advancements in processor technology will enable even more complex AI operations on mobile devices, potentially matching or exceeding current cloud-based capabilities while maintaining the privacy and performance advantages of local processing. The integration of specialized AI processors with traditional mobile computing architectures will continue to evolve, creating new possibilities for mobile intelligence.

The success of implementations like Galaxy AI powered by Snapdragon Elite processors demonstrates the viability and advantages of edge AI approaches. As this technology matures, we can expect edge AI to become the standard for mobile artificial intelligence, delivering sophisticated capabilities while respecting user privacy and maintaining optimal performance.

Conclusion

The convergence of advanced mobile processors and sophisticated AI algorithms represents a fundamental shift in mobile computing paradigms. Edge AI, exemplified by implementations like Galaxy AI running on Snapdragon Elite processors, demonstrates that powerful artificial intelligence can run largely on mobile devices without compromising functionality, privacy, or user experience.

This transformation addresses longstanding concerns about AI privacy and performance while enabling new categories of mobile applications that were previously impractical. As the technology continues to mature, edge AI will likely become the foundation for the next generation of intelligent mobile devices, delivering unprecedented capabilities while maintaining the privacy and performance standards that users demand.

The success of current edge AI implementations provides a roadmap for the future of mobile intelligence, where sophisticated AI capabilities enhance every aspect of device interaction while respecting user privacy and delivering optimal performance. This evolution represents not just a technological advancement, but a fundamental reimagining of how artificial intelligence can enhance human capabilities through mobile technology.

Discover more from SkillWisor
