Artificial intelligence is transforming how we interact with technology, from the smartphones in our pockets to the smart cars on our roads. While most people are familiar with cloud-based AI services like ChatGPT or Google Assistant, there’s another form of AI that’s quietly revolutionizing our daily lives: Edge AI.

Edge AI represents a fundamental shift in how artificial intelligence operates, bringing computational power closer to where data is generated and decisions need to be made. Instead of sending information to distant data centers for processing, Edge AI enables devices to think and act locally, creating faster, more private, and more reliable AI experiences.

Understanding Edge AI: The Basics

Edge AI, also known as AI at the edge, refers to artificial intelligence algorithms that are processed locally on a hardware device rather than in a centralized cloud computing facility or data center. The “edge” in Edge AI refers to the edge of the network, where devices like smartphones, tablets, IoT sensors, autonomous vehicles, and smart cameras operate.

To understand this concept better, imagine traditional cloud AI as a conversation with someone far away via telephone. You speak into the phone, your voice travels through various networks to reach the other person, they think about your question, and then send their response back through the same networks. This process, while effective, introduces delays and requires a stable connection.

Edge AI, on the other hand, is like having that intelligent conversation partner sitting right next to you. The processing happens locally, responses are immediate, and you don’t need to worry about network connectivity or privacy concerns associated with sending your data elsewhere.

The Evolution from Cloud to Edge

The journey toward Edge AI began with the limitations of cloud-based AI systems. Traditional cloud AI architecture follows a simple pattern: data is collected by edge devices, transmitted to cloud servers for processing, and results are sent back to the device. While this approach has enabled many AI breakthroughs, it comes with significant drawbacks.

Network latency is perhaps the most obvious limitation. When an autonomous vehicle needs to detect an obstacle, every millisecond counts. Sending camera data to a cloud server, processing it, and receiving instructions back could mean the difference between a safe stop and a collision. Similarly, when a factory robot needs to adjust its movements based on visual feedback, cloud processing delays could result in damaged products or safety hazards.
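To put the latency argument in numbers, the short Python sketch below computes how far a vehicle travels while it waits for a decision. The 10 ms on-device and 100 ms cloud round-trip figures are illustrative assumptions, not measurements. Real latencies vary widely with hardware and network conditions.

```python
def distance_during_delay_m(speed_kmh: float, delay_ms: float) -> float:
    """Metres a vehicle covers before a delayed decision arrives."""
    speed_m_per_s = speed_kmh * 1000 / 3600  # convert km/h to m/s
    return speed_m_per_s * delay_ms / 1000   # convert ms to s

# Assumed latencies for illustration only.
scenarios = {"on-device (assumed 10 ms)": 10, "cloud round trip (assumed 100 ms)": 100}
for label, delay in scenarios.items():
    print(f"{label}: {distance_during_delay_m(100, delay):.2f} m at 100 km/h")
```

At 100 km/h the vehicle covers roughly 0.28 m in the first case and 2.78 m in the second, before any braking even begins.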

Bandwidth constraints present another challenge. As the number of connected devices grows rapidly, the amount of data being transmitted to cloud servers increases dramatically. High-resolution cameras, multiple sensors, and continuous monitoring generate massive data streams that can overwhelm network infrastructure and become prohibitively expensive to transmit.
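A back-of-the-envelope calculation shows why raw sensor streams strain networks. The sketch below assumes a hypothetical 1080p camera producing uncompressed 8-bit RGB frames (3 bytes per pixel) at 30 frames per second. Real cameras usually compress their output, which lowers these figures substantially.

```python
def raw_video_bitrate_bps(width: int, height: int, bytes_per_pixel: int, fps: int) -> int:
    """Bits per second for one uncompressed video stream."""
    return width * height * bytes_per_pixel * fps * 8

SECONDS_PER_DAY = 24 * 60 * 60

# Hypothetical camera: 1920x1080, 3 bytes per pixel, 30 fps, no compression.
bps = raw_video_bitrate_bps(1920, 1080, 3, 30)
bytes_per_day = bps / 8 * SECONDS_PER_DAY
print(f"{bps / 1e9:.2f} Gbit/s per camera")                  # -> 1.49 Gbit/s per camera
print(f"{bytes_per_day / 1e12:.1f} TB per camera per day")   # -> 16.1 TB per camera per day
```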

Privacy and security concerns have also driven the shift toward edge processing. Consumers and businesses are increasingly uncomfortable with sending sensitive data to third-party cloud providers. Medical devices processing patient information, security cameras monitoring private spaces, and personal assistants listening to intimate conversations all benefit from local processing that keeps sensitive data on-device.

How Edge AI Works: The Technical Foundation

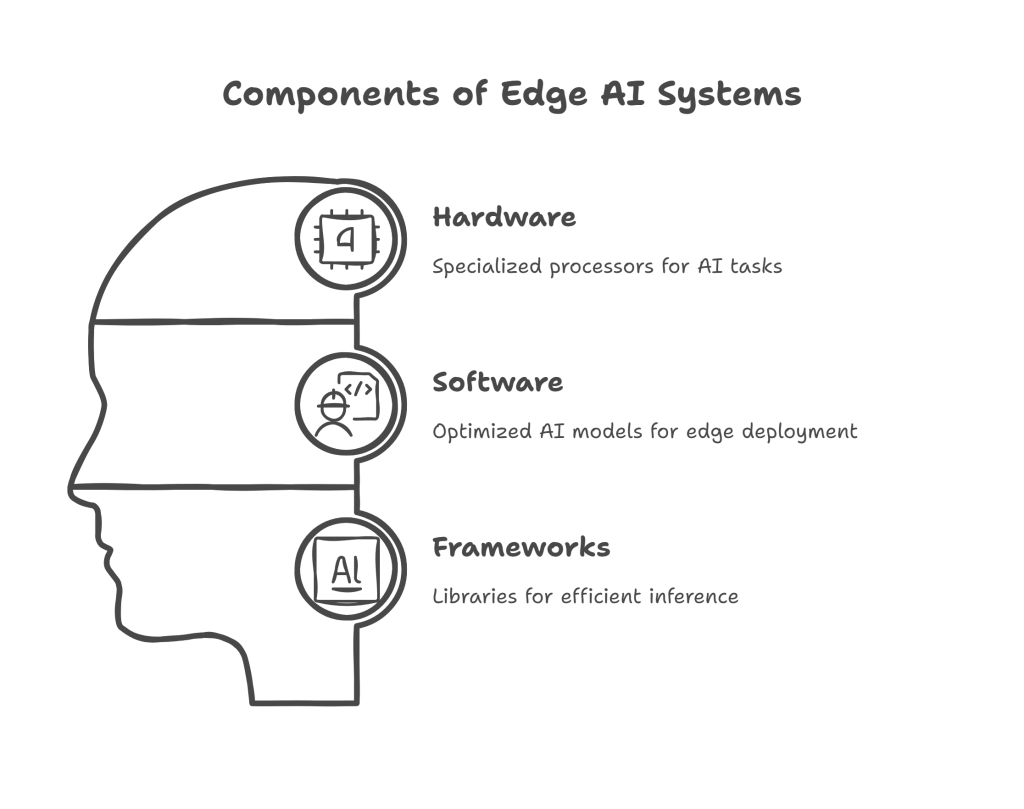

Edge AI systems combine specialized hardware and optimized software to perform artificial intelligence tasks locally. The hardware component typically consists of processors designed specifically for AI workloads, such as Graphics Processing Units (GPUs), Tensor Processing Units (TPUs), or dedicated AI chips like Neural Processing Units (NPUs).

These processors are optimized for the parallel computations required by neural networks and machine learning algorithms. Unlike traditional CPUs that excel at sequential processing, AI-specific processors can handle thousands of simple operations simultaneously, making them ideal for tasks like image recognition, natural language processing, and pattern detection.

The software component involves AI models that have been specifically optimized for edge deployment. This optimization process, broadly called model compression, reduces the size and computational requirements of AI models while aiming to preserve most of their accuracy. Common techniques include quantization (reducing the numerical precision of weights and calculations), pruning unnecessary connections in neural networks, and knowledge distillation, which trains smaller models to mimic larger ones.

Edge AI devices also employ specialized frameworks and libraries designed for efficient inference on resource-constrained hardware. These frameworks, such as TensorFlow Lite, ONNX Runtime, and OpenVINO, provide optimized implementations of common AI operations and enable developers to deploy models across different hardware platforms.

Key Advantages of Edge AI

The benefits of Edge AI extend far beyond simply avoiding cloud dependency. These advantages are reshaping industries and enabling entirely new categories of applications.

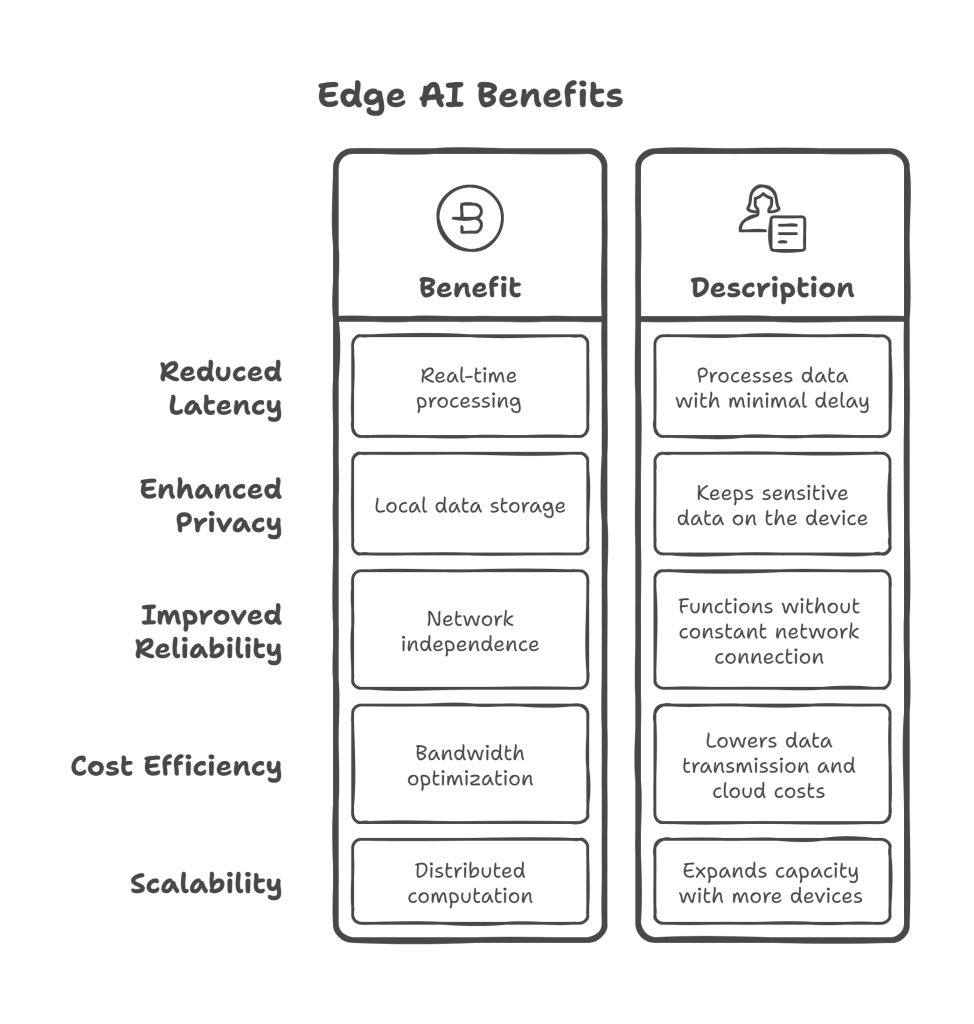

Reduced Latency and Real-Time Processing

Perhaps the most significant advantage of Edge AI is its ability to process information in real time. By eliminating the round trip to cloud servers, Edge AI avoids the network delay, often tens to hundreds of milliseconds and unpredictable on congested or weak connections, that cloud processing adds to every request. This capability is crucial for applications requiring immediate responses, such as autonomous vehicles detecting pedestrians, industrial robots adjusting to production line changes, or medical devices monitoring patient vital signs.

Enhanced Privacy and Security

Edge AI keeps sensitive data local to the device, substantially reducing privacy risks. When personal information, biometric data, and confidential business information are processed on-device, they do not need to be transmitted to or stored with third-party cloud services, which removes the exposure associated with those steps. This local processing approach can also simplify compliance with increasingly strict data protection regulations like GDPR and CCPA.

Improved Reliability and Reduced Dependency

Devices with Edge AI capabilities can continue functioning even when network connectivity is poor or unavailable. This independence is particularly valuable in remote locations, during network outages, or in mission-critical applications where reliability is paramount. Emergency response systems, industrial control systems, and medical devices all benefit from this self-sufficiency.

Cost Efficiency and Bandwidth Optimization

By processing data locally, Edge AI reduces the need for expensive data transmission and cloud computing resources. Organizations can significantly lower their ongoing operational costs while avoiding the bandwidth limitations that constrain cloud-based solutions. This is particularly beneficial for applications generating large amounts of data, such as video surveillance systems or industrial IoT sensors.

Scalability and Performance

Edge AI enables better scalability by distributing computational load across multiple devices rather than centralizing it in cloud data centers. As the number of devices increases, the total computational capacity of the system grows proportionally, avoiding the bottlenecks associated with centralized processing.

Real-World Applications and Use Cases

Edge AI is already transforming numerous industries and applications, often in ways that users don’t immediately recognize.

Smartphones and Consumer Electronics

Modern smartphones extensively use Edge AI for features like facial recognition, voice assistants, camera enhancements, and predictive text. When you unlock your phone with Face ID or use Google Assistant offline, you’re experiencing Edge AI in action. Smart speakers, fitness trackers, and wireless earbuds also incorporate Edge AI to provide responsive, personalized experiences without constant internet connectivity.

Autonomous Vehicles and Transportation

Self-driving cars represent one of the most demanding Edge AI applications. These vehicles must process inputs from multiple cameras, lidar sensors, and radar systems to make split-second decisions about steering, braking, and acceleration. Edge AI enables these vehicles to detect obstacles, recognize traffic signs, predict pedestrian behavior, and navigate complex environments without relying on cloud connectivity.

Industrial IoT and Manufacturing

Smart factories use Edge AI for predictive maintenance, quality control, and process optimization. AI-enabled sensors can detect equipment anomalies before failures occur, computer vision systems can identify product defects in real-time, and intelligent robots can adapt to variations in production requirements. This local processing ensures that critical industrial operations continue functioning even during network disruptions.

Healthcare and Medical Devices

Medical applications of Edge AI include wearable devices that monitor patient vital signs, diagnostic equipment that analyzes medical images, and surgical robots that provide real-time feedback to surgeons. The privacy and reliability benefits of Edge AI are particularly important in healthcare, where patient data protection and system availability can be matters of life and death.

Smart Cities and Infrastructure

Urban environments increasingly rely on Edge AI for traffic management, public safety, and resource optimization. Intelligent traffic lights adjust timing based on real-time vehicle flow, smart parking systems guide drivers to available spots, and security cameras can detect unusual activities or safety hazards locally, without streaming footage to cloud servers where it could raise privacy concerns.

Retail and Customer Experience

Retail environments use Edge AI for inventory management, customer analytics, and personalized shopping experiences. Smart shelves can detect when products need restocking, checkout systems can recognize items without traditional barcodes, and digital displays can adapt content based on customer demographics while preserving individual privacy.

Challenges and Limitations

Despite its many advantages, Edge AI faces several significant challenges that limit its widespread adoption and effectiveness.

Hardware Constraints and Power Limitations

Edge devices typically have limited computational power, memory, and battery life compared to cloud servers. This constrains the complexity and size of AI models that can run effectively on edge hardware. Developers must carefully balance model accuracy with resource requirements, often accepting reduced performance to meet hardware limitations.

Model Accuracy and Capability Trade-offs

The optimization techniques used to make AI models suitable for edge deployment often result in reduced accuracy compared to their cloud-based counterparts. Smaller models with fewer parameters may struggle with complex tasks or edge cases that larger models handle effectively. This trade-off between efficiency and capability remains a significant challenge for Edge AI implementations.

Development and Deployment Complexity

Creating Edge AI applications requires specialized knowledge of both AI model optimization and embedded systems development. Developers must understand hardware constraints, power management, thermal considerations, and real-time processing requirements. This complexity increases development time and costs while requiring diverse technical expertise.

Security and Update Management

While Edge AI improves data privacy by keeping information local, it also creates new security challenges. Edge devices may be physically accessible to attackers, making them vulnerable to tampering or reverse engineering. Additionally, updating AI models on distributed edge devices is more complex than updating centralized cloud services, potentially leaving devices running outdated or vulnerable software.
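One common safeguard for distributed model updates is to check each downloaded model file against a digest published in a trusted manifest before loading it. The Python sketch below assumes such a manifest exists and supplies the expected SHA-256 hex string. Production systems typically go further and verify a cryptographic signature over the manifest itself, which is not shown here.

```python
import hashlib
import hmac

def verify_model_update(model_bytes: bytes, expected_sha256_hex: str) -> bool:
    """Return True only if the model file matches the expected SHA-256 digest."""
    actual = hashlib.sha256(model_bytes).hexdigest()
    # Constant-time comparison, a good habit for any security check.
    return hmac.compare_digest(actual, expected_sha256_hex.lower())

# Hypothetical update payload and manifest entry.
model_blob = b"...serialized model weights..."
manifest_digest = hashlib.sha256(model_blob).hexdigest()
print(verify_model_update(model_blob, manifest_digest))              # -> True
print(verify_model_update(model_blob + b"tamper", manifest_digest))  # -> False
```

A device would refuse to load (and ideally report) any update for which this check returns False.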

Limited Computational Resources

Despite advances in edge hardware, local devices cannot match the computational power available in cloud data centers. This limitation restricts the types of AI tasks that can be performed effectively at the edge, particularly those requiring large models or extensive computational resources.

The Technology Stack: Hardware and Software Components

Understanding the Edge AI technology stack helps clarify how these systems achieve their capabilities within resource constraints.

Edge AI Processors and Chips

The foundation of Edge AI lies in specialized processors designed for efficient AI computation. Traditional CPUs, while versatile, are not optimized for the parallel matrix operations that dominate AI workloads. GPUs provide better parallel processing capabilities but often consume too much power for edge applications.

Purpose-built AI chips address these limitations by optimizing for specific AI operations while minimizing power consumption. These chips include features like dedicated matrix multiplication units, optimized memory hierarchies, and specialized instruction sets for common AI operations. Companies like Qualcomm, Apple, Google, and Intel have developed AI chips specifically for edge applications, each with different strengths and target markets.

AI Model Optimization Techniques

Deploying AI models on edge devices requires sophisticated optimization techniques that maintain functionality while reducing computational and memory requirements.

Quantization reduces the precision of model parameters, typically from 32-bit floating-point numbers to 8-bit integers. This technique can reduce model size by up to 75% while maintaining acceptable accuracy for many applications.
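The sketch below illustrates one common scheme, affine (asymmetric) per-tensor int8 quantization, in plain Python. Each float is mapped to an 8-bit integer through a scale and a zero point. Storing 1 byte instead of 4 per weight is where the roughly 75% size reduction comes from. Real toolchains such as TensorFlow Lite add calibration data, per-channel scales, and integer kernels, all omitted here.

```python
def quantize_int8(values):
    """Affine per-tensor quantization of a list of floats to int8."""
    lo, hi = min(min(values), 0.0), max(max(values), 0.0)  # range must include 0.0
    scale = (hi - lo) / 255 or 1.0          # 256 int8 levels; guard all-zero input
    zero_point = round(-128 - lo / scale)   # integer code that represents 0.0
    q = [max(-128, min(127, round(v / scale) + zero_point)) for v in values]
    return q, scale, zero_point

def dequantize_int8(q, scale, zero_point):
    """Map int8 codes back to approximate float values."""
    return [(x - zero_point) * scale for x in q]

weights = [-1.0, -0.5, 0.0, 0.25, 1.0]
q, scale, zp = quantize_int8(weights)
restored = dequantize_int8(q, scale, zp)
print(q)  # int8 codes in [-128, 127]
print(max(abs(a - b) for a, b in zip(weights, restored)))  # at most about scale / 2
print(f"{len(weights) * 4} bytes as float32 vs {len(q)} bytes as int8")  # -> 20 bytes as float32 vs 5 bytes as int8
```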

Pruning removes unnecessary connections and neurons from neural networks, creating sparser models that require fewer computations. Advanced pruning techniques can eliminate 90% or more of model parameters while preserving most of the original accuracy.
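The simplest version of this idea is one-shot magnitude pruning: zero out the weights with the smallest absolute values. The plain-Python sketch below shows only that selection step. In practice pruning is usually applied gradually with fine-tuning between rounds, and zeroed weights only save computation when the runtime or hardware exploits sparsity.

```python
def magnitude_prune(weights, sparsity):
    """Return a copy of `weights` with the smallest-magnitude fraction set to zero."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    k = int(len(weights) * sparsity)  # number of weights to remove
    # Indices ordered by absolute value, smallest first.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:k]:
        pruned[i] = 0.0
    return pruned

w = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02, 0.4, -0.9, 0.07, 0.2]
print(magnitude_prune(w, 0.5))
# -> [0.8, 0.0, 0.3, 0.0, -0.6, 0.0, 0.4, -0.9, 0.0, 0.0]
```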

Knowledge distillation creates smaller “student” models that learn to mimic the behavior of larger “teacher” models. This approach often achieves better results than simply training small models from scratch.
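A widely used formulation of the distillation objective, popularized by Hinton and colleagues, blends a soft-target term (the KL divergence between teacher and student outputs, both softened by a temperature T) with ordinary cross-entropy on the true label. The Python sketch below computes that loss for a single example. The default temperature of 4 and weighting alpha of 0.5 are illustrative assumptions, and a real training loop would minimize this loss with gradient descent in a deep learning framework.

```python
import math

def softmax(logits, temperature=1.0):
    """Numerically stable softmax with temperature scaling."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, label, temperature=4.0, alpha=0.5):
    """Blend of soft-target KL(teacher || student) and hard-label cross-entropy."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    soft = sum(pt * math.log(pt / ps) for pt, ps in zip(p_teacher, p_student) if pt > 0)
    hard = -math.log(softmax(student_logits)[label])
    # T^2 keeps the soft-target gradients on a comparable scale as T changes.
    return alpha * temperature ** 2 * soft + (1 - alpha) * hard

teacher = [4.0, 1.0, 0.5]
print(distillation_loss([3.5, 1.2, 0.4], teacher, label=0))  # student close to teacher
print(distillation_loss([0.5, 3.0, 1.0], teacher, label=0))  # student far from teacher: larger loss
```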

Edge AI Frameworks and Development Tools

Software frameworks specifically designed for edge deployment provide the tools necessary to optimize, deploy, and manage AI models on resource-constrained devices. These frameworks handle the complexity of model optimization, hardware abstraction, and runtime optimization.

TensorFlow Lite, developed by Google, provides tools for deploying TensorFlow models on mobile and embedded devices. It includes model optimization tools, hardware acceleration support, and lightweight runtime libraries.

ONNX Runtime, backed by Microsoft, offers cross-platform support for deploying models from various AI frameworks. Its focus on performance optimization and broad hardware support makes it popular for edge applications.

OpenVINO, developed by Intel, specializes in optimizing models for Intel hardware, including CPUs, GPUs, and specialized AI accelerators. It provides comprehensive tools for model optimization and deployment across Intel’s processor ecosystem.

Edge AI vs. Cloud AI: A Detailed Comparison

Understanding the differences between Edge AI and Cloud AI helps organizations choose the appropriate approach for their specific requirements.

Performance and Latency

Cloud AI typically offers superior raw computational performance thanks to powerful server hardware and elastic, on-demand scaling. However, network latency can significantly degrade the user experience, particularly for real-time applications. Edge AI provides consistent, low-latency responses but with more limited computational capabilities.

Cost Considerations

Cloud AI involves ongoing operational costs for data transmission and cloud computing resources, which can become substantial for high-volume applications. Edge AI requires higher upfront hardware costs but lower ongoing operational expenses. The cost comparison depends heavily on usage patterns, data volumes, and application requirements.
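A simple break-even estimate makes this trade-off concrete. The sketch below assumes the cloud option has no upfront cost and that both monthly costs stay constant. All figures are hypothetical placeholders to be replaced with real quotes.

```python
def break_even_months(edge_upfront, edge_monthly, cloud_monthly):
    """Months after which cumulative edge cost stops exceeding cumulative cloud cost.

    Returns None when edge operating costs are not lower than cloud costs,
    in which case the upfront hardware spend is never recovered.
    """
    monthly_saving = cloud_monthly - edge_monthly
    if monthly_saving <= 0:
        return None
    return edge_upfront / monthly_saving

# Hypothetical figures for illustration only.
print(break_even_months(edge_upfront=12_000, edge_monthly=200, cloud_monthly=1_200))  # -> 12.0
print(break_even_months(edge_upfront=12_000, edge_monthly=900, cloud_monthly=800))    # -> None
```

With these assumed numbers the hardware pays for itself after a year; a smaller cloud bill, for example from lower data volumes, pushes the break-even point further out.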

Privacy and Compliance

Edge AI provides inherent privacy advantages by keeping data local, making it easier to comply with data protection regulations. Cloud AI requires careful consideration of data governance, third-party access policies, and international data transfer regulations.

Scalability and Maintenance

Cloud AI offers easier scalability, as additional computational resources can be allocated on demand. Scaling Edge AI requires deploying additional hardware devices, but it provides more predictable performance characteristics. Maintenance and updates are generally simpler in the cloud, where a single service is updated centrally, whereas edge deployments require coordinating updates across many distributed devices.

Industry Applications and Impact

The impact of Edge AI extends across virtually every industry, creating new possibilities and transforming existing processes.

Healthcare Revolution

Edge AI is revolutionizing healthcare by enabling real-time patient monitoring, diagnostic assistance, and treatment optimization. Wearable devices can continuously monitor vital signs and detect health anomalies without transmitting sensitive medical data to cloud servers. Medical imaging equipment uses Edge AI to assist radiologists in detecting cancers and other conditions, in some studies with accuracy comparable to that of human specialists.

Surgical applications include AI-assisted robotic surgery systems that provide real-time feedback and guidance to surgeons. These systems must operate with minimal latency to ensure patient safety, making Edge AI essential for their effectiveness.

Automotive and Transportation

The automotive industry’s shift toward autonomous vehicles depends heavily on Edge AI capabilities. Highly automated vehicles incorporate dozens of sensors and cameras that can generate terabytes of data per day. Processing this information locally enables immediate responses to changing road conditions while reducing the infrastructure requirements for vehicle connectivity.

Advanced driver assistance systems (ADAS) use Edge AI for features like automatic emergency braking, lane departure warnings, and adaptive cruise control. These safety-critical systems cannot rely on cloud connectivity, making edge processing essential for their reliability.

Manufacturing and Industry 4.0

Smart manufacturing leverages Edge AI for predictive maintenance, quality control, and process optimization. AI-enabled sensors can detect subtle changes in equipment vibration, temperature, or acoustic signatures that indicate impending failures. This early detection capability prevents costly equipment downtime and reduces maintenance costs.
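A minimal way to flag such subtle changes on-device is a rolling z-score: compare each new sensor reading with the mean and standard deviation of recent readings, and raise an alert when it deviates by more than a few standard deviations. The Python sketch below assumes a single scalar vibration reading per time step. The window size, warm-up length, and threshold of 3 are hypothetical tuning choices, and production systems often use richer models.

```python
from collections import deque
import statistics

class RollingZScoreDetector:
    """Flags readings that deviate strongly from a sliding window of recent values."""

    def __init__(self, window=20, threshold=3.0, min_samples=10):
        self.history = deque(maxlen=window)  # only the most recent readings are kept
        self.threshold = threshold
        self.min_samples = min_samples       # warm-up before any alerts are raised

    def update(self, reading):
        """Return True if `reading` is anomalous, then add it to the history."""
        anomalous = False
        if len(self.history) >= self.min_samples:
            mean = statistics.fmean(self.history)
            std = statistics.pstdev(self.history)
            if std > 0 and abs(reading - mean) / std > self.threshold:
                anomalous = True
        self.history.append(reading)
        return anomalous

# Hypothetical vibration amplitudes: a steady pattern followed by a spike.
readings = [1.0, 1.1, 0.9, 1.0, 1.05, 0.95] * 5 + [5.0]
detector = RollingZScoreDetector()
flags = [detector.update(r) for r in readings]
print([i for i, f in enumerate(flags) if f])  # -> [30]
```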

Computer vision systems inspect products for defects in real-time, ensuring quality standards while maintaining production speeds. These systems must process high-resolution images quickly, making Edge AI ideal for the task.

Smart Cities and Urban Planning

Urban environments increasingly rely on Edge AI for traffic optimization, public safety, and resource management. Intelligent traffic management systems adjust signal timing based on real-time traffic flow, reducing congestion and emissions. Smart parking systems guide drivers to available spaces while optimizing parking facility utilization.

Public safety applications include AI-enabled surveillance systems that can detect unusual activities, crowd formations, or potential security threats. These systems balance public safety benefits with privacy concerns by processing video locally rather than transmitting it to centralized servers.

Future Trends and Developments

The Edge AI landscape continues evolving rapidly, with several key trends shaping its future development.

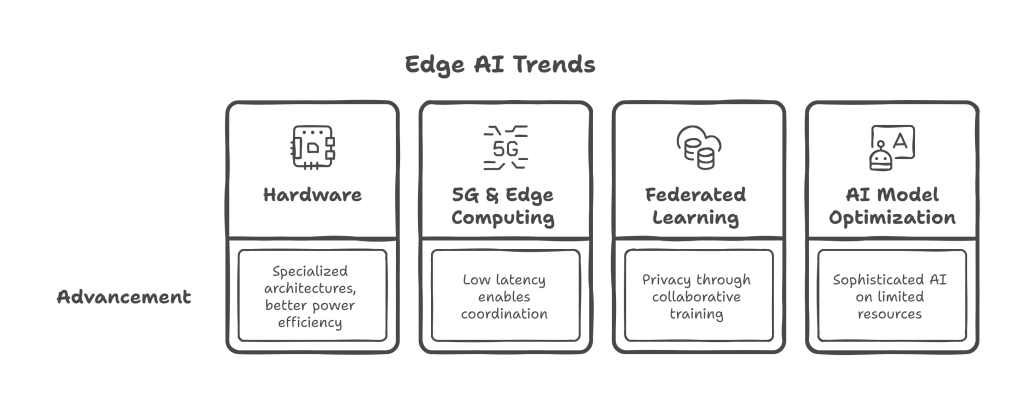

Hardware Advancement and Miniaturization

AI chip manufacturers are continuously improving the performance and efficiency of edge processors. Future developments include more specialized AI architectures, improved power efficiency, and better integration with existing processor designs. Neuromorphic computing, which mimics the structure and function of biological neural networks, promises even greater efficiency for AI workloads.

5G and Edge Computing Integration

The rollout of 5G networks creates new possibilities for hybrid edge-cloud AI architectures. 5G’s low latency and high bandwidth enable more sophisticated coordination between edge devices and cloud resources, allowing for dynamic workload distribution based on current conditions and requirements.
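This kind of dynamic workload distribution can be sketched as a simple per-request policy: estimate the cloud path as network round trip plus upload time plus server compute, then pick a site that meets the latency deadline, preferring the device when both do. The function below is a hypothetical illustration; its inputs (latency estimates, payload size, uplink rate) would in practice come from profiling and live network measurements.

```python
def choose_execution_site(local_ms, cloud_compute_ms, rtt_ms, payload_kb, uplink_mbps, deadline_ms):
    """Return ("edge" or "cloud", estimated latency in ms) for one inference request."""
    upload_ms = payload_kb * 8 / uplink_mbps  # kilobytes -> kilobits; kbit / (Mbit/s) = ms
    cloud_ms = rtt_ms + upload_ms + cloud_compute_ms
    if local_ms <= deadline_ms:
        return "edge", local_ms               # keep data on-device whenever it is fast enough
    if cloud_ms <= deadline_ms:
        return "cloud", cloud_ms
    # Neither meets the deadline: fall back to whichever is faster.
    return ("edge", local_ms) if local_ms <= cloud_ms else ("cloud", cloud_ms)

# Hypothetical numbers: a small on-device model vs. a large model only the cloud can run fast.
print(choose_execution_site(30, 20, 15, 200, 100, deadline_ms=100))   # -> ('edge', 30)
print(choose_execution_site(400, 20, 15, 200, 100, deadline_ms=100))  # -> ('cloud', 51.0)
```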

Federated Learning and Distributed AI

Federated learning enables multiple edge devices to collaboratively train AI models without sharing raw data. This approach combines the privacy benefits of Edge AI with the improved accuracy that comes from training on larger, more diverse datasets. Devices can learn from collective experiences while maintaining data privacy and security.
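The server-side aggregation step of Federated Averaging (FedAvg), a widely used federated learning algorithm, fits in a few lines: each device trains locally and sends only its updated weights and local sample count, and the server computes a sample-weighted average. The Python sketch below omits local training, communication, and protections such as secure aggregation; weights are flattened into plain lists for simplicity.

```python
def federated_average(client_updates):
    """FedAvg aggregation: average client weight vectors, weighted by sample count.

    `client_updates` is a list of (weights, num_local_samples) pairs.
    """
    total = sum(n for _, n in client_updates)
    dim = len(client_updates[0][0])
    averaged = [0.0] * dim
    for weights, n in client_updates:
        for i, w in enumerate(weights):
            averaged[i] += w * n / total
    return averaged

# Two hypothetical devices; the one with more local data has more influence.
clients = [([1.0, 2.0], 100), ([3.0, 4.0], 300)]
print(federated_average(clients))  # -> [2.5, 3.5]
```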

AI Model Compression and Optimization

Continued advances in model compression techniques will enable more sophisticated AI capabilities on resource-constrained edge devices. New architectures designed specifically for edge deployment, such as MobileNets and EfficientNets, demonstrate that smaller models can achieve impressive performance when designed with edge constraints in mind.
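MobileNets rely heavily on depthwise separable convolutions, which split a standard convolution into a per-channel (depthwise) filter followed by a 1x1 (pointwise) convolution. Counting weights shows why this helps. The sketch below ignores bias terms and uses a hypothetical 3x3 layer with 256 input and 256 output channels.

```python
def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a k x k convolution mapping c_in channels to c_out channels."""
    return k * k * c_in * c_out

def depthwise_separable_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a k x k depthwise conv followed by a 1x1 pointwise conv."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1x1 conv mixes channels
    return depthwise + pointwise

std = standard_conv_params(3, 256, 256)
sep = depthwise_separable_params(3, 256, 256)
print(std, sep, round(std / sep, 1))  # -> 589824 67840 8.7
```

For this layer the separable version needs roughly 8.7 times fewer weights, with a similar reduction in multiply-accumulate operations.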

Getting Started with Edge AI

Organizations and developers interested in exploring Edge AI have several entry points and resources available.

Development Platforms and Tools

Major technology companies provide comprehensive development platforms for Edge AI applications. These platforms typically include model optimization tools, hardware abstraction layers, and deployment utilities that simplify the development process.

NVIDIA’s Jetson platform provides complete hardware and software solutions for Edge AI development, including development boards, software frameworks, and extensive documentation. Google’s Coral platform offers similar capabilities with a focus on TensorFlow Lite deployment.

Learning Resources and Community

The Edge AI community provides extensive educational resources, including online courses, tutorials, and open-source projects. Organizations like the Edge AI and Vision Alliance offer industry insights, technical resources, and networking opportunities for professionals working in the field.

Pilot Project Considerations

Organizations should start with pilot projects that demonstrate clear value while managing complexity and risk. Ideal initial projects have well-defined success criteria, manageable scope, and clear paths to larger-scale deployment. Common starting points include predictive maintenance for critical equipment, quality control in manufacturing processes, or customer experience enhancement in retail environments.

Conclusion: The Future is at the Edge

Edge AI represents a fundamental shift in how artificial intelligence integrates with our daily lives and business operations. By bringing computational intelligence closer to where data is generated and decisions are made, Edge AI enables faster, more private, and more reliable AI experiences.

The technology’s advantages in latency reduction, privacy protection, and operational independence make it essential for many emerging applications. From autonomous vehicles requiring split-second decision-making to medical devices processing sensitive patient data, Edge AI enables capabilities that would be impractical with cloud-only approaches.

However, Edge AI is not a complete replacement for cloud-based AI systems. The optimal approach often involves hybrid architectures that leverage the strengths of both edge and cloud processing. Simple, time-sensitive tasks benefit from edge processing, while complex analysis and model training may still require cloud resources.

As Edge AI technology continues maturing, we can expect to see more sophisticated capabilities, improved efficiency, and broader adoption across industries. The convergence of better hardware, more efficient algorithms, and improved development tools will make Edge AI accessible to a wider range of applications and organizations.

For businesses and developers, now is an excellent time to begin exploring Edge AI opportunities. Starting with pilot projects and gradually building expertise will position organizations to capitalize on the growing Edge AI ecosystem. The future of artificial intelligence is not just in the cloud—it’s at the edge, bringing intelligence closer to where it’s needed most.

The journey toward ubiquitous Edge AI is just beginning, but its potential to transform how we interact with technology and solve complex problems is already becoming clear. As we continue to generate more data and demand more responsive AI experiences, Edge AI will play an increasingly central role in the technology landscape, making our devices smarter, our systems more efficient, and our digital experiences more personal and private.

Discover more from SkillWisor
