The landscape of artificial intelligence has evolved rapidly, giving developers and organizations several ways to optimize model performance for specific use cases. Two of the most practical and widely used approaches are fine-tuning and prompt engineering. Understanding when to use each strategy, and how to combine them effectively, has become crucial for getting the best performance from an AI system while managing cost and complexity.

Understanding the Fundamentals

Prompt engineering involves crafting specific instructions, examples, and context within the input to guide a pre-trained model toward desired outputs. This approach leverages the existing knowledge and capabilities of foundation models without modifying their underlying parameters. The technique relies on careful construction of prompts that include task descriptions, formatting requirements, examples, and contextual information.
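To make that structure concrete, here is a minimal Python sketch that assembles the parts named above (task description, formatting requirements, context, and examples) into a single prompt string. The `PromptSpec` and `build_prompt` names and the section labels are hypothetical; many real systems use a provider's chat-message format rather than one flat string.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for the parts of a prompt."""
    task: str
    output_format: str
    context: str = ""
    examples: list[tuple[str, str]] = field(default_factory=list)


def build_prompt(spec: PromptSpec, user_input: str) -> str:
    """Join task, format, optional context, examples, and the new input into one prompt."""
    sections = [f"Task: {spec.task}", f"Output format: {spec.output_format}"]
    if spec.context:
        sections.append(f"Context: {spec.context}")
    # Each worked example shows the model the expected input-output mapping.
    for i, (example_in, example_out) in enumerate(spec.examples, start=1):
        sections.append(f"Example {i} input: {example_in}\nExample {i} output: {example_out}")
    # End with the real input and an open "Output:" slot for the model to fill.
    sections.append(f"Input: {user_input}\nOutput:")
    return "\n\n".join(sections)
```

Changing the model's behavior here means editing `PromptSpec` fields; the model's parameters are never touched.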

Fine-tuning, by contrast, continues training a pre-existing model on a specialized dataset, adapting its parameters to specific tasks or domains. The result is a customized version of the original model that has learned patterns and behaviors specific to the target application. Fine-tuning ranges from lightweight parameter-efficient methods, which train only a small number of added or selected parameters, to full fine-tuning, which updates every weight in the model.
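One widely used parameter-efficient method is LoRA (low-rank adaptation): a pre-trained weight matrix W of shape d × k stays frozen, and training learns a low-rank update ΔW = BA, with B of shape d × r and A of shape r × k. The short calculation below compares trainable parameters for a single matrix under full fine-tuning and under LoRA. The layer size and rank are illustrative assumptions, not values from any particular model.

```python
def full_finetune_params(d: int, k: int) -> int:
    """Trainable parameters when every entry of a d x k weight matrix is updated."""
    return d * k


def lora_params(d: int, k: int, r: int) -> int:
    """Trainable parameters for a rank-r update B @ A, with B: d x r and A: r x k."""
    return d * r + r * k


if __name__ == "__main__":
    d = k = 4096  # hypothetical layer dimensions
    r = 8         # hypothetical LoRA rank
    full = full_finetune_params(d, k)
    lora = lora_params(d, k, r)
    print(f"full: {full:,}  LoRA: {lora:,}  ratio: {lora / full:.2%}")
    # prints: full: 16,777,216  LoRA: 65,536  ratio: 0.39%
```

The saving grows with layer size because LoRA's cost is linear in d + k while full fine-tuning's is the product d × k.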

When to Choose Prompt Engineering

Prompt engineering serves as the ideal starting point for most AI implementation projects due to its accessibility and rapid iteration capabilities. This approach proves particularly effective when working with well-established tasks that fall within the general knowledge domain of foundation models.

Immediate deployment scenarios benefit significantly from prompt engineering. When organizations need to quickly prototype solutions or test concepts, crafting effective prompts allows for near-instantaneous results without the time investment required for model training. This rapid feedback loop enables teams to validate assumptions and refine approaches before committing to more resource-intensive methods.

Limited data availability makes prompt engineering especially attractive. Many organizations lack enough training data for fine-tuning but can still achieve strong results with well-crafted prompts that include relevant examples and context. Few-shot prompting, in which a handful of worked input-output examples are placed directly in the prompt, can often match or exceed the performance of models fine-tuned on small datasets.

Dynamic requirements also favor prompt engineering approaches. When task specifications frequently change or when handling diverse use cases within a single system, the flexibility of modifying prompts proves invaluable. This adaptability allows organizations to respond quickly to changing business needs without retraining models.
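As a rough illustration of that flexibility, the sketch below keeps one prompt template per use case in a plain dictionary, so changing a task's behavior means editing a string at runtime. The registry, the use-case names, and the template fields are hypothetical; the code relies only on Python's standard `string.Template`.

```python
from string import Template

# Hypothetical registry: each use case maps to a prompt template.
# Changing behavior means editing a string, not retraining a model.
PROMPT_TEMPLATES: dict[str, Template] = {
    "summarize": Template("Summarize the following text in $max_sentences sentences:\n$text"),
    "translate": Template("Translate the following text into $language:\n$text"),
}


def render(use_case: str, **fields: str) -> str:
    """Look up the template for a use case and fill in its fields.

    Raises KeyError for an unknown use case or a missing field.
    """
    return PROMPT_TEMPLATES[use_case].substitute(**fields)


def update_template(use_case: str, new_template: str) -> None:
    """Change or add a use case at runtime; no model weights are involved."""
    PROMPT_TEMPLATES[use_case] = Template(new_template)
```

For example, `update_template("summarize", "TL;DR in $max_sentences sentences:\n$text")` changes every later summary request immediately.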

Cost-conscious implementations often find prompt engineering more economical, particularly for lower-volume applications. The absence of training costs and infrastructure requirements makes this approach accessible to organizations with limited AI budgets.

When Fine-tuning Becomes Essential

Fine-tuning emerges as the superior choice when prompt engineering reaches its limitations or when specific performance requirements demand model specialization. Several scenarios clearly indicate the need for fine-tuning approaches.

Domain-specific expertise requirements often necessitate fine-tuning. In highly specialized fields such as medical diagnosis, legal document analysis, or technical manufacturing processes, the nuanced understanding required often exceeds what prompt engineering alone can achieve. Fine-tuning allows models to internalize domain-specific terminology, patterns, and reasoning approaches.

Consistent formatting and behavior demands make fine-tuning attractive for production systems. While prompts can specify desired output formats, fine-tuned models demonstrate more reliable adherence to structural requirements and behavioral guidelines. This consistency proves crucial for systems requiring predictable outputs for downstream processing.
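Whichever approach is used, downstream systems usually check outputs before consuming them. The minimal sketch below assumes a hypothetical invoice-extraction task that must return JSON with three typed fields. `validate_output` rejects malformed responses, and `compliance_rate` reports the fraction that pass, which is one simple way to compare how reliably a prompted model and a fine-tuned model follow the required structure.

```python
import json

# Hypothetical schema: required keys and the Python types their values must have.
REQUIRED_FIELDS = {"invoice_id": str, "total": (int, float), "currency": str}


def validate_output(raw: str) -> tuple[bool, str]:
    """Check that a model response is a JSON object with the required fields and types."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return False, f"not valid JSON: {exc.msg}"
    if not isinstance(data, dict):
        return False, "top-level value is not an object"
    for key, expected_type in REQUIRED_FIELDS.items():
        if key not in data:
            return False, f"missing field: {key}"
        value = data[key]
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, expected_type):
            return False, f"wrong type for field: {key}"
    return True, "ok"


def compliance_rate(responses: list[str]) -> float:
    """Fraction of responses that pass validation (0.0 for an empty list)."""
    if not responses:
        return 0.0
    return sum(validate_output(r)[0] for r in responses) / len(responses)
```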

Performance optimization at scale often calls for fine-tuning. When prompt-engineering approaches plateau on the relevant metrics, fine-tuning can push past that ceiling because it changes the model's weights rather than merely guiding its behavior through instructions.

Proprietary data integration represents another compelling use case for fine-tuning. Organizations with unique datasets, internal processes, or specialized knowledge bases can leverage fine-tuning to create models that understand and utilize this proprietary information effectively.

Cost optimization for high-volume applications may favor fine-tuning despite its higher upfront cost. Because a fine-tuned model has already learned the instructions and examples that would otherwise be repeated in every request, its prompts can be much shorter. Across thousands or millions of requests, that reduction in input tokens can yield significant operational savings compared with lengthy, detailed prompts.
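The trade-off is easy to estimate with back-of-the-envelope arithmetic. The sketch below computes per-request cost from token counts and per-1,000-token prices, then the request volume at which per-request savings repay a fixed fine-tuning cost. Input and output prices are separate parameters, and the fine-tuned model may be given different prices, since some hosted services charge more per token for fine-tuned models. All numbers used with it here are made up for illustration.

```python
def per_request_cost(prompt_tokens: int, output_tokens: int,
                     input_price_per_1k: float, output_price_per_1k: float) -> float:
    """Cost of one request, given prices per 1,000 input and output tokens."""
    return (prompt_tokens * input_price_per_1k + output_tokens * output_price_per_1k) / 1000


def break_even_requests(prompted_cost: float, tuned_cost: float, tuned_fixed_cost: float) -> float:
    """Number of requests after which the fine-tuned model's fixed cost is paid back."""
    saving = prompted_cost - tuned_cost
    if saving <= 0:
        # A fine-tuned model that is not cheaper per request never pays back its fixed cost.
        return float("inf")
    return tuned_fixed_cost / saving
```

For example, a 1,500-token prompt with 200 output tokens at hypothetical prices of 0.001 and 0.002 per 1,000 tokens costs about 0.0019 per request. A fine-tuned model needing only a 200-token prompt, at doubled hypothetical prices of 0.002 and 0.004, costs about 0.0012. With a hypothetical fixed cost of 500, `break_even_requests(0.0019, 0.0012, 500)` comes to roughly 714,000 requests; below that volume, the prompted approach stays cheaper.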

Hybrid Strategies: Maximizing Both Approaches

The most sophisticated AI implementations often combine both techniques in complementary ways, leveraging the strengths of each approach while mitigating their individual limitations.

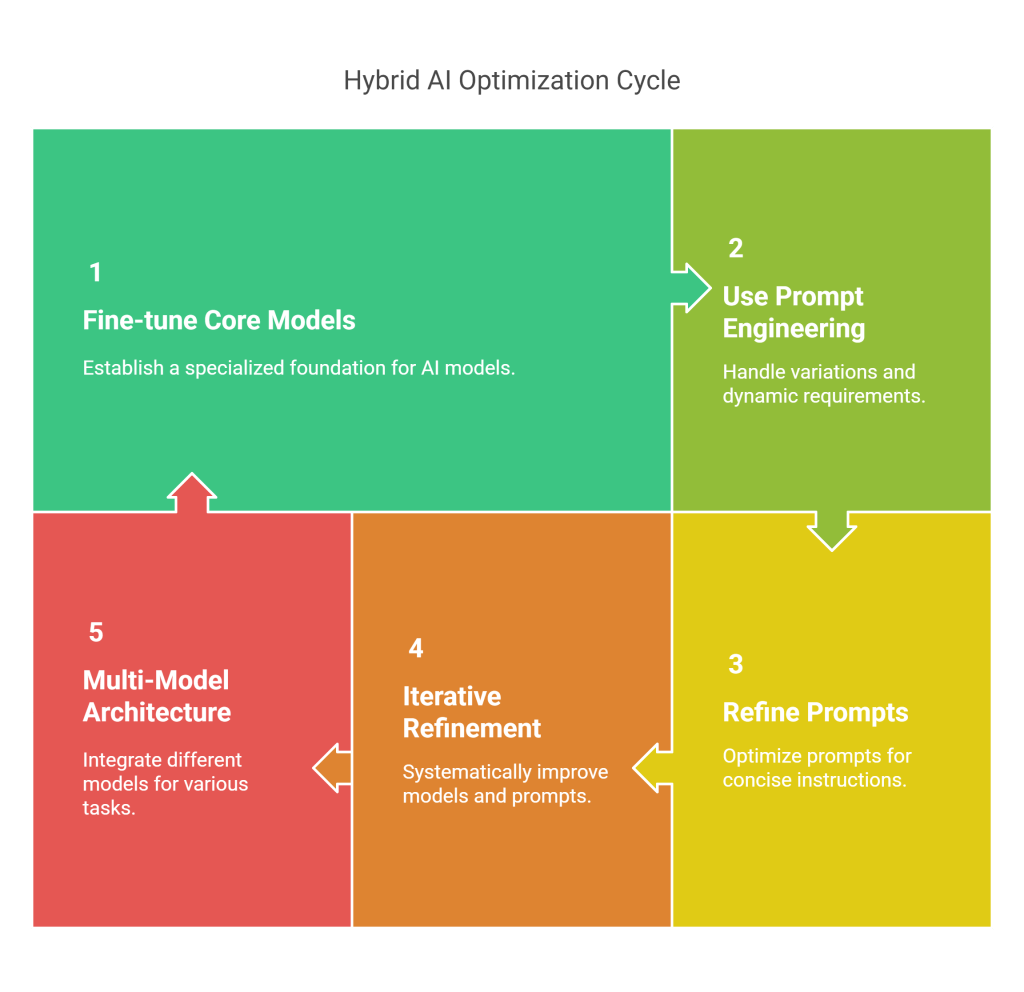

Foundation plus specialization represents a common hybrid pattern. Organizations begin by fine-tuning models on core domain knowledge and fundamental task patterns, then use prompt engineering to handle variations, edge cases, and dynamic requirements. This approach provides a solid specialized foundation while maintaining flexibility for specific scenarios.

Hierarchical prompt strategies can work effectively with fine-tuned models. Rather than requiring extensive context in every prompt, fine-tuned models can respond to more concise instructions, allowing prompts to focus on specific variations or requirements for individual requests.

Iterative refinement cycles combine both approaches systematically. Teams start with prompt engineering to establish baseline performance and identify common patterns, then use insights from this phase to design targeted fine-tuning datasets. The resulting fine-tuned models can then be further optimized through refined prompt strategies.
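A minimal sketch of the hand-off between the two phases, assuming the prompting phase logged each request as a dict with hypothetical `input`, `output`, and `approved` keys. Reviewer-approved pairs are written as JSON Lines in a chat-style `messages` layout that resembles the format some hosted fine-tuning services accept; the exact schema should be checked against your provider's documentation. The long few-shot instructions are deliberately left out of the training examples, since the goal is for the model to learn that behavior.

```python
import json


def to_finetune_jsonl(records: list[dict], system_prompt: str) -> str:
    """Convert approved (input, output) pairs from the prompting phase into JSONL text.

    Only a short system prompt is kept; the few-shot examples used during
    prompting are not copied into each training example.
    """
    lines = []
    for rec in records:
        if not rec.get("approved"):
            continue  # keep only outputs a reviewer accepted
        example = {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": rec["input"]},
                {"role": "assistant", "content": rec["output"]},
            ]
        }
        lines.append(json.dumps(example, ensure_ascii=False))
    return "\n".join(lines)
```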

Multi-model architectures leverage different optimization strategies for different components. A system might employ a fine-tuned model for core task execution while using prompt-engineered models for preprocessing, validation, or output formatting.
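The composition itself can stay simple. In this sketch each stage is an ordinary function, so any of them could wrap a fine-tuned model or a prompt-engineered call. The stage names and the fallback taken when validation fails are one possible design, not a prescribed architecture.

```python
from typing import Callable

# Each stage maps text to text; in a real system these would wrap model calls.
Stage = Callable[[str], str]


def run_pipeline(text: str, preprocess: Stage, core: Stage,
                 validate: Callable[[str], bool], fallback: Stage) -> str:
    """Preprocess the input, run the core model, and fall back if validation fails."""
    cleaned = preprocess(text)    # e.g. a prompt-engineered cleanup step
    result = core(cleaned)        # e.g. a fine-tuned model for the main task
    if validate(result):          # e.g. a prompt-engineered or rule-based checker
        return result
    return fallback(cleaned)      # e.g. route to a different model or human review
```

With plain string functions as stand-ins, `run_pipeline("  hi  ", str.strip, str.upper, str.isupper, lambda s: "needs review")` returns `"HI"`.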

Implementation Considerations and Best Practices

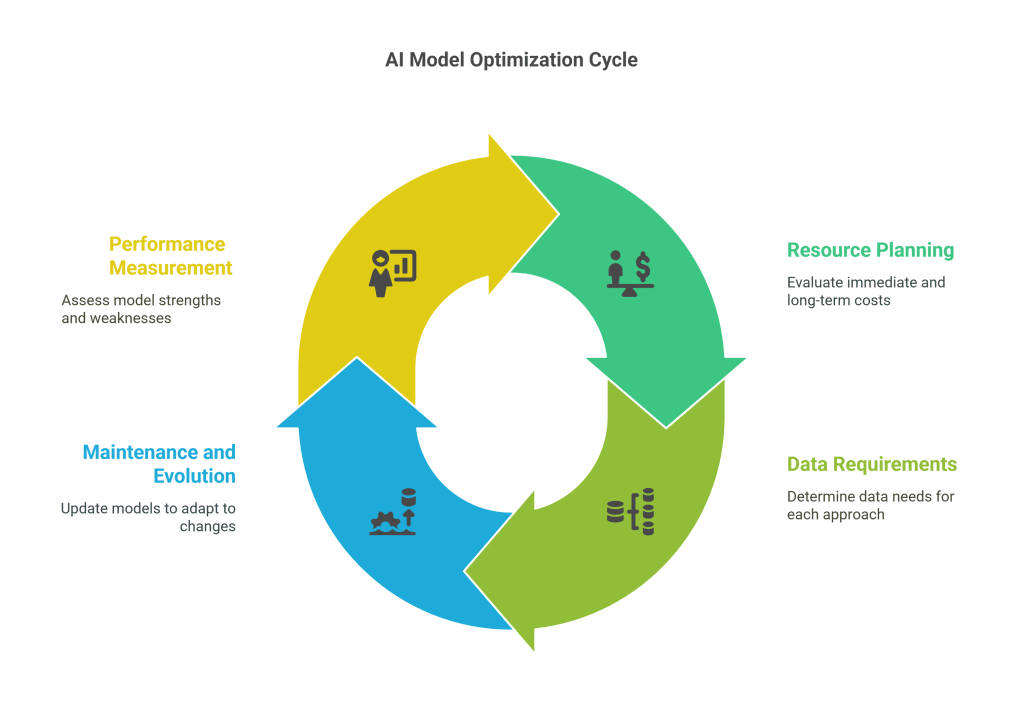

Resource planning requires careful consideration of both immediate and long-term costs. Prompt engineering demands ongoing prompt development and potentially higher per-request costs, while fine-tuning requires upfront investment in data preparation, training infrastructure, and validation processes.

Data requirements differ significantly between approaches. Prompt engineering can work with minimal examples but requires careful curation of representative cases for inclusion in prompts. Fine-tuning demands larger, well-labeled datasets but can then operate independently of these examples during inference.
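Curating representative cases can be partly automated. The sketch below assumes a pool of labeled `(text, label)` pairs and picks up to `per_label` examples for each label, so a rare class is not crowded out by a common one, then interleaves them so no class forms one long block in the prompt. The function name and the interleaving choice are illustrative assumptions; screening for diversity and edge cases usually still needs human judgment.

```python
from collections import defaultdict


def select_examples(pool: list[tuple[str, str]], per_label: int) -> list[tuple[str, str]]:
    """Pick up to `per_label` (text, label) pairs per label, keeping pool order."""
    chosen: dict[str, list[tuple[str, str]]] = defaultdict(list)
    for text, label in pool:
        if len(chosen[label]) < per_label:
            chosen[label].append((text, label))
    # Interleave: first example of each label, then the second of each, and so on.
    result = []
    for i in range(per_label):
        for examples in chosen.values():
            if i < len(examples):
                result.append(examples[i])
    return result
```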

Maintenance and evolution patterns vary between strategies. Prompt engineering allows for rapid updates and modifications but requires ongoing optimization as use cases evolve. Fine-tuned models provide more stable performance but require retraining for significant updates.

Performance measurement should account for the different strengths of each approach. Prompt engineering might excel in flexibility and edge case handling, while fine-tuning typically provides superior consistency and efficiency for core use cases.
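One way to capture those differences is to score each approach separately on core cases and on edge cases instead of reporting a single number. A minimal sketch, assuming evaluation results are logged as dicts with hypothetical `approach`, `slice`, and `correct` keys:

```python
from collections import defaultdict


def accuracy_by_slice(results: list[dict]) -> dict[str, dict[str, float]]:
    """Return accuracy for each approach on each slice, e.g. 'core' vs 'edge'."""
    totals = defaultdict(lambda: defaultdict(int))
    hits = defaultdict(lambda: defaultdict(int))
    for r in results:
        totals[r["approach"]][r["slice"]] += 1
        hits[r["approach"]][r["slice"]] += int(r["correct"])
    return {
        approach: {s: hits[approach][s] / n for s, n in slices.items()}
        for approach, slices in totals.items()
    }
```

Reporting per-slice numbers makes it visible when, say, a fine-tuned model wins on core cases while a prompted model handles edge cases better.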

Strategic Decision Framework

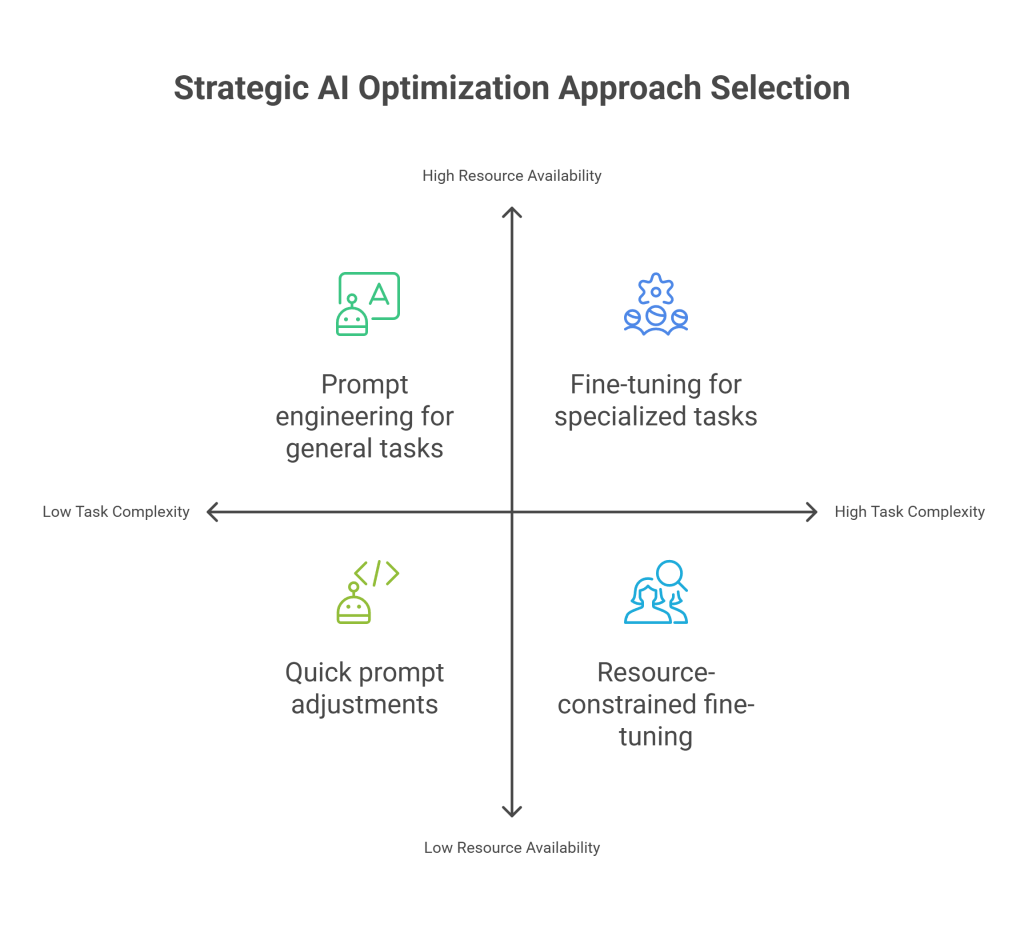

Organizations should evaluate several key factors when choosing between these approaches. Task complexity and specificity serve as primary indicators, with highly specialized or complex tasks favoring fine-tuning, while general tasks with variable requirements benefit from prompt engineering flexibility.

Volume and consistency of use cases influence the cost-benefit analysis. High-volume, consistent applications justify fine-tuning investments, while varied, lower-volume scenarios favor prompt engineering approaches.

Development timeline and resources affect strategy selection. Organizations with immediate needs and limited ML expertise often start with prompt engineering, while those with longer timelines and technical capabilities can pursue fine-tuning strategies.

Performance requirements and accuracy thresholds help determine the necessary level of model specialization. Applications requiring extremely high accuracy or specific behavioral patterns typically benefit from fine-tuning, while those with more flexible requirements can succeed with prompt engineering.
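The four factors can be written down as a rough checklist. The sketch below is a heuristic, not a calibrated model: the field names, the rule that missing ML capacity or time points to prompt engineering, and the two-of-three threshold are all illustrative assumptions to adapt to your own constraints.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    """Hypothetical yes/no answers to the four factors above."""
    highly_specialized: bool         # task complexity and specificity
    high_volume_and_stable: bool     # volume and consistency of use cases
    has_ml_capacity_and_time: bool   # development timeline and resources
    strict_accuracy_or_format: bool  # performance requirements


def recommend(case: UseCase) -> str:
    """Suggest a starting strategy; thresholds are illustrative, not calibrated."""
    if not case.has_ml_capacity_and_time:
        return "prompt engineering"
    # Count how many of the remaining factors point toward fine-tuning.
    score = sum([case.highly_specialized, case.high_volume_and_stable,
                 case.strict_accuracy_or_format])
    if score >= 2:
        return "fine-tuning, with prompts for variations"
    if score == 1:
        return "prompt engineering first, revisit fine-tuning"
    return "prompt engineering"
```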

The future of AI optimization lies not in choosing between fine-tuning and prompt engineering, but in understanding how to leverage both techniques strategically. As foundation models continue to improve and tooling becomes more accessible, the most successful AI implementations will likely employ sophisticated combinations of both approaches, adapted to their specific requirements and constraints.

Organizations that develop expertise in both domains, understanding their respective strengths and limitations, will be best positioned to build robust, efficient, and adaptable AI systems that can evolve with changing requirements and advancing technology.

Discover more from SkillWisor
