The rise of Generative AI (Gen AI) is not just a technological revolution; it’s a paradigm shift in how businesses innovate, create, and operate. From crafting human-like text with Large Language Models (LLMs) to generating photorealistic images from simple prompts, Gen AI is unlocking unprecedented capabilities. However, transforming this potential into tangible business value is a complex endeavor. It requires more than just brilliant data scientists and machine learning engineers. It demands a new breed of leader: The Generative AI Project Manager.

This role is a critical evolution of traditional project management, blending classic discipline with a deep, nuanced understanding of the unique challenges and opportunities of AI development. A Gen AI PM is the central nervous system of a project, responsible for steering it from a nascent idea to a robust, ethical, and value-generating deployed solution. They are the translators between business stakeholders and technical teams, the guardians of quality and ethics, and the architects of a process that can navigate the inherent uncertainty of AI development.

This comprehensive guide delves into the micro-level details of managing a Gen AI project. We will dissect the entire project lifecycle, from the initial spark of an idea to post-deployment monitoring and learning. We will explore the intricate processes of quality planning, bug management, and cost tracking, providing actionable strategies and tool recommendations. Finally, we will outline the core responsibilities and best practices that define a world-class Gen AI Project Manager. This is not a high-level overview; it is a detailed, practical manual for those tasked with leading the charge in this exciting new frontier.

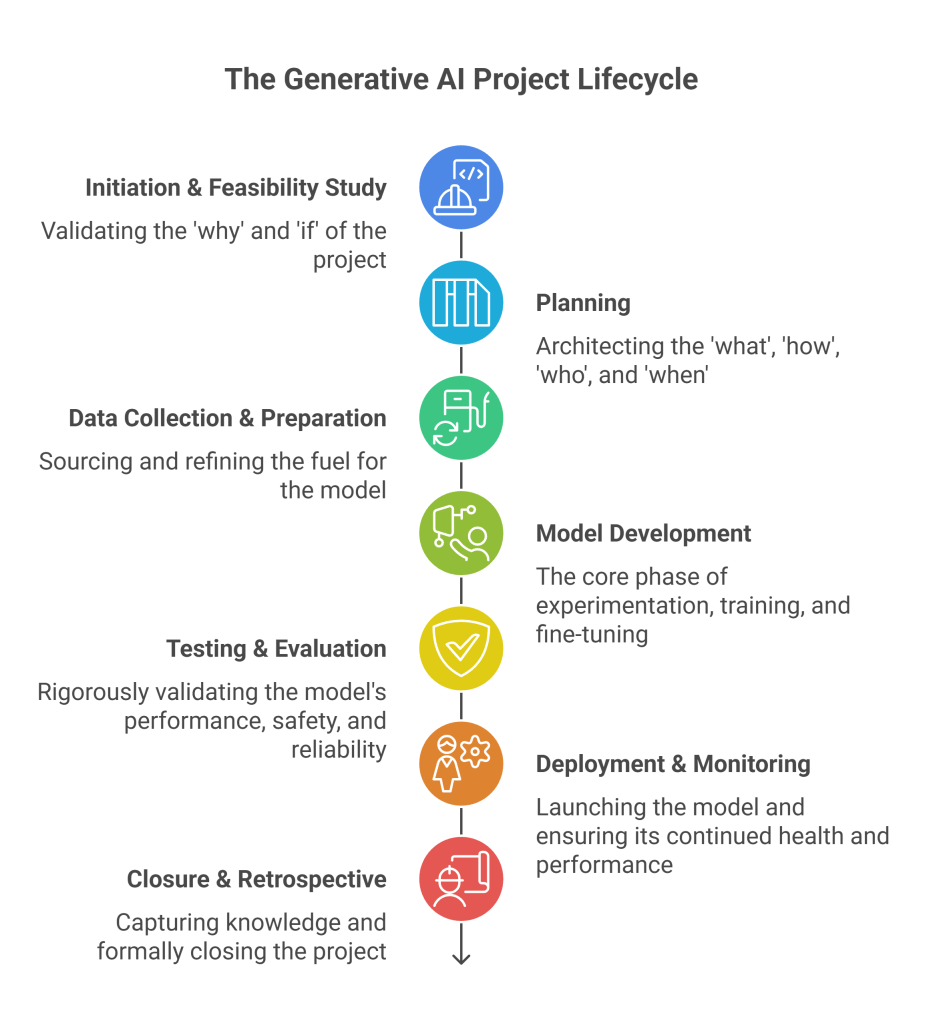

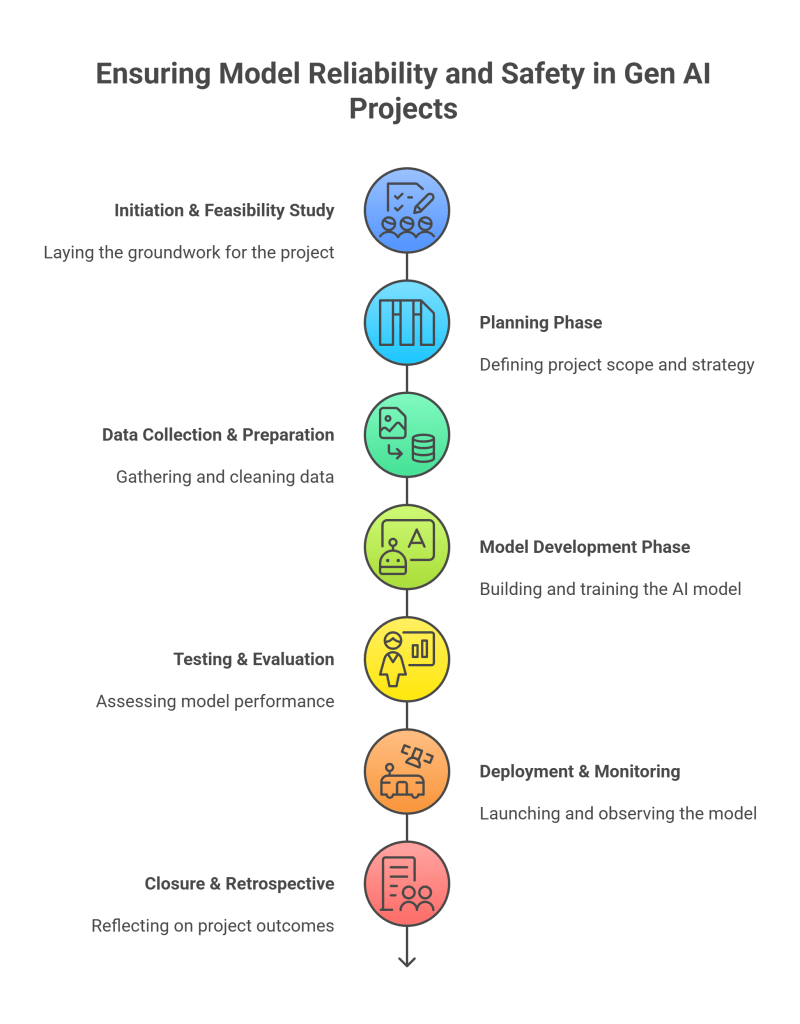

1. The Generative AI Project Lifecycle: A Detailed Overview

Generative AI projects, while sharing foundational principles with traditional software development, possess a unique lifecycle shaped by data dependency, model experimentation, and ethical considerations. A successful Gen AI Project Manager must master each phase to guide the project effectively, ensuring alignment across diverse, cross-functional teams and delivering a solution that is not only technically sound but also reliable, fair, and secure.

The lifecycle is an iterative journey, not a strictly linear path. Learnings from the testing phase might necessitate a return to data preparation, and monitoring in production can trigger new development cycles. The PM’s role is to manage this dynamic flow with agility and foresight.

Phases in the Generative AI Lifecycle:

- Initiation & Feasibility Study: Validating the ‘why’ and ‘if’ of the project.

- Planning: Architecting the ‘what’, ‘how’, ‘who’, and ‘when’.

- Data Collection & Preparation: Sourcing and refining the fuel for the model.

- Model Development: The core phase of experimentation, training, and fine-tuning.

- Testing & Evaluation: Rigorously validating the model’s performance, safety, and reliability.

- Deployment & Monitoring: Launching the model and ensuring its continued health and performance.

- Closure & Retrospective: Capturing knowledge and formally closing the project.

Let’s explore each of these stages at a micro-level.

2. Project Management Stages in Micro-Detail

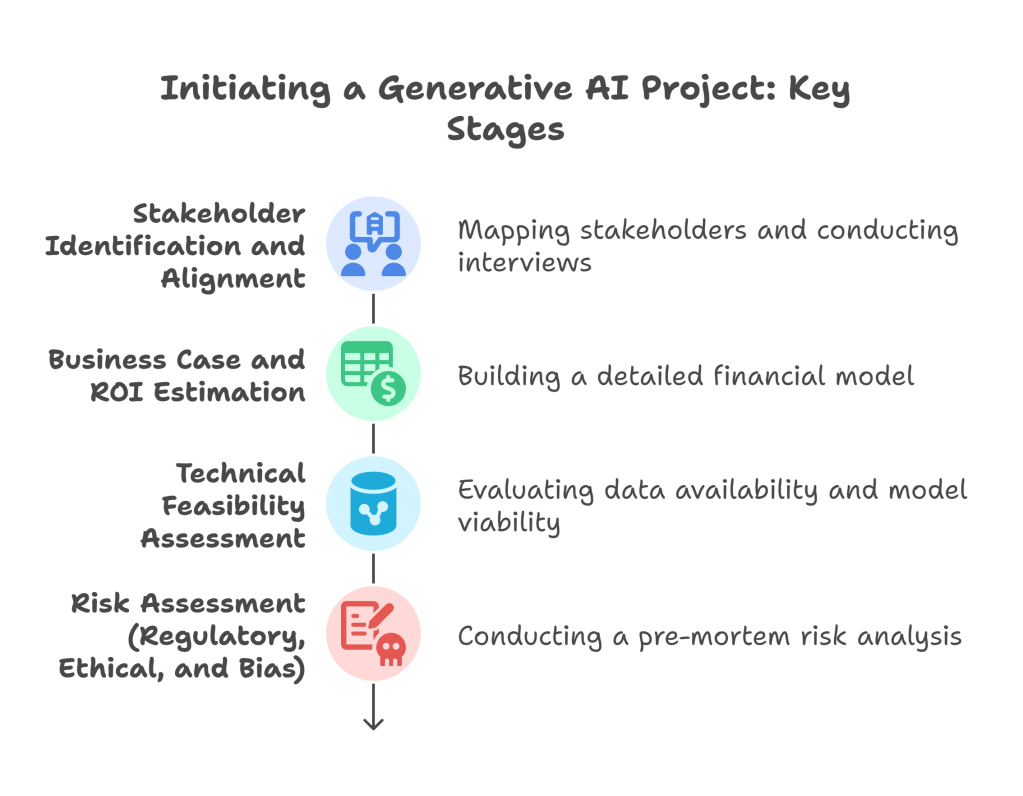

📅 2.1 Initiation & Feasibility Study

Primary Goal: To rigorously validate the business need and confirm the technical viability of the proposed Gen AI solution before committing significant resources. This phase is about asking the hard questions and preventing the pursuit of projects that are technically infeasible, ethically problematic, or lack a clear business justification.

Micro-Level Activities & PM Role:

- Stakeholder Identification and Alignment:

- Activity: The PM will create a stakeholder map, identifying not just the project sponsor but also legal, compliance, marketing, finance, and end-user representatives. This involves conducting initial interviews to understand their expectations, concerns, and definitions of success.

- PM Role: Facilitate a kickoff workshop to align all stakeholders on the high-level vision. The PM must be adept at translating a business problem (e.g., “reduce customer support resolution time”) into a potential Gen AI solution (e.g., “an internal-facing chatbot that drafts support ticket responses”).

- Business Case and ROI Estimation:

- Activity: Work with business analysts and finance to build a detailed Return on Investment (ROI) model. This isn’t a back-of-the-napkin calculation. It involves estimating potential revenue gains, cost savings (e.g., reduced man-hours for content creation), and operational efficiencies. Crucially, it must also factor in the estimated costs of development, infrastructure, data acquisition, and ongoing monitoring.

- PM Role: The PM owns the creation of the business case document. They must challenge assumptions, ensure the financial model is robust, and present it clearly to leadership. For example, if building a Gen AI image tool, the ROI calculation would include the cost of stock photo licenses saved versus the cost of GPU training hours and annotator salaries.

- Technical Feasibility Assessment:

- Activity: This is a deep dive with the technical lead and data scientists. Key questions include:

- Data Availability: Do we have access to sufficient, high-quality, and relevant data to train or fine-tune a model? If not, what is the cost and timeline for acquiring or generating it? Is synthetic data a viable option?

- Model Viability: Is there an existing foundation model (e.g., GPT-4, Llama 3, Stable Diffusion) that can be fine-tuned for our specific use case? Or does this require building a model from scratch (a much higher-risk endeavor)? What are the preliminary infrastructure requirements (e.g., number of A100/H100 GPUs)?

- PM Role: The PM facilitates these discussions, documents the findings, and ensures the technical team provides clear, data-driven answers. They are not expected to be the deepest technical expert but must be fluent enough to understand the implications of the choices being made.

- Risk Assessment (Regulatory, Ethical, and Bias):

- Activity: This is a critical, AI-specific step. The PM must lead a pre-mortem risk analysis workshop with legal, ethical, and technical experts.

- Regulatory: Does the proposed application fall under existing or emerging regulations like the EU AI Act? What are the data privacy implications (GDPR, CCPA)?

- Ethical: Could the model be used to generate harmful, biased, or misleading content? What are the potential societal impacts?

- Bias: What are the potential sources of bias in our likely data sources? How could this manifest in the model’s output, and what harm could it cause to specific user groups?

- PM Role: The PM is responsible for creating and maintaining the initial Risk Register. This is a living document that logs each identified risk, its potential impact, its probability, and a preliminary mitigation strategy.

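The Python sketch below shows the basic arithmetic behind the ROI model for the image-tool example above. Every figure is a hypothetical placeholder, and the `RoiInputs` fields are illustrative. The formula ROI = (total benefit - total cost) / total cost over a fixed horizon is only one convention. Finance teams may prefer NPV or a payback period.

```python
from dataclasses import dataclass


@dataclass
class RoiInputs:
    # Hypothetical annual figures, all in the same currency.
    license_savings: float       # e.g., stock photo licenses no longer purchased
    labor_savings: float         # e.g., designer hours saved
    gpu_training_cost: float     # one-off fine-tuning compute (assumed one-off)
    annotation_cost: float       # annotator salaries (assumed one-off)
    infra_and_monitoring: float  # recurring inference and monitoring spend


def simple_roi(inputs: RoiInputs, years: int = 1) -> float:
    """ROI as a fraction: (benefit - cost) / cost over `years`.

    One-off costs are counted once; savings and infrastructure recur each year.
    """
    benefit = (inputs.license_savings + inputs.labor_savings) * years
    cost = (inputs.gpu_training_cost + inputs.annotation_cost
            + inputs.infra_and_monitoring * years)
    return (benefit - cost) / cost


example = RoiInputs(license_savings=120_000, labor_savings=80_000,
                    gpu_training_cost=40_000, annotation_cost=60_000,
                    infra_and_monitoring=50_000)
print(f"1-year ROI: {simple_roi(example):.1%}")           # 1-year ROI: 33.3%
print(f"3-year ROI: {simple_roi(example, years=3):.1%}")  # 3-year ROI: 140.0%
```

The sketch treats training and annotation as one-off costs and treats savings and infrastructure as recurring. That is an assumption, but it shows how the evaluation horizon can flip a go/no-go decision.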

Key Deliverables:

- Project Charter: A formal document, signed off by the sponsor, that provides a high-level overview of the project. It includes the business case, goals, scope summary, key stakeholders, and high-level budget and timeline estimates.

- Initial Risk Register: The first version of the risk log, detailing the key feasibility, ethical, and technical risks identified.
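A common way to keep the Risk Register actionable is to score each risk as probability × impact and review the highest scores first. The sketch below makes several illustrative assumptions:

- Probability and impact use 1-5 scales.
- An escalation threshold of 12 is hypothetical.
- The three entries are examples drawn from the risk categories above, not a complete register.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    risk_id: str
    description: str
    category: str     # e.g., "Regulatory", "Ethical", "Bias", "Technical"
    probability: int  # assumed scale: 1 (rare) .. 5 (almost certain)
    impact: int       # assumed scale: 1 (negligible) .. 5 (severe)
    mitigation: str

    @property
    def score(self) -> int:
        # Probability x impact scoring, range 1..25.
        return self.probability * self.impact


REVIEW_THRESHOLD = 12  # hypothetical cut-off for escalating to the sponsor

register = [
    Risk("R1", "Training data under-represents some customer groups", "Bias", 4, 4,
         "Audit data distribution; add targeted samples"),
    Risk("R2", "Use case may be classed as high-risk under the EU AI Act", "Regulatory", 2, 5,
         "Early legal review of intended use"),
    Risk("R3", "Insufficient GPU quota for fine-tuning", "Technical", 3, 3,
         "Reserve capacity or fall back to a smaller model"),
]

for risk in sorted(register, key=lambda r: r.score, reverse=True):
    flag = "ESCALATE" if risk.score >= REVIEW_THRESHOLD else "monitor"
    print(f"{risk.risk_id} [{risk.category}] score={risk.score:2d} {flag}: {risk.description}")
```

A single score can hide the difference between a likely nuisance and an unlikely catastrophe. R2 above is an example: its impact is 5, yet it scores below the threshold. One option is to also flag any risk with maximum impact, whatever its score.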

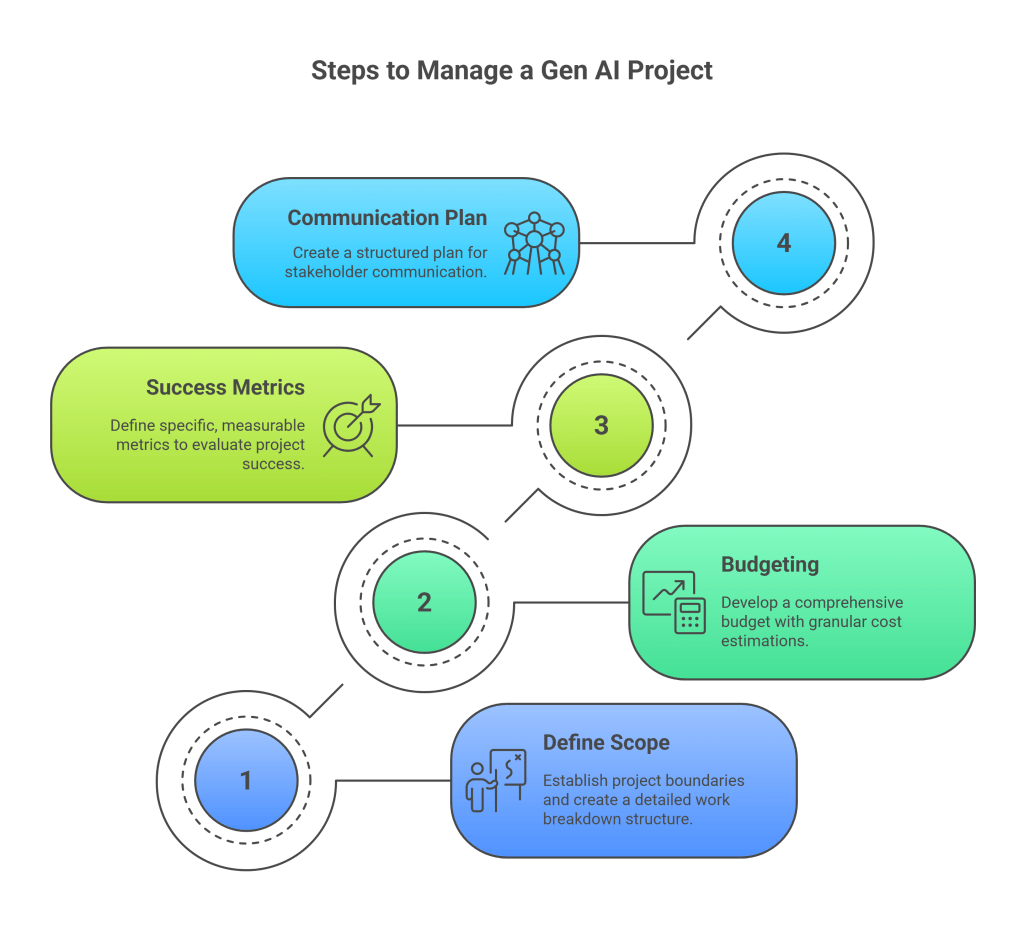

📅 2.2 Planning Phase

Primary Goal: To translate the validated idea from the initiation phase into a detailed, actionable roadmap. This phase is about meticulous planning to create a shared understanding of what will be built, how it will be measured, and how the team will operate.

Micro-Level Activities & PM Role:

- Define Scope and Create Work Breakdown Structure (WBS):

- Activity: The PM works with the team to break down the high-level project into smaller, manageable work packages. For a Gen AI project, the WBS will have unique components.

- Example WBS for an LLM Chatbot:

- 1.0 Data Management

- 1.1 Data Sourcing (Public & Internal Docs)

- 1.2 Data Cleaning & Pre-processing

- 1.3 Annotation & Labeling

- 1.4 Data Versioning Setup (DVC)

- 2.0 Model Development

- 2.1 Foundation Model Selection & Evaluation

- 2.2 Fine-tuning (LoRA/Full)

- 2.3 RLHF (Reinforcement Learning from Human Feedback) Setup

- 2.4 Experiment Tracking Setup (MLflow)

- 3.0 Evaluation & Testing

- 3.1 Red Teaming for Safety

- 3.2 Performance Testing (BLEU/ROUGE)

- 3.3 User Acceptance Testing (UAT)

- 4.0 Deployment

- 4.1 CI/CD Pipeline for Models

- 4.2 Monitoring Dashboard Setup

- PM Role: The PM owns the WBS and uses it to create the project schedule. They ensure every conceivable task is captured, from data annotation to legal reviews.

- Budgeting and Detailed Cost Estimation:

- Activity: Building on the initial estimate, the PM now creates a line-item budget. This requires granular detail:

- Compute Costs: Estimate GPU hours needed for fine-tuning and inference. (e.g., “Fine-tuning Llama-3-70B on our dataset will require 4 x H100 GPUs for 120 hours on AWS P5 instances at $X/hour”).

- Data Costs: Cost of licensing datasets or salaries for a team of 5 annotators for 3 months.

- API Costs: If using a third-party model, estimate token usage (e.g., “Average support ticket is 500 tokens, average response is 300 tokens. At 10,000 tickets/month, we project 8 million tokens/month through the OpenAI API at Y cost”).

- Human Costs: Salaries for the project team (ML Engineers, Data Scientists, PM, etc.).

- PM Role: The PM creates and manages the Cost Plan, securing budget approval and setting up tracking mechanisms.

- Define Success and Quality Metrics:

- Activity: This goes beyond “it works.” The team must define specific, measurable, and agreed-upon metrics.

- Performance Metrics: ROUGE for text summarization (BLEU for translation-style tasks), Perplexity for language modeling, FID for image generation.

- Operational Metrics: Latency (e.g., “p95 response time < 500ms”), Throughput (requests per second), Cost per inference.

- Quality & Safety Metrics: Hallucination Rate (e.g., “< 5% of outputs contain factually incorrect information, as verified by human evaluators”), Toxicity Score (e.g., “Average score < 0.1 on Perspective API for 10,000 generated samples”), PII Leakage Rate (e.g., “0 instances of PII detected in output”).

- PM Role: The PM documents these in the Quality Management Plan, ensuring every stakeholder understands and agrees on how success will be measured.

- Stakeholder Communication Plan:

- Activity: Define the rhythm of communication.

- PM Role: Create a communication matrix specifying who gets what information, when, and in what format (e.g., “Weekly email update to all stakeholders, bi-weekly steering committee meeting with slide deck, daily stand-ups for the core team”).
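The compute and API estimates from the budgeting step are simple products of quantities and rates, reproduced in Python below.

- The per-GPU-hour rate and the per-million-token prices are hypothetical placeholders standing in for the $X and Y above. Real prices vary by provider, region, and model.
- Input (prompt) and output (completion) tokens are tracked separately, because API providers commonly price them differently.

```python
def gpu_cost(num_gpus: int, hours: float, rate_per_gpu_hour: float) -> float:
    """Training cost, with instance pricing normalized to a per-GPU-hour rate."""
    return num_gpus * hours * rate_per_gpu_hour


def monthly_api_tokens(requests: int, input_tokens: int, output_tokens: int) -> tuple[int, int]:
    """(input tokens, output tokens) consumed per month."""
    return requests * input_tokens, requests * output_tokens


def monthly_api_cost(tokens_in: int, tokens_out: int,
                     price_in_per_m: float, price_out_per_m: float) -> float:
    """Cost when input and output tokens are priced separately per million tokens."""
    return tokens_in / 1_000_000 * price_in_per_m + tokens_out / 1_000_000 * price_out_per_m


# Support-ticket example from the text: 500-token tickets, 300-token replies, 10,000 tickets/month.
tokens_in, tokens_out = monthly_api_tokens(10_000, 500, 300)
print(tokens_in + tokens_out)  # 8000000, matching the 8 million tokens/month projection

# Hypothetical prices and rates (placeholders, not real list prices).
print(f"API: ${monthly_api_cost(tokens_in, tokens_out, 2.50, 10.00):,.2f}/month")
print(f"Fine-tuning: ${gpu_cost(4, 120, 4.00):,.2f}")
```

Splitting input from output tokens matters when responses are long. With the placeholder prices above, the 3 million output tokens cost more than the 5 million input tokens.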

Key Deliverables:

- Detailed Project Plan: An integrated document containing the WBS, schedule, resource assignments, and dependencies.

- Cost Management Plan: The detailed budget and plan for tracking expenses.

- Quality Management Plan: The defined metrics for success, quality, and safety.

- Communication Plan: The formal plan for stakeholder engagement.

📅 2.3 Data Collection & Preparation

Primary Goal: To acquire, clean, and structure the high-quality data that will serve as the foundation for the Gen AI model. This phase is often the most time-consuming and critical; the principle of “garbage in, garbage out” is amplified in Gen AI.

Micro-Level Activities & PM Role:

- Data Sourcing:

- Activity: The data science team executes the plan to acquire data from various sources: scraping public websites, accessing internal databases, licensing third-party datasets, or generating synthetic data.

- PM Role: The PM tracks the progress of each data source. They are the primary liaison for legal and procurement teams when licensing data, ensuring contracts are reviewed and signed promptly. They manage risks, such as a public data source becoming unavailable or an internal dataset being of lower quality than expected.

- Annotation Guidelines and Process:

- Activity: If human annotation is required (e.g., for RLHF or supervised fine-tuning), the team must create extremely detailed annotation guidelines. For a sentiment analysis task, this means defining what constitutes “positive,” “negative,” and “neutral” with numerous examples and edge cases.

- PM Role: The PM oversees the creation of these guidelines, ensuring clarity and consistency. They may manage the annotation team (whether internal or vendor-based), track their throughput and quality (e.g., using inter-annotator agreement scores), and facilitate calibration sessions to ensure all annotators are aligned.

- Pre-processing Pipelines (ETL):

- Activity: ML engineers build automated pipelines to extract, transform, and load (ETL) the data. This includes tasks like cleaning HTML tags, normalizing text, removing personally identifiable information (PII), and tokenization (breaking text down into the units the model understands).

- PM Role: The PM tracks the development of these pipelines as a distinct workstream. They ensure that dependencies are managed (e.g., the cleaning script must be ready before tokenization can begin) and that the output is validated by the data science team.

- Data Versioning:

- Activity: The team implements a tool like DVC (Data Version Control). This is crucial for reproducibility. It allows the team to tie a specific version of a dataset to a specific version of the model that was trained on it.

- PM Role: The PM ensures that adopting and consistently using the versioning tool is part of the team’s workflow, treating it with the same importance as code version control (like Git).
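The cleaning steps described under the pre-processing pipeline (HTML stripping, text normalization, PII removal) can be chained as plain functions, as in this standard-library sketch.

- The regular expressions catch only simple email and phone-number patterns and will also over-match things like dates. They are an illustrative assumption, not a substitute for a dedicated PII-detection tool.
- Tokenization is left to the chosen model's own tokenizer.

```python
import html
import re
import unicodedata

TAG_RE = re.compile(r"<[^>]+>")
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
# Very rough phone pattern: optional "+", then 8+ digits/separators ending in a digit.
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{6,}\d")
SPACE_RE = re.compile(r"\s+")


def strip_html(text: str) -> str:
    # Replace tags with spaces, then decode entities such as &amp; and &nbsp;.
    return html.unescape(TAG_RE.sub(" ", text))


def normalize(text: str) -> str:
    # NFKC folds compatibility characters (e.g., non-breaking spaces),
    # then runs of whitespace collapse to a single space.
    text = unicodedata.normalize("NFKC", text)
    return SPACE_RE.sub(" ", text).strip()


def redact_pii(text: str) -> str:
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


def clean_record(raw: str) -> str:
    # Strip markup first so tags cannot split PII and hide it from the regexes.
    return redact_pii(normalize(strip_html(raw)))


raw = "<p>Contact&nbsp;me at <b>jane.doe@example.com</b> or +1 (555) 010-9999.</p>"
print(clean_record(raw))  # Contact me at [EMAIL] or [PHONE].
```

Keeping each step a separate function makes it easy to track them as distinct tasks and to validate each output independently.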

📅 2.4 Model Development Phase

Primary Goal: To systematically experiment with, train, and fine-tune models to achieve the target performance metrics defined in the planning phase. This is an iterative, research-heavy phase.

Micro-Level Activities & PM Role:

- Model Selection and Baseline Establishment:

- Activity: The team evaluates several candidate foundation models (e.g., comparing GPT-4o, Claude 3 Opus, and Llama 3 for a text generation task). They run a series of small-scale experiments to establish a baseline performance on a validation dataset.

- PM Role: The PM tracks these experiments. They ensure the team is using a structured tool like MLflow or Weights & Biases to log the parameters, code version, data version, and results of every single experiment. This prevents wasted effort and ensures all learnings are captured.

- Fine-tuning Strategy and Execution:

- Activity: Based on baseline results, the team decides on a fine-tuning strategy. This could be full fine-tuning (computationally expensive) or a more efficient method like LoRA (Low-Rank Adaptation). They then launch longer training jobs on powerful GPU infrastructure. This phase also includes setting up complex feedback loops like RLHF, which involves training a separate reward model based on human preferences.

- PM Role: This is where budget management becomes critical. The PM actively monitors GPU usage, comparing actual consumption against the plan. They work with the tech lead to prioritize training runs and manage the queue. They must also manage risks like overfitting (where the model memorizes the training data but fails to generalize) or the emergence of catastrophic bias.

- Infrastructure Planning and Management:

- Activity: The DevOps/MLOps team provisions and configures the necessary cloud infrastructure (e.g., Kubernetes clusters with GPU nodes, high-speed storage for datasets).

- PM Role: The PM is the coordinator. They ensure the infrastructure is ready when the modeling team needs it, preventing delays. They track infrastructure costs as a separate line item in the budget.
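Simple arithmetic shows why LoRA is so much cheaper than full fine-tuning. LoRA freezes a pretrained weight matrix W of shape d × k. It trains only two small matrices, B (d × r) and A (r × k), whose product is the update. The trainable parameters per adapted matrix therefore drop from d·k to r·(d + k). The sketch assumes a hypothetical 8192 × 8192 projection; real layer shapes and ranks depend on the model and the team's choices.

```python
def full_params(d: int, k: int) -> int:
    # Full fine-tuning updates every entry of the d x k weight matrix.
    return d * k


def lora_params(d: int, k: int, r: int) -> int:
    # LoRA trains B (d x r) and A (r x k); W itself stays frozen.
    return r * (d + k)


d = k = 8192  # hypothetical projection size
for r in (8, 16, 64):
    ratio = lora_params(d, k, r) / full_params(d, k)
    print(f"rank {r:>2}: {lora_params(d, k, r):>9,} trainable vs {full_params(d, k):,} ({ratio:.2%})")
```

Fewer trainable parameters also mean smaller optimizer state and much smaller saved adapters. That accounts for a large share of the GPU-memory savings. The forward pass still runs through the full frozen model, so inference cost is not reduced by LoRA alone.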

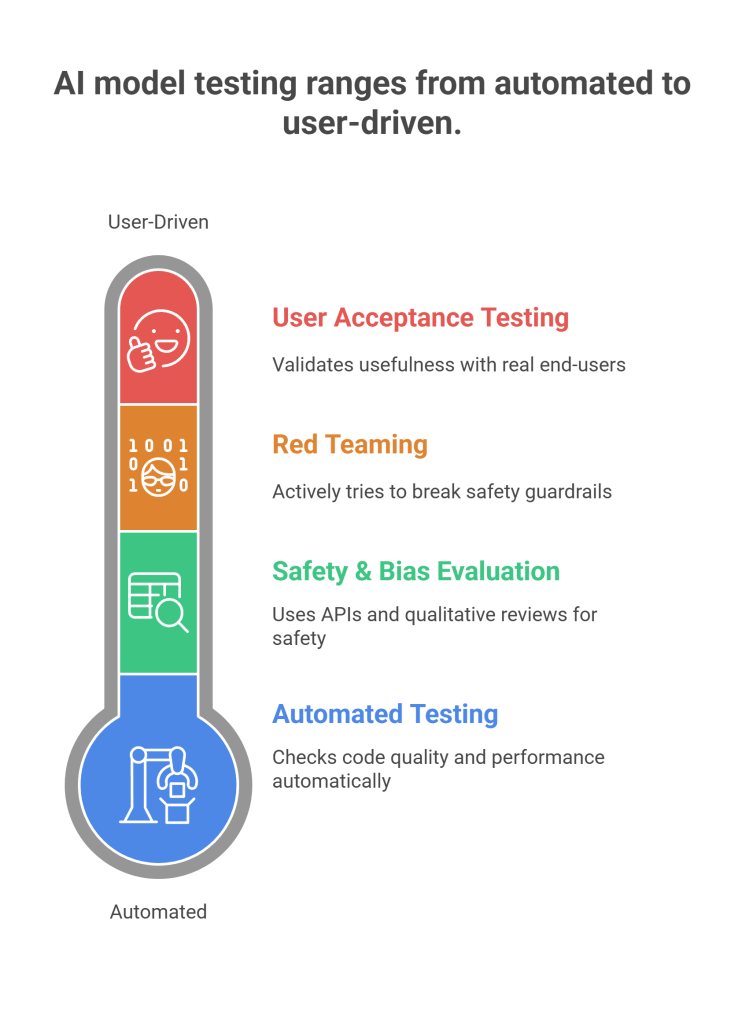

📅 2.5 Testing & Evaluation

Primary Goal: To move beyond standard performance metrics and rigorously validate the model’s quality, safety, and reliability from a user-centric and adversarial perspective.

Micro-Level Activities & PM Role:

- Automated and Manual Testing:

- Activity:

- Automated: The team creates a suite of tests that run automatically, checking for things like code quality, deterministic outputs (where applicable), and performance against benchmark datasets (e.g., calculating BLEU/ROUGE scores).

- Manual: A dedicated QA team or the project team members manually interact with the model, testing its capabilities against a predefined test plan. They check for logical inconsistencies, factual errors (hallucinations), and poor conversational flow.

- PM Role: The PM coordinates the QA effort, manages the bug tracking system (e.g., Jira), and triages incoming bug reports. They ensure there is clear traceability between test cases, requirements, and bug reports.

- Red Teaming for Prompt Injection and Safety:

- Activity: This is a specialized form of adversarial testing. The “Red Team” actively tries to break the model’s safety guardrails. They use techniques like prompt injection to make the model ignore its instructions, reveal its underlying prompt, or generate harmful, biased, or forbidden content.

- PM Role: The PM schedules and oversees the red teaming exercises. They ensure the findings are documented with extreme detail and are immediately reviewed by the development team to patch vulnerabilities.

- Safety & Bias Evaluation:

- Activity: The team runs the model’s outputs through specialized APIs like the Perspective API to get quantitative scores for toxicity, profanity, and other harmful attributes. They also conduct qualitative reviews to identify subtle biases that automated tools might miss.

- PM Role: The PM tracks these safety metrics on a dashboard, comparing them against the targets set in the Quality Plan. They are responsible for reporting these findings to stakeholders, especially legal and compliance.

- User Acceptance Testing (UAT):

- Activity: A group of real end-users interacts with the model in a controlled environment. Their goal is to validate if the model is truly useful and meets their needs for the intended business process.

- PM Role: The PM plans and facilitates the UAT sessions, collects structured feedback (e.g., through surveys and interviews), and ensures this feedback is prioritized and actioned by the development team.
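The automated benchmark checks in the testing step compute overlap scores such as ROUGE-1 (unigram overlap), implemented from scratch below. This simplified sketch uses lowercase whitespace tokenization and no stemming, so its scores will differ slightly from reference implementations such as the `rouge-score` Python package. For comparable numbers across runs, the team would normally use an established library.

```python
from collections import Counter


def rouge1(candidate: str, reference: str) -> dict[str, float]:
    """ROUGE-1 precision, recall and F1 from clipped unigram overlap."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    # A shared word counts at most min(count in candidate, count in reference) times.
    overlap = sum((cand & ref).values())
    precision = overlap / max(sum(cand.values()), 1)
    recall = overlap / max(sum(ref.values()), 1)
    f1 = 0.0 if overlap == 0 else 2 * precision * recall / (precision + recall)
    return {"precision": precision, "recall": recall, "f1": f1}


print(rouge1("the cat sat on the mat", "the cat is on the mat"))
```

A regression test could assert that mean ROUGE-1 F1 on a fixed benchmark set stays above the target in the Quality Management Plan. Overlap scores reward similar wording and cannot detect factual errors. They complement the manual hallucination checks but do not replace them.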

📅 2.6 Deployment & Monitoring

Primary Goal: To successfully launch the model into a production environment and ensure its ongoing performance, reliability, and safety through robust monitoring.

Micro-Level Activities & PM Role:

- CI/CD for Models (MLOps):

- Activity: The MLOps team sets up a Continuous Integration/Continuous Deployment (CI/CD) pipeline specifically for the model. This automates the process of testing, packaging, and deploying a new model version to production.

- PM Role: The PM coordinates with the DevOps and MLOps teams to ensure this pipeline is robust and reliable. They oversee the creation of a Model Registry (using tools like MLflow or Weights & Biases) which acts as a central repository for all production-ready model versions.

- Real-time Monitoring Setup:

- Activity: The team deploys a suite of monitoring tools and dashboards.

- Operational: Tracking latency, error rates (e.g., in Sentry), and infrastructure health (CPU/GPU usage).

- Model Performance: Tracking data drift (how much the live data differs from the training data) and concept drift (whether the underlying patterns the model learned are still valid).

- Cost & Usage: Using tracing tools like LangSmith to monitor token consumption and cost per request in real time.

- Safety: Continuously monitoring for spikes in toxicity or the generation of harmful content.

- PM Role: The PM ensures this monitoring infrastructure is in place before launch. They are responsible for tracking Service Level Agreements (SLAs) and setting up an Incident Response Plan.

- Incident Response Protocol:

- Activity: The team defines a clear protocol for what to do when things go wrong. What happens if the model starts generating highly toxic content? Who gets alerted? What are the steps to roll back to a previous, safe version?

- PM Role: The PM owns this protocol. They conduct drills and ensure everyone on the on-call rotation knows their role.
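Data drift can be quantified in several ways. One widely used option for a single numeric feature is the Population Stability Index (PSI), which compares the binned distribution of training data with live traffic. The sketch below rests on several assumptions:

- It applies PSI to prompt length in tokens, a hypothetical choice of feature.
- The bin edges are illustrative.
- A small epsilon guards against empty bins.
- The 0.1 and 0.25 alert thresholds are common rules of thumb, not fixed standards.

```python
import math
from bisect import bisect_right


def proportions(values: list[float], edges: list[float]) -> list[float]:
    """Fraction of values in each bin defined by sorted interior edges."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    return [c / len(values) for c in counts]


def psi(expected: list[float], actual: list[float], edges: list[float], eps: float = 1e-4) -> float:
    """Population Stability Index: sum over bins of (a - e) * ln(a / e)."""
    total = 0.0
    for e, a in zip(proportions(expected, edges), proportions(actual, edges)):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) for empty bins
        total += (a - e) * math.log(a / e)
    return total


def drift_status(value: float) -> str:
    if value < 0.1:
        return "stable"
    return "moderate drift" if value < 0.25 else "significant drift"


# Hypothetical prompt lengths (tokens): training sample vs. recent live traffic.
training = [120, 150, 180, 200, 220, 240, 260, 300, 320, 400]
live = [380, 420, 450, 500, 520, 610, 640, 700, 720, 800]
edges = [150, 250, 350, 500]
score = psi(training, live, edges)
print(f"PSI={score:.3f} -> {drift_status(score)}")
```

In production, the bins would usually come from training-data quantiles. The check would run on a schedule, with significant-drift alerts routed into the same channel the Incident Response Protocol uses.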

📅 2.7 Closure & Retrospective

Primary Goal: To formally close the project, document and share all lessons learned, and celebrate the team’s achievements.

Micro-Level Activities & PM Role:

- Final Reporting:

- Activity: The PM prepares the final project report, comparing the final outcomes against the initial plan. This includes the final budget vs. actual spend, final schedule vs. baseline, and a summary of how the project performed against its key performance indicators (KPIs).

- PM Role: The PM presents this report to the steering committee and key stakeholders, securing their formal sign-off to close the project.

- Conduct Retrospective:

- Activity: The PM facilitates a blameless retrospective session with the entire project team. The focus is on what went well, what didn’t go well, and what could be improved in the next project.

- PM Role: The PM creates a safe space for honest feedback and ensures the key takeaways are documented and converted into actionable process improvements for the organization.

- Archive Artifacts:

- Activity: The team archives all project artifacts in a central repository. This includes the final version of the code, the datasets (with DVC pointers), the trained model weights, all documentation, and logs.

- PM Role: The PM ensures this archival process is completed thoroughly, creating a valuable knowledge base for future projects.

3. Bug Management & Quality Planning in Detail

In Gen AI, “bugs” are not just code errors. They are complex, often subtle failures in model behavior. A robust quality plan is essential for managing these risks.

📝 Defining Quality Metrics

- Hallucination Rate: The percentage of generated outputs that contain factually incorrect, nonsensical, or unprovable information.

- Measurement: This is notoriously difficult to automate. It typically requires a team of human evaluators who are given a sample of outputs and asked to fact-check them against a trusted source of truth. The rate is calculated as (Number of Hallucinated Outputs / Total Outputs Sampled) × 100.

- Toxicity Score: A quantifiable measure of how harmful, insulting, or profane an output is.

- Measurement: Use a pre-trained classifier API like Google’s Perspective API or OpenAI’s Moderation endpoint. These services return a score (e.g., between 0 and 1) for various categories (toxicity, threat, profanity). The quality plan will set a threshold (e.g., “no more than 1% of outputs may have a toxicity score > 0.8”).

- Latency: The time it takes for the model to generate a response after receiving a prompt.

- Measurement: This is tracked via application performance monitoring (APM) tools. It’s important to measure not just the average latency but also percentiles (e.g., p95, p99) to understand the worst-case user experience. The goal might be “p95 latency under 800ms.”

- Output Accuracy: For tasks with a ground-truth reference (like summarization or question-answering), this measures how closely the model’s output matches that reference.

- Measurement: Can be automated using overlap scores like ROUGE for summarization (BLEU is more common for translation), or handled by manual review for more nuanced tasks.
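Three of the metric definitions above translate directly into code: hallucination rate, the toxicity threshold, and percentile latency. The sketch assumes the inputs already exist:

- True/False verdicts from human fact-checkers.
- Toxicity scores from a classifier such as the Perspective API.
- Request latencies in milliseconds exported from the APM tool.

The sample values are made up for illustration.

```python
import math


def hallucination_rate(verdicts: list[bool]) -> float:
    """Percentage of sampled outputs that evaluators marked as hallucinated (True)."""
    return 100 * sum(verdicts) / len(verdicts)


def toxicity_gate(scores: list[float], threshold: float = 0.8, max_share: float = 0.01) -> bool:
    """True if no more than `max_share` of outputs score above `threshold`."""
    flagged = sum(1 for s in scores if s > threshold)
    return flagged / len(scores) <= max_share


def percentile(values: list[float], p: float) -> float:
    """Nearest-rank percentile: smallest value with at least p% of the data at or below it."""
    ordered = sorted(values)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


verdicts = [False] * 47 + [True] * 3               # 3 of 50 sampled outputs hallucinated
toxicity = [0.02] * 995 + [0.9] * 5                # 5 of 1,000 outputs above 0.8
latencies_ms = [300 + 10 * i for i in range(100)]  # 300, 310, ..., 1290 ms

print(f"Hallucination rate: {hallucination_rate(verdicts):.1f}%")
print(f"Toxicity gate passed: {toxicity_gate(toxicity)}")
print(f"p95 latency: {percentile(latencies_ms, 95)} ms")
```

APM tools may compute percentiles by interpolation rather than nearest rank. Whichever definition the team uses, it should be written into the quality plan so targets such as “p95 latency under 800ms” are compared like for like.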

📈 Bug Severity in Quality Tracking

A tiered system for classifying bugs is critical for prioritization.

| Bug Grade | Tracked Metric / Description | Example | Action Plan |
| --- | --- | --- | --- |
| Grade 1 (Critical) | Legal breach, ethical violation, PII leakage, major security vulnerability. | Model leaks a user’s credit card number or generates hate speech. | Immediate Rollback: Instantly switch traffic to a previous, stable model version. Hotfix: Deploy an emergency patch. Full Incident Report: Conduct a post-mortem, notify legal/compliance, and implement preventative measures. |
| Grade 2 (High) | High hallucination rate, consistently wrong or nonsensical outputs, major functional failure. | A customer support chatbot provides dangerously incorrect advice about a product. | Patch Model: If possible, patch the model’s behavior with a targeted fix. Fine-tune/Retrain: Initiate a new fine-tuning cycle on corrected data. Disable Feature: Temporarily disable the specific feature causing the issue. |
| Grade 3 (Medium) | Inconsistent tone, occasional minor hallucinations, slow or degraded performance. | An email-drafting AI sometimes uses an overly casual tone for formal contexts. Latency increases by 50% during peak hours. | Performance Optimization: Optimize the inference code or scale up the infrastructure. Prompt Engineering: Adjust the system prompt to better guide the model’s tone. Schedule for the next regular release cycle. |
| Grade 4 (Low) | Minor typos in output, UI glitches, slightly awkward phrasing. | The chatbot’s response contains a minor grammatical error. A button in the UI is misaligned. | UI Patch: Fix in the front-end application. Add to Backlog: Address in a future, non-urgent release. |
| Grade 5 (Informational) | Feature requests, suggestions for improvement. | A user suggests the AI should be able to generate content in a new language. | Add to Product Backlog: Prioritize against other potential features for future development sprints. |

📊 Quality Tracking Tools

- LangSmith / LangServe: LangSmith is excellent for deep visibility into LLM applications. It lets you trace the entire lifecycle of a request, from the initial prompt through any intermediate steps (like API calls or retrieval) to the final output. This is invaluable for debugging complex chains and monitoring token/cost usage per trace. LangServe, which deploys LangChain chains as REST APIs, can send its traces to LangSmith.

- Weights & Biases / MLflow: These are MLOps platforms essential for experiment tracking. They log every detail of a model training run, allowing you to compare performance across different model versions, hyperparameters, and datasets. They are the single source of truth for model lineage.

- Sentry / Datadog: These are application performance monitoring (APM) and error tracking tools. They are crucial for monitoring the production environment, alerting the team to code-level errors, tracking latency, and providing the observability needed to maintain SLAs.

4. Cost Tracking Techniques

Gen AI projects can be notoriously expensive. Proactive and granular cost tracking is not optional; it’s a core PM responsibility.

₹ Budget Components

- Cloud Compute (GPU Time): Often the largest cost. The PM must track this meticulously.

- Micro-Tracking: Use cloud provider tagging (e.g., on AWS, GCP, Azure) to assign every GPU instance to the specific project and even to a specific experiment. Use cloud cost management dashboards to monitor spending against the budget in real-time.

- Data Acquisition & Annotation:

- Micro-Tracking: If using an annotation vendor, track the cost per annotated item. If using an in-house team, track the man-hours spent on annotation as a project cost. For licensed data, this is a fixed cost but must be allocated to the project.

- Human Evaluation Costs:

- Micro-Tracking: Similar to annotation, track the hours spent by human evaluators for red teaming, UAT, and hallucination checking. This is a significant and often underestimated cost.

- Third-Party API Usage:

- Micro-Tracking: For models from OpenAI, Anthropic, or Google, track token usage religiously. Implement internal logging that records the prompt tokens and completion tokens for every single API call. Aggregate this data to forecast monthly bills and identify high-cost use cases.
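The per-call token logging described for third-party APIs can start as a small record type, tagged by feature and rolled up into cost, as in the sketch below.

- The per-million-token prices, the feature names, and the usage log are hypothetical.
- A real integration would fill `prompt_tokens` and `completion_tokens` from the usage counts that LLM APIs such as OpenAI's and Anthropic's return with each response.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical prices in USD per million tokens; substitute your provider's current rates.
PRICE_IN_PER_M = 2.50
PRICE_OUT_PER_M = 10.00


@dataclass
class UsageRecord:
    feature: str            # which product feature made the call
    prompt_tokens: int      # from the API response's usage metadata
    completion_tokens: int

    @property
    def cost(self) -> float:
        return (self.prompt_tokens * PRICE_IN_PER_M
                + self.completion_tokens * PRICE_OUT_PER_M) / 1_000_000


def cost_by_feature(log: list[UsageRecord]) -> dict[str, float]:
    """Aggregate spend per feature to spot high-cost use cases."""
    totals: dict[str, float] = defaultdict(float)
    for rec in log:
        totals[rec.feature] += rec.cost
    return dict(totals)


def forecast_month(spend_so_far: float, day_of_month: int, days_in_month: int = 30) -> float:
    """Naive linear run-rate forecast of the month-end bill."""
    return spend_so_far / day_of_month * days_in_month


# Hypothetical log after 10 days: many short ticket drafts, a few long thread summaries.
log = ([UsageRecord("ticket_draft", 500, 300)] * 3_000
       + [UsageRecord("thread_summary", 6_000, 400)] * 200)
per_feature = cost_by_feature(log)
spend = sum(per_feature.values())
for feature, cost in sorted(per_feature.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{feature:15s} ${cost:,.2f}")
print(f"Month-end forecast: ${forecast_month(spend, day_of_month=10):,.2f}")
```

In this made-up log, each summary call costs more than four times as much as a ticket draft. Volume still makes ticket drafting the larger line item, and that is the pattern per-feature aggregation is meant to surface.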

🚀 Cost Monitoring & Optimization Techniques

- LangSmith for Cost Monitoring: As mentioned, LangSmith records the tokens used in each traced request, and traces can be grouped by endpoint or user session. This allows the PM to calculate the precise cost per request and identify which features are most expensive.

- Cloud Budget Alerts: Set up automated alerts in your cloud provider’s dashboard (e.g., AWS Budgets). The PM should receive an email or Slack notification when spending reaches 50%, 75%, 90%, and 100% of the monthly forecast.

- Optimize Prompt Length: Shorter prompts and responses mean fewer tokens and lower costs. The PM should encourage the team to experiment with prompt engineering to achieve the same quality output with more concise inputs.

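- Schedule Off-Peak GPU Usage: Many cloud providers sell spare capacity at a steep discount as “spot” (or preemptible) instances, which the provider can reclaim at short notice. The PM can work with the team to run long, regularly checkpointed training jobs on this capacity, scheduling them overnight or on weekends where that improves availability, to take advantage of these savings.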

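- Model Quantization and Pruning: Encourage the team to explore techniques that make the model smaller and more efficient without a significant drop in quality. Quantization stores weights at lower numerical precision (e.g., 8-bit integers instead of 16- or 32-bit floats), and pruning removes weights that contribute little to the output. Both directly reduce inference costs.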
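The plain-Python sketch below shows symmetric per-tensor int8 quantization of individual weights.

- Each float is divided by a scale (the largest absolute weight divided by 127) and rounded to an integer in [-127, 127].
- At inference time, each integer is multiplied back by the scale.

This is a teaching sketch on a hypothetical handful of weights. Production toolchains quantize whole tensors with optimized kernels and often use per-channel or group-wise scales to reduce error.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor quantization to signed 8-bit integers."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    # Approximate reconstruction of the original floats.
    return [v * scale for v in q]


weights = [0.42, -1.27, 0.003, 0.88, -0.15]  # hypothetical fp32 weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_err = max(abs(w - r) for w, r in zip(weights, restored))
print(q)
print(f"scale={scale:.4f}, max abs error={max_err:.5f}")
# Storage: 1 byte per weight instead of 4 for float32, plus one scale per tensor.
```

The 4x smaller weights reduce memory and bandwidth per request, which is where the inference savings come from. The rounding error is at most half the scale per weight, but it can still shift model behavior. The quality evaluations should therefore be re-run on the quantized model.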

5. Key Responsibilities of a Gen AI Project Manager

The Gen AI PM is a multifaceted leader. Their responsibilities extend beyond traditional project management into the realms of technology, ethics, and business strategy.

- Cross-functional Team Management: A Gen AI team is a diverse mix of data scientists, ML engineers, MLOps engineers, data annotators, software developers, QA testers, and subject matter experts. The PM is the conductor of this orchestra, ensuring seamless communication, resolving conflicts, and fostering a collaborative environment.

- Proactive Risk Management: The PM is the owner of the risk register. They must constantly scan the horizon for new threats, including:

- Model Drift: The risk that the model’s performance degrades over time as real-world data changes.

- Regulatory Risk: New AI laws or regulations being introduced that impact the project.

- Ethical Risk: The model being used for unintended, harmful purposes.

- Bias Amplification: The model reinforcing or even amplifying existing societal biases present in the training data.

- Stakeholder Communication: The PM is the chief translator. They must be able to explain complex AI concepts (like hallucinations or perplexity) to non-technical business stakeholders in terms of business impact (like customer trust or brand risk). They manage expectations, provide regular, transparent updates, and build confidence in the project.

- Ethics and Compliance: The PM acts as the project’s ethical compass. They are responsible for ensuring the project aligns with the organization’s responsible AI framework, which typically includes principles like Fairness, Accountability, and Transparency (FAT). They work closely with legal and compliance teams to ensure all regulatory requirements are met.

- Continuous Improvement: The job doesn’t end at deployment. The PM is responsible for monitoring post-deployment feedback from users and monitoring systems. They use this data to plan future iterations, including retraining cycles to combat model drift and the development of new features based on user needs.

6. Best Practices for Success

- Use Agile with AI-Specific Adaptations: While Agile principles like sprints and stand-ups are valuable, they need adaptation.

- AI Adaptation: Sprints may be more research-focused (“spikes”) and less predictable. The goal of a sprint might not be a shippable feature but a learning outcome (e.g., “Determine if Model X can be fine-tuned to achieve a BLEU score above 0.7”). The sprint demo should always include a detailed model evaluation, not just a UI demonstration.

- Maintain Model Cards for Transparency: A model card is a short document that provides clear, concise information about a model. The PM should ensure one is created for every production model. It should include:

- The model’s intended use cases (and explicitly what it should not be used for).

- Details about the training data.

- Evaluation results for performance and bias.

- Ethical considerations and limitations.

- Include Human-in-the-Loop (HITL) Feedback Mechanisms: For high-stakes applications, build systems where a human can review, edit, or approve the AI’s output before it goes public. For all applications, include a simple feedback mechanism (e.g., a thumbs up/down button) so users can flag poor outputs. The PM is responsible for ensuring this feedback is collected and used to improve the model.

- Prioritize Explainability and Interpretability (XAI): While many Gen AI models are “black boxes,” it’s important to strive for explainability. The PM should encourage the team to use XAI techniques that can help explain why a model made a particular decision. This is crucial for debugging, building user trust, and meeting regulatory requirements.

- Establish a Robust Incident Response Protocol: As detailed earlier, knowing exactly what to do when a critical bug occurs is paramount. The PM must ensure this plan is documented, drilled, and ready to be executed at a moment’s notice to protect users and the organization.
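A model card can be kept as structured data next to the model in the registry and rendered on each release, which makes it easier to keep the card in step with the model.

- The sketch covers the four model-card elements listed under best practices.
- The field names, the example model, and the Markdown rendering are hypothetical choices.
- The evaluation entry is a placeholder, not a real result.
- Published templates, such as Hugging Face Hub model cards, include more sections.

```python
from dataclasses import dataclass, field


@dataclass
class ModelCard:
    name: str
    version: str
    intended_uses: list[str]
    out_of_scope_uses: list[str]
    training_data: str
    evaluation: dict[str, str]  # metric name -> result (performance and bias)
    limitations: list[str] = field(default_factory=list)

    def to_markdown(self) -> str:
        def bullets(items: list[str]) -> str:
            return "\n".join(f"- {item}" for item in items) or "- (none recorded)"

        eval_lines = "\n".join(f"- {m}: {v}" for m, v in self.evaluation.items())
        return "\n".join([
            f"# Model card: {self.name} v{self.version}",
            "## Intended use", bullets(self.intended_uses),
            "## Out-of-scope use", bullets(self.out_of_scope_uses),
            "## Training data", self.training_data,
            "## Evaluation (performance and bias)", eval_lines or "- (none recorded)",
            "## Ethical considerations and limitations", bullets(self.limitations),
        ])


card = ModelCard(
    name="support-draft-assistant",  # hypothetical model
    version="1.2.0",
    intended_uses=["Drafting replies for human support agents to review"],
    out_of_scope_uses=["Sending replies to customers without human review"],
    training_data="Internal support tickets with PII removed during pre-processing.",
    evaluation={"Hallucination rate (human-rated sample)": "<from latest evaluation run>"},
    limitations=["May reflect tone and coverage gaps in historical tickets"],
)
print(card.to_markdown())
```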

The role of the Generative AI Project Manager is challenging, demanding a unique blend of technical fluency, business acumen, and ethical diligence. But for those who can master its complexities, it is an opportunity to be at the very heart of the most transformative technology of our time, shaping the future of how we work, create, and interact with the digital world.

Discover more from SkillWisor
