In a world dominated by single-model chat interfaces, where we rely solely on ChatGPT, Claude, or Gemini, we often face a dilemma: which one do we trust? Each model has its own biases, hallucinations, and blind spots.

The llm-council repository (originally by Andrej Karpathy and hosted on Hugging Face Spaces by burtenshaw) proposes a democratic solution: Don’t trust one; trust the consensus.

My “Aha!” Moment: Validation of My Local Implementation

Seeing this architecture gain traction was incredibly satisfying because it mirrors the work I’ve attempted with Local RAG (Retrieval Augmented Generation).

I released a repository specifically focused on getting two distinct models—Gemma 3 and DeepSeek-AI 1.5B—to work in tandem on local hardware.

Check out my Repo here: Private_AI_Local_RAG_Gemma3_DeepSeek_Ai1.5b_Models

How I Built “Team AI” Locally

While the big players are doing this in the cloud via APIs, I wanted to prove that you could achieve collaborative intelligence privately.

In my implementation, I didn’t just want a chatbot; I wanted a workflow where models “talked” to each other:

- The Specialist Approach: I utilized DeepSeek for its coding and logic capabilities and Gemma for its general synthesis.

- The Interaction: Much like the Council’s “Review Phase,” my notebook demonstrates how to pass context and reasoning between these models. One acts as the retriever/reasoner, and the other refines the output for the user.

- Privacy First: The best part? This entire “council” runs locally. No data leaves the machine.

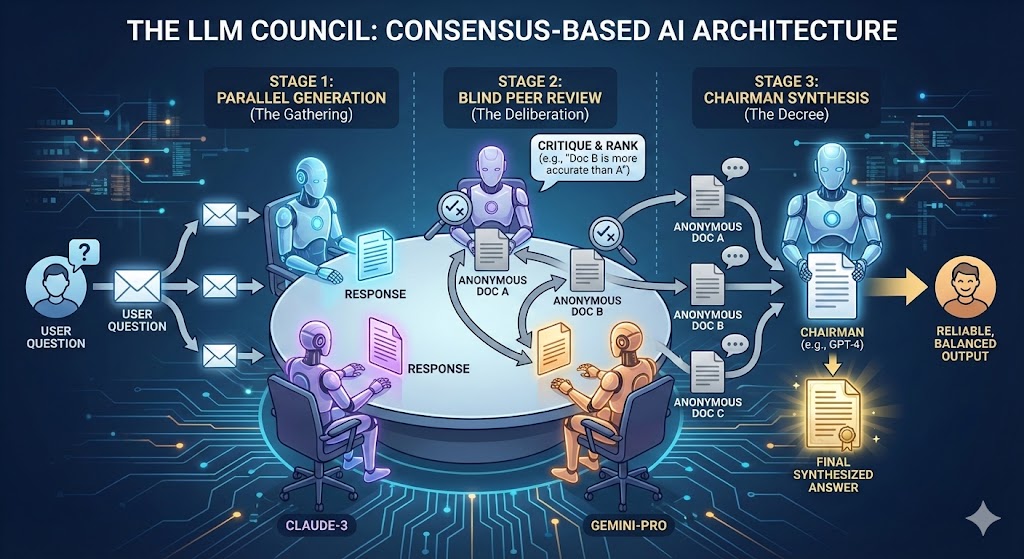

The Core Concept: A 3-Stage Pipeline

Unlike a standard chatbot that sends a prompt and awaits a completion, the LLM Council backend orchestrates a multi-step workflow. It treats LLMs not just as text generators, but as evaluators of each other’s work.

The backend (built with FastAPI and Python) manages this process in three distinct stages:

Stage 1: The Gathering (Parallel Generation)

When you ask the council a question, the backend doesn’t just send it to one model. It uses the OpenRouter API to dispatch your query asynchronously to a configured list of “Council Members” (e.g., openai/gpt-4, anthropic/claude-3.5-sonnet, google/gemini-pro).

Key Backend Logic:

- Async/Await: The system uses Python’s asyncio to call all models in parallel. This ensures the user waits only as long as the slowest model, rather than the sum of all response times.

- Graceful Degradation: If one council member fails (e.g., an API timeout), the system catches the error and proceeds with the remaining members. The show must go on.
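The two points above can be sketched in a few lines. This is a minimal illustration, not the repository's actual code: `query_model` is a hypothetical stand-in that simulates a network call (and a timeout for one made-up model name), and `gather_council` is an assumed helper name.

```python
import asyncio


async def query_model(model: str, prompt: str) -> str:
    """Hypothetical stand-in for a real API call to one council member."""
    await asyncio.sleep(0.01)  # simulate network latency
    if model == "flaky/model":  # made-up name used to simulate a failure
        raise TimeoutError("simulated API timeout")
    return f"{model} answer to: {prompt}"


async def gather_council(models, prompt, call=query_model):
    """Query every model concurrently and keep only the successful replies."""
    # return_exceptions=True makes gather() hand back exceptions as values
    # instead of raising on the first failure.
    results = await asyncio.gather(
        *(call(model, prompt) for model in models),
        return_exceptions=True,
    )
    # Drop failed members so the remaining answers can still be used.
    return {
        model: result
        for model, result in zip(models, results)
        if not isinstance(result, BaseException)
    }


if __name__ == "__main__":
    answers = asyncio.run(gather_council(["a/one", "flaky/model", "b/two"], "hi"))
    print(answers)
```

Because the calls overlap, total latency is roughly that of the slowest member, and a single failure only shrinks the result dictionary.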

Stage 2: The Blind Review (Deliberation)

This is the most innovative part of the codebase. Once all Stage 1 responses are collected, the backend prepares a new prompt for every council member.

Each model is given the user’s original question and the answers provided by its peers. Crucially, the backend anonymizes the authors.

- Instead of “Here is what GPT-4 said,” the prompt reads “Here is Response A.”

- The model is instructed to critique these responses and rank them based on accuracy and insight.

Why Anonymize? The goal is to reduce “brand bias.” A model may recognize the writing style of its own family, or treat a named competitor differently from an unnamed one. By presenting the answers only as “Response A” and “Response B,” the backend pushes each reviewer to judge the content rather than the author.
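A rough sketch of the anonymization step, under the assumption that Stage 1 results arrive as a dictionary mapping model name to answer text. The function name `anonymize` and the exact label format are illustrative choices, not taken from the repository.

```python
import string


def anonymize(responses: dict[str, str]) -> tuple[str, dict[str, str]]:
    """Replace model names with neutral labels (Response A, Response B, ...).

    Returns the text block to show reviewers and a private label-to-model
    mapping that the backend keeps so rankings can be de-anonymized later.
    """
    label_to_model = {}
    sections = []
    for letter, (model, text) in zip(string.ascii_uppercase, responses.items()):
        label = f"Response {letter}"
        label_to_model[label] = model
        sections.append(f"{label}:\n{text}")
    return "\n\n".join(sections), label_to_model


if __name__ == "__main__":
    block, mapping = anonymize(
        {"openai/gpt-4": "Paris", "google/gemini-pro": "Lyon"}
    )
    print(block)
    print(mapping)
```

The mapping never goes into the prompt, so reviewers only ever see the letters.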

Stage 3: The Chairman’s Decree (Synthesis)

Finally, the backend calls a designated “Chairman” model (often a highly capable model like GPT-4 or Gemini 1.5 Pro).

The Chairman receives:

- The original question.

- All candidate answers (Stage 1).

- The critiques and rankings from the council (Stage 2).

The Chairman’s job is to synthesize this information into a single, final answer—effectively taking the “best of all worlds” while discarding hallucinations pointed out during the review phase.
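To make the Chairman's inputs concrete, here is one way the three pieces could be assembled into a single prompt. The function name, section headings, and closing instruction are all assumptions made for illustration; the repository's real prompt wording may differ.

```python
def build_chairman_prompt(
    question: str, answers: dict[str, str], reviews: list[str]
) -> str:
    """Combine the question, Stage 1 answers, and Stage 2 reviews into one prompt."""
    answer_block = "\n\n".join(
        f"{label}:\n{text}" for label, text in answers.items()
    )
    review_block = "\n\n".join(
        f"Reviewer {i}:\n{text}" for i, text in enumerate(reviews, start=1)
    )
    return (
        f"Original question:\n{question}\n\n"
        f"Candidate answers:\n{answer_block}\n\n"
        f"Peer reviews and rankings:\n{review_block}\n\n"
        "Write one final answer. Prefer claims the reviewers agree on and "
        "drop claims they flagged as incorrect."
    )


if __name__ == "__main__":
    prompt = build_chairman_prompt(
        "What is the capital of France?",
        {"Response A": "Paris", "Response B": "Lyon"},
        ["Response B is wrong.\nFINAL RANKING:\n1. Response A\n2. Response B"],
    )
    print(prompt)
```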

Backend Technical Highlights

The backend folder reveals several smart engineering choices designed for flexibility and speed.

1. The OpenRouter “Switchboard” (openrouter.py)

Instead of maintaining separate SDKs for OpenAI, Anthropic, and Google, the project uses OpenRouter. This acts as a universal adapter.

- Unified Interface: The code only needs to know how to send messages to OpenRouter’s standard API endpoint.

- Model Swapping: Changing the council composition is as simple as editing a list of strings in config.py. You can swap gpt-4 for grok-beta without rewriting any logic.
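The "list of strings" idea might look like the sketch below. The constant and function names are assumptions, and the model identifiers are simply the examples mentioned earlier in this post. The request shape follows OpenRouter's OpenAI-compatible chat completions endpoint; the code only builds the request and does not send it.

```python
# Assumed config: the council is just a list of OpenRouter model identifiers.
COUNCIL_MODELS = [
    "openai/gpt-4",
    "anthropic/claude-3.5-sonnet",
    "google/gemini-pro",
]

OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"


def build_request(model: str, prompt: str, api_key: str) -> dict:
    """Build (but do not send) one chat-completion request for a model."""
    return {
        "url": OPENROUTER_URL,
        "headers": {
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        # Only the "model" string changes between council members.
        "json": {
            "model": model,
            "messages": [{"role": "user", "content": prompt}],
        },
    }


if __name__ == "__main__":
    for request in (build_request(m, "Hello", "YOUR_KEY") for m in COUNCIL_MODELS):
        print(request["json"]["model"])
```

Swapping a council member then means changing one string in the list; the request-building code stays untouched.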

2. Stateless “Vibe Coding”

The backend is intentionally lightweight. It doesn’t use a heavy database to store conversation history for the “Council” logic itself (though it stores chat logs locally). The complex state—who said what, and who ranked whom—is passed through the prompt chain in memory. This makes the system easy to fork, deploy, and modify.
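One plausible way to carry "who said what, and who ranked whom" in memory is a small data class that is filled in stage by stage. The class, its fields, and the `average_positions` helper are hypothetical; they show the idea rather than the repository's actual data structures.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class CouncilRun:
    """In-memory state for one question as it moves through the stages."""
    question: str
    answers: dict[str, str] = field(default_factory=dict)         # label -> answer text
    rankings: dict[str, list[str]] = field(default_factory=dict)  # reviewer -> ordered labels
    final_answer: Optional[str] = None


def average_positions(run: CouncilRun) -> dict[str, float]:
    """Mean rank position per label across all reviewers (lower is better)."""
    positions: dict[str, list[int]] = {}
    for ranking in run.rankings.values():
        for position, label in enumerate(ranking, start=1):
            positions.setdefault(label, []).append(position)
    return {label: sum(p) / len(p) for label, p in positions.items()}


if __name__ == "__main__":
    run = CouncilRun(question="What is the capital of France?")
    run.answers = {"Response A": "Lyon", "Response B": "Paris"}
    run.rankings = {
        "reviewer-1": ["Response B", "Response A"],
        "reviewer-2": ["Response A", "Response B"],
        "reviewer-3": ["Response B", "Response A"],
    }
    print(average_positions(run))
```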

3. Prompt Engineering as Code

The “magic” lies in the prompts defined in the backend. The Stage 2 prompt is rigorously structured to ensure the output is machine-parseable. It strictly asks for a “FINAL RANKING” section so the Python code can extract the winner without using complex regex or NLP parsers.
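As a sketch of why the fixed “FINAL RANKING” header helps: once the marker is known, extraction reduces to a string split plus one very simple pattern. The function below is an illustration under that assumption, not the project's actual parser, and it expects labels of the form "Response A".

```python
import re


def parse_final_ranking(review: str) -> list[str]:
    """Return labels in ranked order from the text after 'FINAL RANKING'."""
    marker = "FINAL RANKING"
    if marker not in review:
        return []  # the reviewer ignored the required format
    # Only look at text after the marker, so labels mentioned in the
    # critique itself do not pollute the ranking.
    tail = review.split(marker, 1)[1]
    ranked = []
    for label in re.findall(r"Response [A-Z]", tail):
        if label not in ranked:  # keep first occurrence, preserve order
            ranked.append(label)
    return ranked


if __name__ == "__main__":
    review = (
        "Response A is vague. Response B is correct and well sourced.\n\n"
        "FINAL RANKING:\n1. Response B\n2. Response A\n"
    )
    print(parse_final_ranking(review))
```

Returning an empty list for a malformed review lets the caller skip that reviewer instead of failing the whole run.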

Why This Matters

The karpathy-llm-council project represents a shift from Prompt Engineering to Flow Engineering.

- Hallucination Check: If Model A hallucinates a fact, Model B and C will likely catch it in Stage 2 (“Response A claims X, but this is incorrect because…”).

- Bias Mitigation: By combining the perspectives of models trained by different companies (Google, OpenAI, Anthropic), you are less exposed to the blind spots of any single vendor.

Conclusion

This repository is more than just a fun experiment; it’s a blueprint for the future of reliable AI agents. By building a backend that privileges consensus over individual confidence, we can create systems that are significantly more trustworthy than any single model could be on its own.

- Repo: burtenshaw/karpathy-llm-council

- Original Author: Andrej Karpathy

- Tech Stack: Python, FastAPI, OpenRouter, React
