The Crisis Hidden in Speed

There’s a pattern that repeats across technology programs—in NLP initiatives, data analytics transformations, and AI enablement projects. The pressure to move fast overshadows the need to think clearly. Teams rush to build, project managers rush to promise, stakeholders rush to demand. Somewhere in that race, the foundation cracks.

Projects rarely collapse because people lack intelligence or capability. They derail because decisions were made too quickly, without understanding the problem, the impact, or the consequences. Complexity wasn’t respected. Risks weren’t explored. Assumptions weren’t challenged.

The truth is simple: Speed without understanding is just chaos delivered quicker.

The Invisible Cost of Reacting Instead of Thinking

Every project experiences moments when urgency takes over. A customer escalates. A stakeholder demands change. A model fails. A dashboard breaks. A deadline collapses.

The natural instinct is to respond immediately—to say yes, to solve instantly, to demonstrate control. The quick answer feels like leadership. It feels like competence. It feels safe.

But quick answers carry hidden debt.

An NLP Example: A stakeholder requests last-minute regional language support by next week. Saying yes without examination feels collaborative, but soon the team is firefighting. Training data doesn’t exist. Accuracy deteriorates. Deployment timelines collapse. What began as goodwill turns into disappointment and mistrust.

A Data Analytics Example: A VP requests dozens of additional metrics, believing it will drive deeper insights. Without thoughtful discussion, the team adds them hastily, turning the dashboard into noise. Instead of powerful insight, the result is distraction—a tool that’s visually impressive but strategically meaningless.

Why Great Leaders Ask Questions, Not Give Fast Answers

Thoughtful questions accomplish what rushed answers cannot:

- Slow down emotional reaction

- Reveal missing information

- Clarify priorities

- Highlight risks

- Create shared understanding

- Eliminate assumption-based decisions

Questions turn confusion into clarity, risks into plans, and conflicts into alignment.

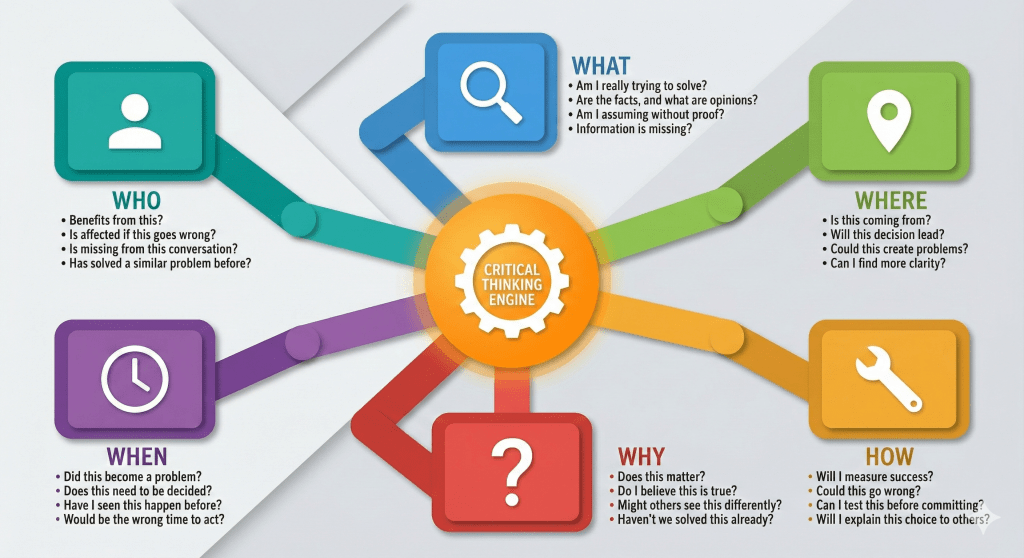

The Six-Dimensional Thinking Framework

Instead of relying on impulse, effective leaders process decisions through six essential dimensions. Each dimension is anchored by a critical question word that reveals what is truly needed.

1. WHO: Understanding People First

Projects are human systems before they are technical systems.

Every decision touches someone—a team member, a stakeholder, a customer, a user. Thoughtful leaders start by understanding those people.

Ask:

- Who benefits from this decision? Who suffers?

- Who has context that I’m missing?

- Who should be consulted before acting?

- Whose voice is missing from this conversation?

The loudest voice isn’t always the most important. Sometimes the quietest voice carries the truth that prevents disaster—a QA engineer warning about edge cases, a data scientist concerned about training volume, an analyst unsure of business definitions.

Projects fail when decisions ignore people. They succeed when decisions are built around them.

2. WHAT: Separating Facts from Assumptions

Every problem comes wrapped in assumptions, opinions, confusion, and emotion. Stakeholders describe symptoms, not causes. Teams defend suggestions instead of facts. Urgency distorts perspective.

Thoughtful leaders separate facts from beliefs.

Ask:

- What exactly is happening?

- What is the real problem we’re trying to solve?

- What are facts versus assumptions?

- What information is missing?

- What assumptions are controlling this discussion without being validated?

Most wasted effort in projects comes not from poor execution but from poor understanding. Not from bad talent, but from bad assumptions. Not from slow teams, but from unclear direction.

The greatest efficiency comes not from moving fast, but from knowing precisely where to move.

3. WHEN: The Wisdom of Timing

The pressure to decide now is the most dangerous pressure in project life. Many decisions are made too early or too late—rarely at the right time.

Ask:

- When did this truly become a problem?

- When is the real deadline—not the emotional deadline?

- When have we faced similar issues, and what did we learn?

- When would be the worst time to act?

Timing is an underappreciated dimension of risk. Deploying a new model during high-traffic periods can be catastrophic. Adjusting a data pipeline during financial close creates reporting chaos. Agreeing to a scope change during a critical milestone can collapse morale.

Some decisions are urgent. Many decisions only feel urgent. Wisdom knows the difference.

4. WHERE: Understanding Origins and Consequences

Where a request comes from matters. Where a decision leads matters even more.

Ask:

- Where did this request originate—from data, customers, or opinion?

- Where will this decision take us in six months or a year?

- Where are the hidden risks and weak links?

- Where can we find clarity if we don’t yet have enough?

Every decision changes the landscape. Technical debt begins as a shortcut that becomes permanent. Process inefficiency begins as flexibility that becomes expectation. Reputation risk begins as tolerance that becomes normalization.

Great leaders think forward—beyond the immediate task into the future ecosystem that will inherit the consequences.

5. WHY: The Anchor of Clarity

WHY prevents waste. WHY guards against ego-driven decisions. WHY ensures alignment.

Ask:

- Why does this matter?

- Why is this the right priority now?

- Why will this create value?

If the answer is unclear, the work is unnecessary.

Some of the most damaging outcomes emerge not from malice, but from well-intentioned work without purpose. Teams build impressive solutions nobody needs. NLP models are optimized for metrics disconnected from business value. Dashboards measure everything except what drives decisions.

WHY is the filter that protects resources and focuses energy. WHY turns motion into progress.

6. HOW: Engineering Execution

Once clarity is achieved about people, reality, timing, risk, and purpose, execution becomes intentional instead of chaotic.

Ask:

- How will we measure success?

- How could this fail, and how will we protect against failure?

- How will we test and validate before committing?

- How will we explain the reasoning so others trust it?

Great execution is not luck—it’s engineering. It’s preparation and transparency. It’s clarity turned into action.

A well-reasoned decision strengthens trust. A poorly reasoned one destroys it.

Real Scenarios: Thoughtful Decision-Making in Action

The framework comes alive when applied to real situations. Here are common scenarios in data analytics and NLP projects where thoughtful questioning prevents disaster.

Scenario 1: The Last-Minute Dashboard Request

The Situation: Three days before a board presentation, the CFO requests a new executive dashboard combining financial, operational, and customer data with real-time updates.

The Reactive Response: “Yes, we’ll get it done.” The team works around the clock, pulls data from multiple sources, creates visualizations, and delivers something impressive-looking by the deadline.

The Thoughtful Response: Apply the framework:

WHO:

- Who will actually use this dashboard daily? (Discovery: Only the CFO and two directors)

- Who owns the data sources we need? (Discovery: Finance owns some, Operations owns others, IT controls access)

- Who has tried building something similar before? (Discovery: A similar attempt failed last year due to data quality issues)

WHAT:

- What problem is this solving? (Discovery: The CFO wants to monitor three specific KPIs for the board, not “everything”)

- What data actually exists and is reliable? (Discovery: Real-time operational data has a 6-hour lag and frequent gaps)

- What are we assuming about data quality? (Discovery: Customer data hasn’t been cleaned in months)

WHEN:

- When is this truly needed? (Discovery: The board meeting agenda is flexible; the CFO wants the dashboard eventually but framed it as urgent, assuming that requests without a deadline get delayed)

- When have we faced similar urgent requests? (Discovery: Rushed dashboards are rarely used after launch)

WHERE:

- Where will this dashboard live—existing BI tool or new platform? (Discovery: New platform requires infrastructure nobody has provisioned)

- Where are the data quality risks? (Discovery: Three critical data sources are maintained by teams being reorganized)

WHY:

- Why does the CFO need this now? (Discovery: The real need is answering one board question: “Are we on track for Q4 targets?”)

- Why real-time instead of daily updates? (Discovery: Real-time isn’t actually necessary; daily is sufficient)

HOW:

- How will we validate accuracy before the board sees it? (Discovery: No validation plan existed)

- How will we handle data source failures? (Discovery: No fallback strategy)

- How will we explain limitations transparently? (Discovery: Setting expectations prevents future disappointment)

The Outcome: After these questions, the scope changes dramatically. Instead of a complex real-time dashboard, the team builds a focused daily report answering three specific questions, using validated data sources, with clear caveats. Delivery time: one week, including proper testing. The CFO is more satisfied because the solution actually works and is trustworthy.

Risk Prevented: Delivering an impressive but unreliable dashboard that produces wrong numbers during a board meeting, destroying credibility and requiring emergency fixes.
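One way to make “clear caveats” concrete is to check how fresh each source is before the daily report goes out, and to print a caveat instead of silently showing stale or missing numbers. This is a minimal sketch, assuming each source records the time of its last successful load; the source names, the 24-hour limit, and the function name are illustrative, not part of any particular BI tool.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, List, Optional


def freshness_caveats(
    last_loaded: Dict[str, Optional[datetime]],
    max_age: timedelta,
    now: datetime,
) -> List[str]:
    """Return one caveat line per source that is missing or older than max_age."""
    caveats = []
    for source, loaded_at in sorted(last_loaded.items()):
        if loaded_at is None:
            # A failed or missing load becomes a visible caveat, not a silent gap.
            caveats.append(f"{source}: no successful load recorded")
            continue
        age = now - loaded_at
        if age > max_age:
            hours = age.total_seconds() / 3600
            caveats.append(f"{source}: data is {hours:.0f}h old; figures may be incomplete")
    return caveats


# Usage with hypothetical sources feeding a daily report.
now = datetime(2024, 1, 2, 9, 0, tzinfo=timezone.utc)
print(freshness_caveats(
    {"finance": now - timedelta(hours=3),
     "operations": now - timedelta(hours=30),
     "customers": None},
    max_age=timedelta(hours=24),
    now=now,
))
```

The caveat lines can be shown directly under the report header, which keeps the limitation visible to the CFO rather than buried in a data-team channel.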

Scenario 2: The Data Migration Pressure

The Situation: Leadership wants to migrate from the legacy analytics database to a modern cloud data warehouse. They want it done in 30 days to “save costs immediately.”

The Reactive Response: “We can do it.” The team starts migrating tables, rewriting queries, and rushing to move everything before the deadline.

The Thoughtful Response:

WHO:

- Who depends on the current system daily? (Discovery: 47 business users across 8 departments rely on it for critical reports)

- Who will be impacted if something breaks during migration? (Discovery: Finance month-end close process depends on 12 specific reports)

- Who has expertise in the new platform? (Discovery: Only one team member has experience; everyone else needs training)

WHAT:

- What exactly are we migrating—all tables or critical ones first? (Discovery: 200 tables exist, but only 30 are actively used)

- What dependencies exist between systems? (Discovery: 6 downstream applications consume data via APIs that will break)

- What is the real cost savings? (Discovery: Savings won’t materialize for 6 months due to parallel running costs)

WHEN:

- When is the financial close period? (Discovery: In 3 weeks—the worst possible time for disruption)

- When do annual contracts renew? (Discovery: Legacy system contract doesn’t expire for 4 months)

- When have we done similar migrations? (Discovery: Last migration took 3 months and had significant rollback issues)

WHERE:

- Where will data quality issues surface? (Discovery: Legacy system has undocumented transformations that will be lost)

- Where are single points of failure? (Discovery: One person knows how the ETL processes actually work)

- Where could data get corrupted during migration? (Discovery: Date formats, null handling, and encoding differ between systems)

WHY:

- Why 30 days specifically? (Discovery: Arbitrary target, not driven by actual business need)

- Why migrate everything at once? (Discovery: Phased approach was never considered)

- Why is this the priority now? (Discovery: An executive read an article about cloud migrations)

HOW:

- How will we validate data accuracy after migration? (Discovery: No comparison strategy exists)

- How will we handle failures during migration? (Discovery: No rollback plan)

- How will we train users on new interfaces and query patterns? (Discovery: Training wasn’t budgeted)

- How will we maintain both systems during transition? (Discovery: No plan for parallel operation)

The Outcome: The timeline changes to 90 days with a phased approach. Critical tables migrate first with extensive validation. Migration happens after financial close. Users receive training. Parallel systems run for 30 days. The migration succeeds without data loss or business disruption.

Risk Prevented: Catastrophic failure during financial close, corrupted historical data, business users unable to access critical information, expensive rollback and reputation damage.
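The question “How will we validate data accuracy after migration?” has a cheap first answer: compare the row count and a content fingerprint for each migrated table. This is a minimal sketch, assuming rows from both systems can be fetched as tuples and that values have already been normalized to the same types. The function names are hypothetical. The check catches dropped rows and changed values, including NULLs silently turned into empty strings, but it does not say which rows differ.

```python
import hashlib
from typing import Iterable, List, Sequence, Tuple


def table_fingerprint(rows: Iterable[Sequence[object]]) -> Tuple[int, str]:
    """Return (row_count, order-independent digest) for one table's rows."""
    count = 0
    combined = 0
    for row in rows:
        # Serialize every value the same way on both sides; a backslash-N marker
        # stands for NULL so that NULL and "" do not hash identically.
        encoded = "\x1f".join("\\N" if v is None else str(v) for v in row).encode("utf-8")
        digest = int.from_bytes(hashlib.sha256(encoded).digest()[:8], "big")
        # Summing mod 2**64 ignores row order; XOR would let duplicate rows cancel out.
        combined = (combined + digest) % (1 << 64)
        count += 1
    return count, f"{combined:016x}"


def compare_tables(name: str, legacy_rows, warehouse_rows) -> List[str]:
    """Return problems found for one table (an empty list means the table matches)."""
    legacy_count, legacy_hash = table_fingerprint(legacy_rows)
    new_count, new_hash = table_fingerprint(warehouse_rows)
    if legacy_count != new_count:
        return [f"{name}: row count {legacy_count} vs {new_count}"]
    if legacy_hash != new_hash:
        return [f"{name}: same row count but contents differ"]
    return []


# Usage: same rows in a different order, except one NULL became an empty string.
legacy = [(1, "2024-01-31", None), (2, "2024-02-29", 10.5)]
migrated = [(2, "2024-02-29", 10.5), (1, "2024-01-31", "")]
print(compare_tables("orders", legacy, migrated))
```

Running this per table during the parallel-operation period gives a simple pass/fail list; tables that fail can then be investigated with a row-level diff.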

Scenario 4: The NLP Model Deployment Rush

The Situation: A sentiment analysis model for customer feedback has been in development for 3 months. Marketing leadership wants it deployed immediately to analyze social media mentions for a product launch happening next week.

The Reactive Response: “It’s ready, let’s deploy.” The model showed 89% accuracy in testing, which sounds impressive. The team deploys to production and connects it to live social media feeds.

The Thoughtful Response:

WHO:

- Who will act on the sentiment insights? (Discovery: Marketing has no process defined for responding to sentiment data)

- Who validated the model beyond the data science team? (Discovery: No business users reviewed sample outputs)

- Who owns the decision if the model produces incorrect sentiment scores? (Discovery: Unclear accountability between data science and marketing)

- Who are the actual customers whose feedback we’re analyzing? (Discovery: Model was trained on product reviews but will analyze social media—different language patterns)

WHAT:

- What exactly does 89% accuracy mean? (Discovery: Accurate on neutral sentiment but struggles with sarcasm, cultural context, and mixed emotions)

- What data was the model trained on? (Discovery: English product reviews from 2 years ago, primarily US market)

- What languages and dialects will appear in social media? (Discovery: Product launches in 12 countries with regional slang, abbreviations, and code-switching)

- What edge cases were tested? (Discovery: None—testing only covered standard positive/negative/neutral cases)

- What happens when the model encounters emojis, memes, or image-based posts? (Discovery: Model can’t process these at all)
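A single accuracy figure can hide exactly the weaknesses these answers uncover. Breaking it down per class is a quick first check. The sketch below computes overall accuracy and per-class recall from two parallel label lists. The toy labels in the usage lines are invented for illustration and are not results from the model in this scenario.

```python
from collections import Counter
from typing import Dict, List, Tuple


def accuracy_report(y_true: List[str], y_pred: List[str]) -> Tuple[float, Dict[str, float]]:
    """Overall accuracy plus per-class recall (share of each true class predicted correctly)."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("need two non-empty label lists of equal length")
    support = Counter(y_true)
    correct = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    overall = sum(correct.values()) / len(y_true)
    per_class = {label: correct[label] / n for label, n in support.items()}
    return overall, per_class


# Toy data: mostly neutral examples, which the model handles well.
y_true = ["neutral"] * 8 + ["negative"] * 2
y_pred = ["neutral"] * 8 + ["neutral", "negative"]
overall, per_class = accuracy_report(y_true, y_pred)
print(overall)    # 0.9
print(per_class)  # {'neutral': 1.0, 'negative': 0.5}
```

In the toy data, 90% overall accuracy coexists with missing half of the negative examples. If scikit-learn is already in use, `sklearn.metrics.classification_report` produces a similar per-class breakdown.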

WHEN:

- When was the training data collected? (Discovery: 2022-2023, before recent changes in how people discuss products online)

- When will social media volume peak? (Discovery: Launch day will see 50x normal volume—model hasn’t been tested at that scale)

- When is the last moment we can safely deploy without risking the launch? (Discovery: Needs 72 hours of monitoring before high-stakes usage)

- When have we deployed similar models before? (Discovery: Previous chatbot deployment had bias issues that took weeks to fix)

WHERE:

- Where will false positives cause the most damage? (Discovery: Misidentifying negative sentiment as positive could prevent response to actual customer issues)

- Where will the model perform worst? (Discovery: Sarcasm, regional dialects, industry-specific terminology, and competitor mentions)

- Where is the model infrastructure running? (Discovery: Dev environment that hasn’t been tested for production load)

- Where will the results be displayed? (Discovery: Executive dashboard where incorrect data will inform major decisions)

WHY:

- Why deploy now versus after the launch? (Discovery: The real reason is that an executive saw a competitor using sentiment analysis; the actual business need is unclear)

- Why sentiment analysis specifically? (Discovery: Marketing actually needs to identify product issues and questions, not just sentiment)

- Why this model architecture versus alternatives? (Discovery: Chosen because it was fastest to build, not because it fit the use case)

HOW:

- How will we validate outputs in production? (Discovery: No human review process planned)

- How will we handle languages the model wasn’t trained on? (Discovery: No plan—model will produce unreliable scores)

- How will we communicate limitations to executives? (Discovery: Dashboard shows confidence scores as percentages without context)

- How will we measure actual business impact versus model accuracy? (Discovery: No success metrics beyond technical accuracy)

- How will we respond when the model misclassifies something important? (Discovery: No escalation process exists)

- How will we retrain the model as language evolves? (Discovery: No plan for ongoing maintenance)

The Outcome: After thoughtful questioning, the timeline shifts. The team:

- Conducts business user testing with real social media samples

- Builds a human-review workflow for high-confidence negative predictions

- Adds language detection to route non-English content appropriately

- Implements confidence thresholds—only high-confidence predictions go to executives

- Deploys to a subset of channels first (Twitter/X only) for validation

- Monitors for one week before full deployment

- Documents clear limitations in the dashboard (“This model works best for English product feedback; sarcasm detection is limited”)
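The routing rules in this list can be written down as a small, testable function, which also makes the thresholds easy to review with marketing. This sketch assumes an upstream language-detection step and a model that returns a label plus a confidence score. The threshold values and queue names are hypothetical and would need tuning against human-labelled social media samples.

```python
from dataclasses import dataclass

# Hypothetical thresholds; tune them against human-labelled samples.
EXEC_CONFIDENCE = 0.85
REVIEW_CONFIDENCE = 0.60


@dataclass
class Prediction:
    text: str
    language: str      # from an upstream language-detection step, e.g. "en"
    label: str         # "positive", "negative" or "neutral"
    confidence: float  # model score between 0 and 1


def route(pred: Prediction) -> str:
    """Return the destination queue for one prediction."""
    if pred.language != "en":
        # The model was trained on English reviews only.
        return "non_english_queue"
    if pred.label == "negative" and pred.confidence >= REVIEW_CONFIDENCE:
        # Likely product issues go to a person before reaching executives.
        return "human_review"
    if pred.confidence >= EXEC_CONFIDENCE:
        return "executive_dashboard"
    return "low_confidence_hold"


print(route(Prediction("Love the new battery life", "en", "positive", 0.93)))  # executive_dashboard
print(route(Prediction("Great, it broke on day one", "en", "negative", 0.71)))  # human_review
```

Keeping the rules in one function means a change of threshold is a reviewed code change rather than an undocumented dashboard setting.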

Result: The model launches after the product release, with proper safeguards. Marketing catches several product issues early through validated negative sentiment. Three false positives are caught by human review before reaching executives. The team identifies gaps (emoji handling, regional slang) and begins collecting training data for model v2.

Risk Prevented:

- Misclassifying serious customer complaints as positive feedback

- Executive decisions based on inaccurate sentiment during critical launch period

- Reputation damage from appearing tone-deaf to customer concerns

- Model failure under production load during high-visibility launch

- Cultural insensitivity from misinterpreting non-US feedback

- Wasted resources responding to false positive “crises”

Scenario 5: The Data Onboarding and Metadata Validation Request

The Situation: A new partner organization wants to share customer interaction data—12 million records covering 3 years of support tickets, chat logs, and email exchanges. Leadership wants to onboard this data “as quickly as possible” to expand analytics capabilities and train better support automation models.

The Reactive Response: “Send us the files, we’ll load them.” The team receives CSV exports, loads them into the data lake, creates some basic dashboards, and declares success.

The Thoughtful Response:

WHO:

- Who owns this data at the source? (Discovery: Three different teams at the partner organization, each with different data practices)

- Who will consume this data once onboarded? (Discovery: Customer support analytics, ML model training, compliance reporting, and executive dashboards)

- Who has accountability if data quality issues emerge later? (Discovery: No SLA or data quality agreement exists)

- Who verified that we have permission to use this data? (Discovery: Legal hasn’t reviewed data sharing agreements)

- Who are the customers represented in this data? (Discovery: Includes EU customers—GDPR compliance needs verification)

WHAT:

- What fields are actually in the dataset? (Discovery: 247 columns with cryptic names like “field_47” and “custom_attr_legacy_09”)

- What do these fields actually mean? (Discovery: No data dictionary provided; field names don’t match our definitions)

- What is the data quality level? (Discovery: Partner admits they have “some nulls and duplicates” but hasn’t quantified)

- What are the data types? (Discovery: Dates stored as strings in multiple formats; numeric IDs stored as text with leading zeros)

- What transformations were applied before export? (Discovery: Partner “cleaned” data but can’t explain what was changed)

- What PII exists in this dataset? (Discovery: Email bodies contain customer names, addresses, credit card fragments, and phone numbers)

- What assumptions is the partner making about how we’ll use this? (Discovery: They think we’ll only use aggregated data, but we plan to train models on individual records)
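Two of these findings, dates stored as strings in several formats and numeric IDs stored as text with leading zeros, are worth handling explicitly at load time rather than letting a loader guess. This is a minimal sketch, assuming the formats listed in the hypothetical `DATE_FORMATS` tuple are the ones found while profiling the export. Formats are tried in order, so an ambiguous value such as 03/04/2021 resolves to the first match; the format should be confirmed per source system rather than left to ordering.

```python
from datetime import date, datetime
from typing import Optional

# Illustrative formats; replace with the ones actually observed per source system.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%Y%m%d")


def parse_date(raw: Optional[str]) -> Optional[date]:
    """Try each known format in order; return None if nothing matches."""
    if raw is None or not raw.strip():
        return None
    text = raw.strip()
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text, fmt).date()
        except ValueError:
            continue
    return None


def normalize_id(raw: str) -> str:
    """Keep numeric IDs as text: casting "000123" to int would drop the zeros."""
    text = raw.strip()
    if not text.isdigit():
        raise ValueError(f"unexpected ID value: {raw!r}")
    return text
```

Values for which `parse_date` returns None should be counted and reported back to the partner rather than dropped, since they point to formats nobody has documented yet.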

WHEN:

- When was this data extracted? (Discovery: Rolling extracts over 3 months—data has inconsistent timestamps)

- When did their system definitions change? (Discovery: Support ticket categorization changed twice in 3 years; old categories don’t map to new ones)

- When do we actually need this data operational? (Discovery: No urgent deadline exists; leadership said “quickly” out of habit)

- When will we audit data quality? (Discovery: Team planned to load first and check later)

- When does our compliance certification audit happen? (Discovery: In 6 weeks—uncertified PII data would fail audit)

WHERE:

- Where did this data originate? (Discovery: Four different source systems merged together; each has different reliability levels)

- Where are the data quality issues likely concentrated? (Discovery: Legacy system data from 2021 has known encoding issues)

- Where will this data be stored—what security tier? (Discovery: Contains PII but team planned to use standard data lake without encryption)

- Where will metadata be documented? (Discovery: No metadata repository planned; knowledge would live in team members’ heads)

- Where could data lineage break? (Discovery: Partner’s ETL process has undocumented transformations)

WHY:

- Why do we need 3 years of history versus recent data? (Discovery: Model training only needs 6 months; leadership assumed more is better)

- Why onboard everything versus selective fields? (Discovery: 180 of 247 fields are never used by the partner)

- Why now versus after establishing data quality standards? (Discovery: Executive enthusiasm without a business case)

- Why this partner’s data specifically? (Discovery: It’s convenient, not strategically valuable)

HOW:

- How will we validate data completeness? (Discovery: No baseline—don’t know if we received all 12 million records)

- How will we handle missing values? (Discovery: 23 critical fields have 40-60% null rates)

- How will we map their field definitions to ours? (Discovery: Same field names mean different things; “customer_id” is an email address in their system but a numeric ID in ours)

- How will we de-duplicate records? (Discovery: Same ticket appears multiple times due to status changes)

- How will we mask or remove PII? (Discovery: Email bodies need NLP-based PII detection; simple field-level masking won’t work)

- How will we detect data drift over time? (Discovery: No monitoring planned)

- How will we version the metadata? (Discovery: When partner changes definitions, we’d lose historical context)

- How will we test downstream impacts? (Discovery: 8 existing dashboards might break if new data has different value ranges)
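For tickets that appear once per status change, one common rule is to keep only the most recent version of each ticket. The sketch assumes hypothetical `ticket_id` and `updated_at` fields, with `updated_at` already parsed into a datetime. Whether “latest status wins” is the right rule is a business decision to confirm with the partner, since some analyses need the full status history.

```python
from datetime import datetime
from typing import Any, Dict, List


def latest_per_ticket(rows: List[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Keep one row per ticket_id: the one with the latest updated_at.

    Ties keep the row seen first; output order follows each ticket's first appearance.
    """
    latest: Dict[Any, Dict[str, Any]] = {}
    for row in rows:
        key = row["ticket_id"]
        current = latest.get(key)
        if current is None or row["updated_at"] > current["updated_at"]:
            latest[key] = row
    return list(latest.values())


rows = [
    {"ticket_id": "T1", "status": "open", "updated_at": datetime(2023, 1, 1)},
    {"ticket_id": "T2", "status": "open", "updated_at": datetime(2023, 1, 2)},
    {"ticket_id": "T1", "status": "closed", "updated_at": datetime(2023, 1, 5)},
]
print([(r["ticket_id"], r["status"]) for r in latest_per_ticket(rows)])
```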

The Outcome: The project transforms into a structured data onboarding process:

Phase 1: Metadata Discovery & Validation (Week 1-2)

- Partner provides comprehensive data dictionary with business definitions

- Team creates metadata catalog mapping 247 fields to business concepts

- Discovery reveals only 67 fields are relevant; others are deprecated/unused

- PII scanning identifies 12 high-risk fields requiring special handling

- Legal reviews data sharing agreement and adds compliance clauses

Phase 2: Data Quality Assessment (Week 3)

- Partner provides data quality report: null rates, duplicate counts, value distributions

- Team identifies 5 critical quality issues requiring remediation at source

- Partner fixes export logic to eliminate duplicates and standardize date formats

- Team and partner establish a data quality SLA: <5% nulls in critical fields, <1% duplicates
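An SLA like this translates directly into an automated check that can run on every delivered batch. This is a minimal sketch, assuming records arrive as dictionaries and that an empty string counts as null. The field names in the usage lines are hypothetical, and the default thresholds mirror the 5% and 1% figures in the SLA.

```python
from typing import Any, Dict, List, Sequence


def check_quality_sla(
    records: List[Dict[str, Any]],
    critical_fields: Sequence[str],
    key_fields: Sequence[str],
    max_null_rate: float = 0.05,
    max_duplicate_rate: float = 0.01,
) -> List[str]:
    """Return human-readable SLA breaches for one batch (an empty list means it passes)."""
    total = len(records)
    if total == 0:
        return ["batch is empty"]
    breaches = []
    for field in critical_fields:
        # Assumption: missing keys and empty strings both count as null.
        nulls = sum(1 for r in records if r.get(field) in (None, ""))
        rate = nulls / total
        if rate >= max_null_rate:
            breaches.append(f"{field}: {rate:.1%} null")
    seen = set()
    duplicates = 0
    for r in records:
        key = tuple(r.get(f) for f in key_fields)
        if key in seen:
            duplicates += 1
        else:
            seen.add(key)
    dup_rate = duplicates / total
    if dup_rate >= max_duplicate_rate:
        breaches.append(f"duplicates on {', '.join(key_fields)}: {dup_rate:.1%}")
    return breaches


# Usage: 100 hypothetical records, 5 of them missing an email.
batch = [{"ticket_id": str(i), "email": "x@example.com"} for i in range(100)]
for r in batch[:5]:
    r["email"] = None
print(check_quality_sla(batch, critical_fields=["email"], key_fields=["ticket_id"]))
```

A non-empty result can block the load (a “quality gate”) and be sent back to the partner as evidence, which keeps the SLA enforceable rather than aspirational.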

Phase 3: Metadata Standards & Lineage (Week 4)

- Create semantic layer mapping partner fields to enterprise data model

- Document transformation rules: how “customer_id” converts between systems

- Build data lineage documentation showing source → transformation → destination

- Version metadata in Git with change history and approval workflow

Phase 4: Pilot Load & Validation (Week 5-6)

- Load 3 months of data (not 3 years) as proof of concept

- Implement automated validation checks: schema compliance, referential integrity, value range checks

- Build PII masking pipeline using NLP for unstructured text

- Create data quality dashboard showing completeness, accuracy, consistency metrics
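The text is right that email bodies need NLP-based PII detection. A regex pass is still a cheap first layer for structured patterns such as email addresses, card numbers, and phone numbers. The sketch below is that simpler technique, not an NLP model: it misses names and street addresses, and it flags some non-PII strings such as long order numbers or dates, so its hits and misses should be reviewed. The patterns are illustrative assumptions, not a vetted PII ruleset.

```python
import re

# Illustrative patterns, applied in order (cards before phones, because the
# phone pattern would also match a card number).
PII_PATTERNS = [
    ("EMAIL", re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")),
    ("CARD", re.compile(r"\b(?:\d[ -]?){12,15}\d\b")),  # 13 to 16 digits
    ("PHONE", re.compile(r"\+?\d[\d\s().-]{7,}\d")),    # also hits some dates
]


def mask_pii(text: str) -> str:
    """Replace each pattern match with a [LABEL] placeholder."""
    for label, pattern in PII_PATTERNS:
        text = pattern.sub(f"[{label}]", text)
    return text


# The name survives: exactly the gap an NLP-based detector is needed for.
print(mask_pii("Hi, I'm Jane Doe, jane.doe@example.com, card 4111 1111 1111 1111"))
```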

Phase 5: Production Onboarding (Week 7-8)

- Full data load with automated quality gates

- Metadata published to enterprise catalog

- Data lineage integrated with existing documentation

- Training provided to analytics teams on new data source limitations

- Monitoring alerts configured for quality degradation

Result:

- Clean, well-documented data that analytics teams trust

- Clear metadata showing what each field means and where it came from

- PII properly handled, passing compliance audit

- Data quality issues caught before propagating to models and dashboards

- 67 useful fields onboarded instead of 247 confusing ones

- Reusable onboarding process for future partners

Risk Prevented:

- Compliance violation from improperly stored PII (potentially millions in fines)

- Models trained on poor quality data producing unreliable predictions

- Dashboards showing incorrect metrics due to misunderstood field definitions

- Months of rework when quality issues discovered after full integration

- Inability to troubleshoot data issues due to missing lineage documentation

- Security breach from PII stored in an unencrypted data lake

- Team paralysis from 247 undocumented fields no one understands

- Downstream system failures from unexpected data formats

- Lost institutional knowledge when team members leave

A Cautionary Tale from the Field

A team once built a recommendation engine that performed beautifully in internal testing. Stakeholders celebrated. Deployment was rushed.

In real-world usage, bias emerged, affecting certain user groups disproportionately. Public sentiment turned negative. The model was rolled back, trust was shattered, and months of work disappeared overnight.

Not because of poor algorithms. Because assumptions were never questioned and risks were never explored.

Another organization built dashboards filled with hundreds of metrics—impressive visual complexity. But when leadership tried to use them to make decisions, no clarity emerged. The charts were beautiful but meaningless.

Nobody had asked: “Why do we need these metrics, and how will they be used to act?”

In both situations, it wasn’t the technology that failed—it was the thinking surrounding it.

Scenario 3: The “Quick” Data Quality Fix

The Situation: Sales leadership complains that customer revenue reports show inconsistent numbers. They want it “fixed by Friday” because they’re presenting to investors.

The Reactive Response: “We’ll patch it.” The team identifies obvious errors, applies quick corrections, and updates the report.

The Thoughtful Response:

WHO:

- Who else uses this data beyond sales? (Discovery: Finance, marketing, and customer success all depend on the same data)

- Who created these reports originally? (Discovery: The person left the company; no documentation exists)

- Who has complained about this before? (Discovery: Finance flagged it 6 months ago but was told it’s “good enough”)

WHAT:

- What is causing the inconsistency—data source, calculation logic, or timing? (Discovery: Three different systems define “revenue” differently)

- What assumptions are baked into the current calculations? (Discovery: The report assumes all deals close on contract date, which is incorrect)

- What other reports might be affected? (Discovery: 15 other dashboards use the same flawed logic)

WHEN:

- When did the inconsistencies start appearing? (Discovery: After a CRM system upgrade 4 months ago that changed field definitions)

- When is the investor presentation really happening? (Discovery: Next month, not this week)

The Quiet Strength of Leaders Who Pause

Project management is not command and control. It’s stewardship of clarity. It’s protection of teams. It’s partnership with stakeholders. It’s discipline under pressure.

The strongest leaders are not loud. They do not rush. They do not pretend to know.

They ask. They listen. They reflect.

They think before committing. They speak after understanding. They act with intention rather than instinct.

Teams feel safer with them. Stakeholders trust them. Projects succeed under them.

Not because they always make the right decision—but because they always make a thoughtfully constructed decision.

The Leadership Mindset

Thoughtful project leadership begins with a belief:

Every request, every issue, and every decision deserves to be understood fully before it is answered.

This mindset requires:

- Humility — to ask questions rather than pretend to have answers

- Courage — to say, “I need more clarity before committing”

- Discipline — to examine consequences rather than chase approval

The most powerful tool a project leader possesses isn’t a framework, dashboard, or tracking tool. It’s the ability to think in questions—questions that reveal what is unseen, unsaid, and untested.

When decisions are made thoughtfully, they aren’t reactions; they’re commitments. They’re grounded in reality, supported by evidence, aligned with capability, and shaped with intention.

Such decisions can save hours of rework, weeks of delay, and sometimes millions of dollars lost to misalignment.

Closing Reflection

Projects may be filled with tools, frameworks, dashboards, KPIs, sprints, and milestones—but success is built on something far more fundamental:

The quality of decisions made along the way.

If decisions are made impulsively, even brilliant plans deteriorate.

If decisions are made thoughtfully, even uncertain journeys succeed.

Leadership begins the moment you choose to think instead of react—the moment you ask instead of assume—the moment you build clarity instead of chaos.

Think deeply.

Question fearlessly.

Decide intentionally.

Act confidently.

That is how projects succeed.

That is how teams grow.

That is how trust is built.

That is how leaders are made.

Discover more from SkillWisor
